Google's Pixel 6 and Pixel 6 Pro are stunning phones with a cutting-edge processor from Google that's designed to process AI-based features like nothing else on the market. Among the many features that Google debuted on the Pixel 6 series coinciding with the launch of its Tensor processor was Magic Eraser, a feature that was teased back in 2017 and finally delivered in at least some capacity on these phones.

Magic Eraser is designed to help you erase things from photos that just shouldn't be there, whether it was a photobomber that ruined the perfect moment, distracting folks in the background, or even an object that got in the way of the perfect shot.

But Google isn't the only one who has been working on this kind of tech. In many ways, Adobe Photoshop has been able to do it for years — although the new object selection tool in Photoshop 2022 is designed to make this "content-aware fill" function better — and Samsung launched a feature called Object Eraser with the Galaxy S21 series that does essentially the same thing Google's Magic Eraser does.

Object Eraser was even updated with the release of the Galaxy S22, adding tools that tone down reflections and shadows with a single click of a button.

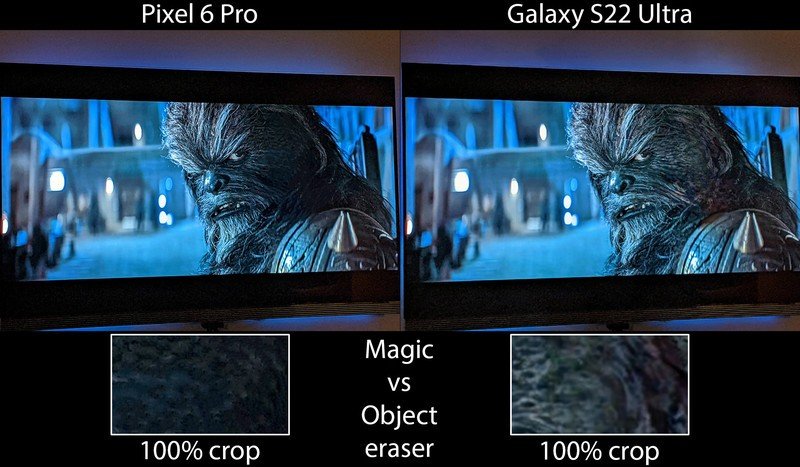

So who does it best? The Google Pixel 6 was the winner in our original tests, with its Tensor-powered object identification algorithms and Google smarts. But does the Magic Eraser vs Object Eraser conclusion change with the release of the Galaxy S22? Let's take a look.

How easy is it to edit a photo?

I imported each of these photos into either the Pixel 6 or Samsung Galaxy gallery app, used Magic Eraser or Object Eraser to erase people or objects, and saved the results afterward. I also included examples from Photoshop 2022 (which is available for $10 a month on Adobe Creative Cloud), where I highlighted people or areas using the object selection tool, followed by using "content-aware fill" from the edit menu.

The idea was that most people likely wouldn't want to spend significant amounts of time editing a photo, so comparing automated tools makes the most sense.

On a UX level, I found that Google's Magic Eraser was the easiest to use for erasing objects. When first opening a photo and selecting Magic Eraser, the tool will quickly scan the photo and attempt to identify any objects it thinks need to be erased. If you like the automatic selections, you can press a single "erase all" button and get a result in mere seconds.

Manual selections can be made by circling any object you'd like to erase, and Google's Tensor processor spends a moment to process the object and remove it. After an object is erased, you can draw a shape around an area with your finger in an attempt to better clean it up.

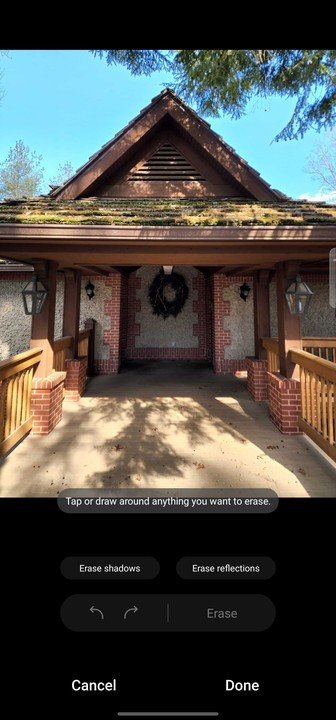

Samsung's Object Eraser works on three different levels now. Previously, there were just two avenues to edit photos.

First, upon opening a photo and selecting Object Eraser from the menu, you'll need to manually select any objects that need to be erased by either tapping or circling them. Tapping an object will attempt to identify the object and erase it from the scene.

Alternatively, you can draw a shape around any area, and Object Eraser will attempt to fill in the area without identifying objects. That last part is important because Google's method always tries to identify shapes no matter if you tap or draw around an object.

The third is a new set of tools Samsung debuted alongside the Galaxy S22, which make it easy to tone down shadows and reflections by selecting either of the two buttons in the UI. This automated method is pretty hit-or-miss, and I found that Samsung's tool would successfully identify shadows or reflections to erase just as often as it would find nothing to do.

But this shadow and reflection tool gives Samsung an edge over Google or Photoshop, as it could help brighten up dark photos and erase distracting reflections without circling anything at all.

Adobe Photoshop has long been the professional tool of choice, but recent years have brought easier-to-use tools to the platform. For this test, I used Photoshop 2022 on my Windows 11-based PC that I regularly work on.

Photoshop 2022 introduced a new object selection tool that's a refined version of similar tools from the past. Object selector works essentially identically to what you'll find on Google and Samsung smartphones but also gives more granular options if you so choose to use them. By default, though, a single click with the tool will initiate a scan — which can take 10-20 seconds on some computers — and will then identify individual objects it detects in blue.

Google's Magic Eraser was the easiest to use and made the fewest mistakes.

Holding shift and clicking on other objects will include them in the selection. After that's done, you can initiate an automated content-aware fill by hitting the backspace key or shift+F5. Alternatively, heading to the Edit menu and selecting "Content-Aware Fill" will bring up a more advanced interface where you can make more granular sample selections for Photoshop to draw from for a more accurate fill.

For this test, I simply used the object selection tool and then hit shift+F5 to achieve the simplest and most automated result.

Galaxy S22 vs Pixel 6

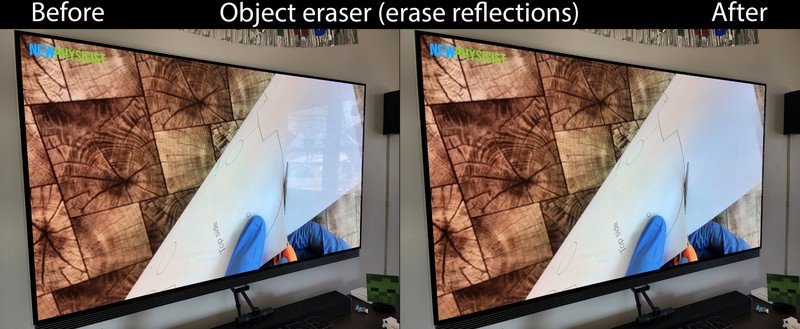

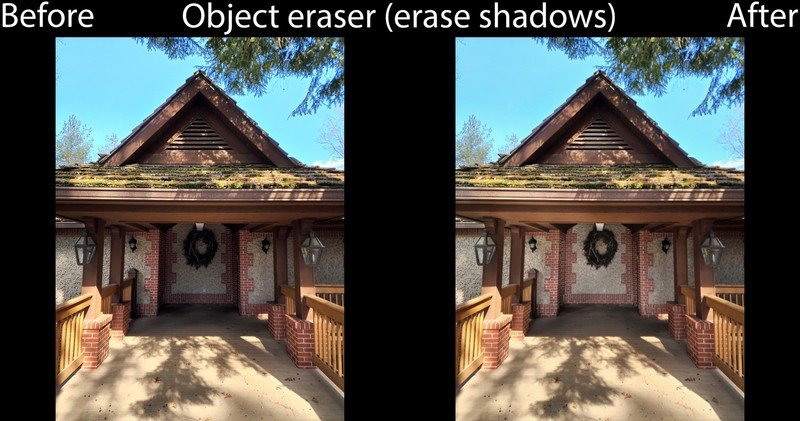

Since Samsung updated Object Eraser in One UI 4.1 with the release of the Galaxy S22, I wanted to get some refreshed images to see what has improved. Specifically, I wanted to highlight the two new tools that can help clean up photos just a bit after you've taken them.

In the two examples above, I used the automated "erase shadows" and "erase reflections" buttons that are now present in Samsung Object Eraser. In these two examples, the tools worked marvelously, erasing reflections and brightening up shadows with a single button click. Neither photo has been touched up any further after pressing either button.

So can we achieve similar results with Google's Magic Eraser, even without these buttons? If we're just erasing reflections, the answer is a resounding "yes."

In this image of one of my favorite new Star Wars characters, Krrsantan, the reflection of my living room light ruins what otherwise might be a great portrait shot of him. Both Object Eraser and Magic Eraser did a marvelous job of removing the reflection, but I like how Samsung's algorithm added some interesting texture and color to Krrsantan's head in place of the reflection.

Now, how about shadows? Google Photos on a Pixel 6 doesn't have an easy way to remove shadows, especially not with one single button click. Sure, you could bring up shadows across the entire image but there's no granular option like Samsung provides. There's really no way to put these to the test, so you'll have to look at the example above of Samsung's shadow erase utility to see how it can improve your photos.

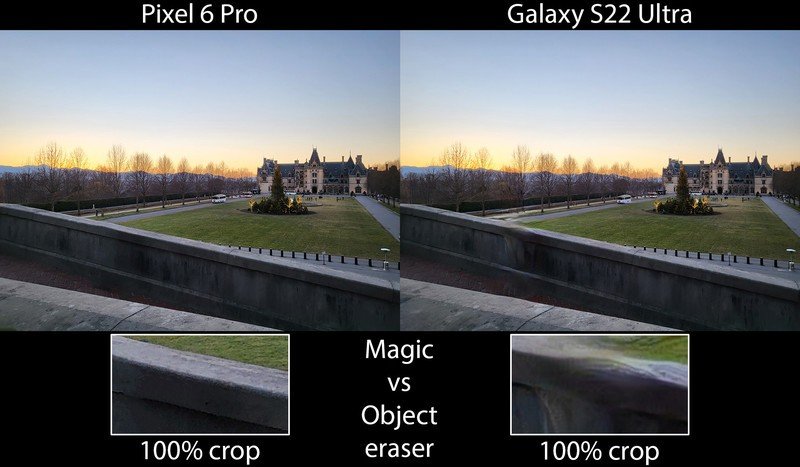

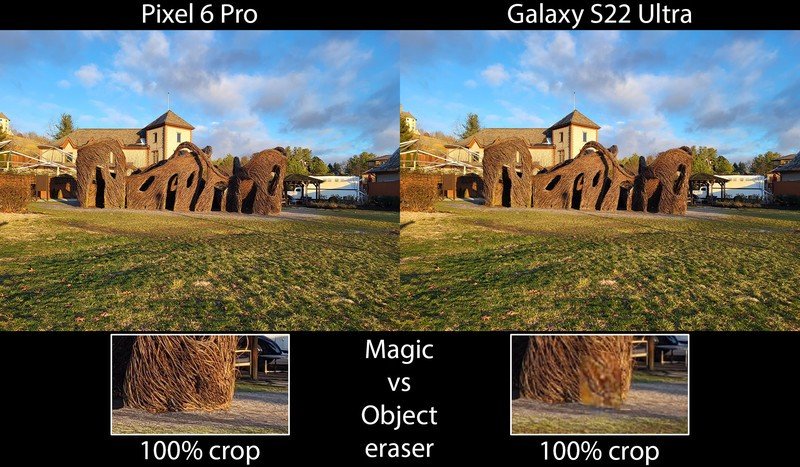

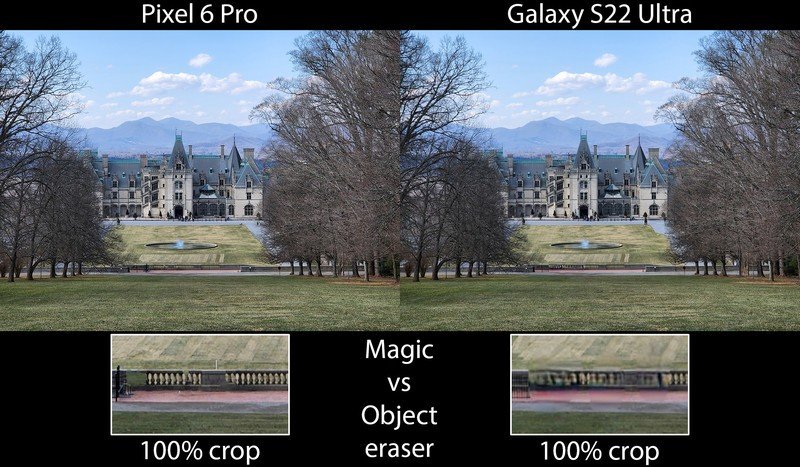

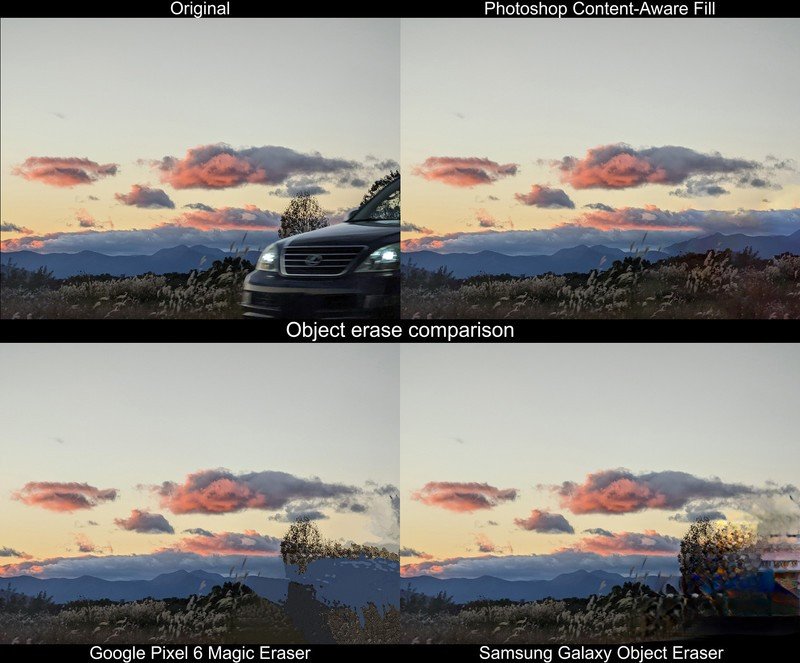

Now for actually erasing objects in photos. Did Samsung improve this with the release of the Galaxy S22, which features a much faster processor than before? Not really.

Above, you'll find the original photo followed by the result of both Magic Eraser and Object Eraser. In every example, Google's algorithm did a better job of figuring out what the scene should look like without the objects and people I attempted to erase.

Whether it's large foreground objects (as is the case in the first example with the person right up front) or little background signs and other objects, Google's Magic Eraser seems to have little or no problem identifying the pattern needed to create a convincing optical illusion.

Removing people from photos

Most of us take selfies these days thanks to the general quality of front-facing cameras in phones. It helps us capture memories of events spent with other folks and loved ones, but that doesn't mean you want to include all those other people around you in the frame. I grabbed a few examples of when other people garbled up a photo I took of me and my family and tried to erase them from digital existence, Thanos style.

In all three of these examples, someone in the background was found to be a distraction to the people in the foreground. The algorithms each company uses to identify objects are a little bit different, and the results are affected by those methods.

In two out of the three examples here, the Pixel Magic Eraser does a better job than the competition. In my experience so far, I've found that, once objects are removed, Google's Magic Eraser does a better job of giving users the ability to clean up the photo afterward by continually drawing circles around an area.

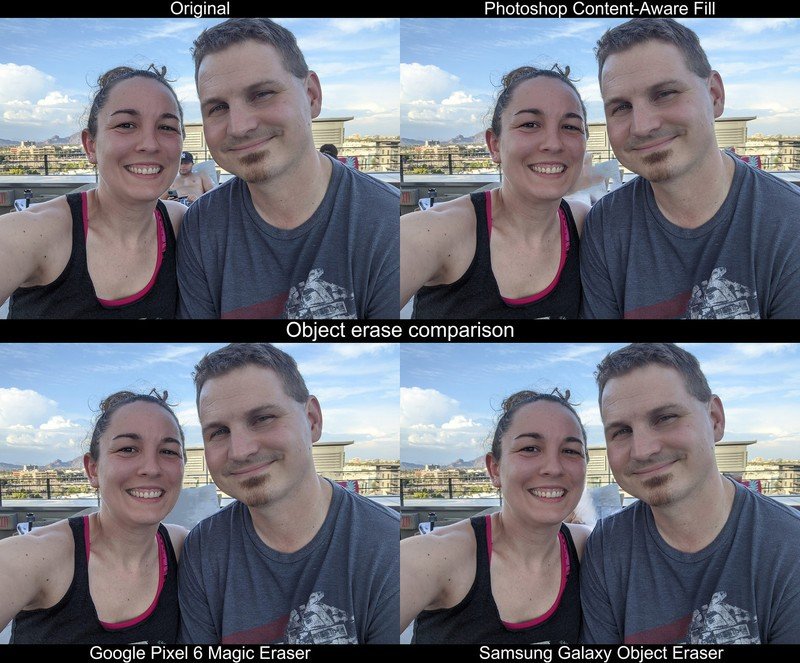

In the first photo with my wife and myself, Samsung and Google were pretty neck-and-neck in performance, while Photoshop's object selection tool ended up creating strange ghost-like remnants of the folks in the scene. Further circling of the background helped clean up the "floating" tent on the Pixel shot, while I just got more repeating noise with Samsung's tool.

The second photo of my son shows similar results, although Samsung's tool did a better job of identifying the background pattern and more accurately repeating it in a convincing way, whereas it's easy to identify that something is wrong with the photo on Google's result. Photoshop's tool failed spectacularly, again.

The last photo of my wife and me at the pool was photobombed with an almost perfectly-framed shirtless dude in the background. No thanks. Google's method completely erased him from the scene in a convincing way, only leaving a small artifact around my wife's shoulder where mine meets hers.

Samsung's Object Eraser did a good job of erasing him but left a slight bit of a distracting pattern. Trying to clean this up by drawing around it only made it worse. Photoshop's method, once again, simply wasn't good. However, this time, the tool didn't leave that strange ghost-like halo around where the person used to be.

Google clearly won this round.

When things just get in the way

Photobombers aren't the only thing that you might want to erase from an image. Sometimes, powerlines get in the way. Other times, it might be an obnoxious red sign or a car that drove in front of the camera at just the wrong time. Either way, these tools can help erase those silly mistakes.

This first example was captured on my Pixel 6 Pro while the car was in motion and, as such, was impossible to just retake. That left me with two options: toss the photo, or attempt to remove the car. I ended up posting this one on Instagram using the Pixel Magic Eraser result, but only because Instagram's default aspect ratio is a square and largely cut off the part where the car was erased.

In this case, neither Samsung nor Google did a stellar job of removing the car and making the result look believable, although Google's result is a bit better. Photoshop, on the other hand, absolutely knocked it out of the park. Since the trees were left floating after erasing the car, I drew a shape around each of them and used the automated content-aware fill to fix it. Three clicks and it looks, quite literally, perfect.

This second example is a bit less cut-and-dried than the previous ones. The sunset was rather rosy and gorgeous on the day I took this (although I missed peak color by about 30 seconds), but those power lines are just ugly and ruin the shot. Pixel Magic Eraser identified all of the power lines in the way automatically and removed them. On top of that, any artifacts left behind simply look like beautiful wisps of cloud.

Neither Photoshop nor Samsung's tool could identify the lines upon clicking them, so I was left to draw shapes around each power line and erase it. That's a far less efficient way to do things. In the end, Photoshop's result is a bit worse than Google's — especially where the power lines intersect with the trees — and Samsung's didn't turn out quite as well, either.

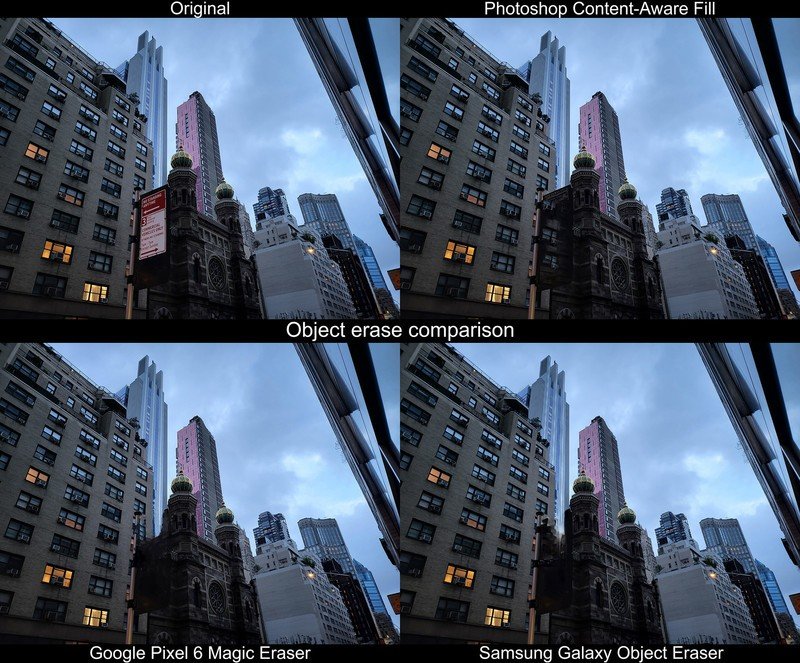

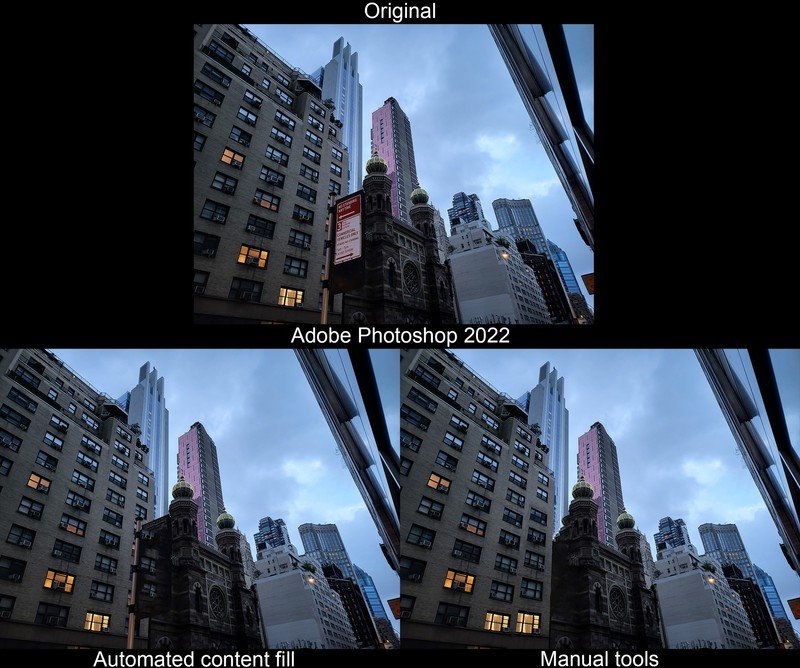

I took this on one of my trips to New York City and loved the way the tall buildings reflecting the setting sun framed the gorgeous architecture of the religious building below. Unfortunately for me, I didn't pay much attention to the red parking sign that sullied up the view a bit.

In this scenario, I'm not sure any of these technologies deserves a win for what I originally intended. None of them could clear out the silver pole without significant visual artifacts, so I focused solely on the distracting red sign. Photoshop did the worst, as it repeated too many of the surrounding textures and ended up more distracting as a result.

Google and Samsung mostly darkened up the area, making it appear more like a shadow in the scene than anything else. In this case, Google's result is the winner and the least distracting of all three, although I wasn't super happy with any of the results.

This last one is the least modified of any of the photos, and it was to test how each technology handled harsh shadows in low-light environments. It's not much of a distraction at all given the rest of the scene, but the loudspeaker on the side of the building looked a bit out of place to me.

In this particular scenario, I prefer how Photoshop handled the pseudo shadow in place of where the loudspeaker used to be. It looks natural and not at all out of place if you don't know where to look. Google's Pixel Magic Eraser was runner-up, and the artifacts Samsung's Object Eraser created place it in a clear third place.

How does it all work?

To understand a little bit more of how our phones can suddenly identify actual objects in pictures without much difficulty, I had a chat with our own Jerry Hildenbrand, who is an expert in machine vision.

Modern phones have multiple cameras for many different reasons. While some of them are to provide a wider angle for photos or to grant better zoom detail, these sensors can also be used to approximate depth data since they provide a stereoscopic image, much like our eyes work.

The difference is that, unlike the human brain, a computer doesn't really understand what it sees without significant amounts of training. In the case of Magic Eraser, Object Eraser, or Content-Aware Fill, none of these technologies are able to use this depth data and, therefore, are at a perceptual disadvantage for precise object detection.
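For context, here is a rough sketch of how a stereoscopic camera pair can approximate depth. It uses the standard pinhole stereo relation (depth equals focal length times camera spacing, divided by how far a point shifts between the two views). The function name and the example numbers are illustrative assumptions, not values from any of the phones tested here.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Estimate the distance in meters to a point seen by two parallel cameras.

    focal_length_px: lens focal length expressed in pixels (assumed value).
    baseline_m: spacing between the two camera lenses in meters (assumed value).
    disparity_px: horizontal shift of the same point between the two images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero shift means the point is effectively at infinity")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical phone-like setup: lenses 12 mm apart, 1500 px focal length.
near = depth_from_disparity(1500, 0.012, 12)  # larger shift, closer object
far = depth_from_disparity(1500, 0.012, 6)    # smaller shift, farther object (roughly 3 m)
```

The closer an object is, the more it shifts between the two views, which is the same cue our eyes rely on.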

Despite this, however, Google's Tensor does an impeccable job of automatically identifying objects in a scene it thinks shouldn't be there. Part of this is done via edge detection, which Google has been refining since it introduced portrait mode on the Pixel 2 back in 2017, despite that phone having only a single rear camera.

While it had a hardware disadvantage, Google's algorithm proved to be the best way of detecting an object in the photo and artificially blurring the edges to make it appear like it was taken with a camera lens that has naturally shallow depth of field. There's little doubt that Google is using the same, or at least a very similar, algorithm to detect objects with Magic Eraser.

Edge detection works by identifying contrast between objects, as well as unnaturally straight or perfectly circular lines.
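To make the contrast part of that idea concrete, here is a minimal sketch using the classic Sobel operator on a grayscale image stored as a list of rows. This is a textbook technique chosen for illustration; Google has not published which edge detector Magic Eraser uses, and the threshold is an arbitrary assumption. Detecting straight or circular lines would need an additional step (such as a Hough transform) that isn't shown here.

```python
def sobel_edges(gray, threshold=100):
    """Return a 2D list of booleans marking pixels with strong local contrast."""
    # Sobel kernels estimate how quickly brightness changes left-right (gx)
    # and top-bottom (gy) around each pixel.
    gx_kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    gy_kernel = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
    height, width = len(gray), len(gray[0])
    edges = [[False] * width for _ in range(height)]
    # Border pixels are skipped because they lack a full 3x3 neighborhood.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    pixel = gray[y + dy][x + dx]
                    gx += gx_kernel[dy + 1][dx + 1] * pixel
                    gy += gy_kernel[dy + 1][dx + 1] * pixel
            # Gradient magnitude: large where brightness changes sharply.
            edges[y][x] = (gx * gx + gy * gy) ** 0.5 >= threshold
    return edges


# Toy image: dark on the left, bright on the right.
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edge_map = sobel_edges(image)
```

In this toy image, only the pixels on either side of the dark-to-bright boundary are flagged, while the flat regions are ignored.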

The second part is machine learning itself, and parts of Tensor are known to be built expressly for it. As Jerry puts it, millions of objects are run through an algorithm until the computer (in this case, the processing chain inside your phone) recognizes different objects correctly.

Tensor is expressly built to process machine learning tasks better than other processors.

But "correctly" by machine learning standards isn't always the same as what humans think of when identifying objects. In the machine learning world, correct can actually be "wrong," yet, so long as it's consistent, it's still "correct." Calling a cat a hamster, as Jerry used in his example to me, is technically correct since it identified it as an animal and could easily be used to identify an object worth keeping or erasing from a photo.
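Jerry's cat-and-hamster example can be sketched in a few lines. The label table and function name below are hypothetical and only illustrate the idea that a wrong fine-grained label can still lead to the same keep-or-erase decision.

```python
# Hypothetical mapping from fine-grained labels to the coarse category
# that would actually drive a keep-or-erase decision.
COARSE_CATEGORY = {
    "cat": "animal",
    "hamster": "animal",
    "dog": "animal",
    "person": "person",
    "car": "vehicle",
    "street sign": "sign",
}


def erase_decision_matches(predicted_label: str, true_label: str) -> bool:
    """True if a mislabeled object still lands in the same coarse category."""
    predicted = COARSE_CATEGORY.get(predicted_label)
    return predicted is not None and predicted == COARSE_CATEGORY.get(true_label)


# Calling a cat a hamster is "wrong" to a human but still usable.
cat_as_hamster = erase_decision_matches("hamster", "cat")
# Calling a cat a car would change how the object is treated.
cat_as_car = erase_decision_matches("car", "cat")
```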

Once those two parts are used to identify an object correctly, the last piece of the puzzle is successfully tricking the human eye.

Jerry details that "demosaicing is how colors (and thus edges in a 2D photo) are 'created' by tricking the eye. If you balance the right amount of red, blue, green, and black you force the eye to see every color, including the ones that can't be reproduced 100%."

That same data can be used to "uncreate" an object, as Jerry says, and all that's left is to identify nearby patterns and colors to determine what might have been behind that object.
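As a very rough illustration of filling a hole from nearby colors, the sketch below repeatedly replaces each erased pixel with the average of its four neighbors. This is a simple diffusion-style fill picked for clarity. It is not how Magic Eraser, Object Eraser, or Content-Aware Fill are known to work, and it cannot reproduce textures or patterns the way those tools attempt to. All names here are assumptions.

```python
def fill_from_neighbors(gray, mask, iterations=200):
    """Fill masked pixels by repeatedly averaging their up/down/left/right neighbors.

    gray: 2D list of brightness values.
    mask: 2D list of booleans, True where the erased object used to be.
    """
    height, width = len(gray), len(gray[0])
    result = [[float(value) for value in row] for row in gray]
    masked = [(y, x) for y in range(height) for x in range(width) if mask[y][x]]
    known = [result[y][x] for y in range(height) for x in range(width) if not mask[y][x]]
    if not known:
        raise ValueError("at least one pixel must be outside the mask")
    # Start every erased pixel at the average of the untouched pixels.
    start = sum(known) / len(known)
    for y, x in masked:
        result[y][x] = start
    for _ in range(iterations):
        updated = [row[:] for row in result]
        for y, x in masked:
            neighbors = [
                result[ny][nx]
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                if 0 <= ny < height and 0 <= nx < width
            ]
            updated[y][x] = sum(neighbors) / len(neighbors)
        result = updated
    return result


# A smooth left-to-right gradient "sky" with one bright "object" pixel in the middle.
photo = [[10 * x for x in range(5)] for _ in range(5)]
photo[2][2] = 255
erase_mask = [[(y, x) == (2, 2) for x in range(5)] for y in range(5)]
filled = fill_from_neighbors(photo, erase_mask)
```

On a smooth background like this gradient, the erased pixel blends back in. On busy backgrounds, a fill this simple would leave an obvious smear, which hints at why real tools sometimes produce repeating patterns or ghostly artifacts.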

A "correct" identification in the world of machine learning doesn't mean the same thing as "correct" from a human perspective.

In the end, the results will only be as good as the algorithm itself, which was trained via machine learning. Where Magic Eraser — and other tech like it — gets funky is when it runs up against the parts of building a photo that our eyes can discern best: sharpening, white balance, and contrast.

Many of these difficulties are why Google says that Magic Eraser is really just intended for erasing background objects rather than ones in the foreground. Given the results of our test here, I'd say that checks out.

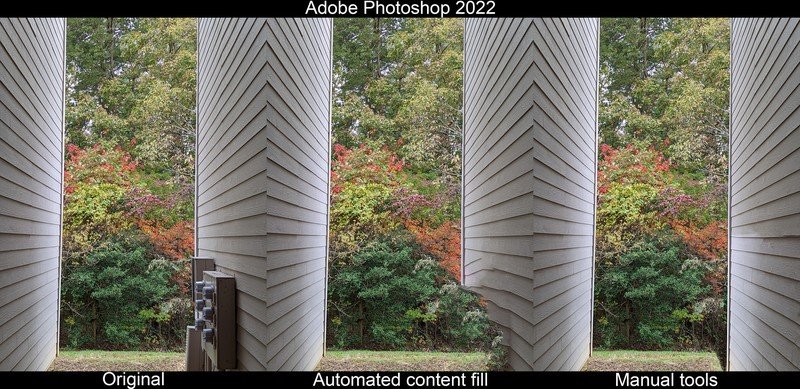

The manual way

So we've determined that Google's method is most certainly the best automated method among all three of these, but what about the rest of the powerful tools available to Photoshop users? If you're trying to perfectly recreate a scene (as perfect as can be recreated, anyway), Photoshop is the only place to do it.

Photoshop's automated tools might not be as good as what you'll find on your phone, but the other ones make up the difference if you take enough time to learn them.

Being able to manually cut and paste, then use powerful tools like Photoshop's healing tool, can produce significantly better results than automated tools. That should be no surprise to anyone, and I really didn't spend much time at all on either of these photos for the manual comparisons.

Manually editing a photo achieves better results, but automated tools will help more people achieve positive results.

But here's the deal: almost no one is going to want to take the time to learn these tools well enough to do a good job of it. That's not even to consider paying for Photoshop and actually doing the work. Google and Samsung's automated eraser tools are incredible and show exactly how machine learning can be used in real-world situations that are actually useful.

Magic Eraser isn't quite magic, but it does an incredible job of helping users clean up photos without much effort at all. Samsung's own version of the same concept has been around longer, and while it generally doesn't do as good a job as Google's Tensor-powered one, it's certainly better than any automated tools people have had access to before it.