Here's why the Pixel 4's Neural Core chip could be a photography game-changer

There's a reason why the Pixel 3 has been lauded as the best camera phone. Google uses software algorithms inside its HDR+ package to process pixels, and when those algorithms are combined with a little bit of machine learning, some really spectacular photos can come from a phone that may have standard-issue hardware.

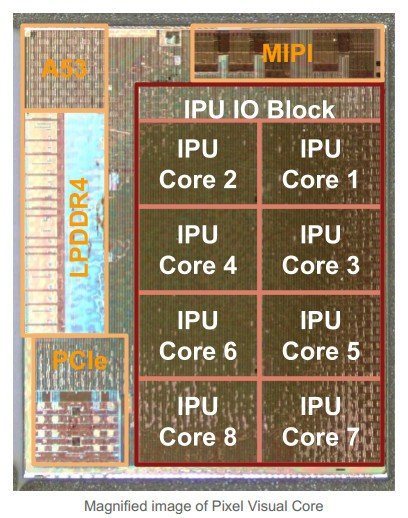

To help run these algorithms, Google used a specialized processor called the Pixel Visual Core, a chip we first saw in 2017 with the Pixel 2. This year, it appears that Google has replaced the Pixel Visual Core with something called a Pixel Neural Core.

Google may be using neural network techniques to make photos even better on the Pixel 4.

The original Pixel Visual Core was designed to help run the algorithms behind Google's HDR+ image processing, which is what makes photos taken with the Pixel 2 and Pixel 3 look so great. It used some machine learning and what's called computational photography to intelligently fill in the parts of a photo that weren't quite perfect. The effect was really good; it allowed a phone with an off-the-shelf camera sensor to take pictures as good as or better than those from any other phone available.

If the Pixel Neural Core is what we believe it is, the Pixel 4 will once again be in a fight for the top spot when it comes to smartphone photography. Here's why.

Neural Networks

It seems that Google is using a chip modeled after a neural network technique to improve the image processing inside its Pixel phone for 2019. A neural network is something you might have seen mentioned a time or two, but the concept isn't explained very often. Instead, it can seem like some Google-level computer mumbo-jumbo that resembles magic. It's not, and the idea behind a neural network is actually pretty easy to wrap your head around.

Neural networks collect and process information in a way that resembles the human brain.

Neural networks are groups of algorithms modeled after the human brain. Not how a brain looks or even works, but how it processes information. A neural network takes sensory data through what's called machine perception — data collected and transferred through external sensors, like a camera sensor — and recognizes patterns.

These patterns are numbers called vectors. All the outside data from the "real" world, including images, sounds, and text, is translated into vectors, then classified and cataloged as data sets. Think of a neural network as an extra layer on top of things stored on a computer or phone, one that contains data about what it all means: how it looks, what it sounds like, what it says, and when it happened. Once a catalog is built, new data can be classified and compared to it.
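
To make the "catalog and compare" idea a little more concrete, here is a minimal Python sketch. It is not how Google or anyone else actually builds a neural network; it just averages a few made-up example vectors per label and matches a new vector to the closest average (a nearest-centroid comparison). The `CATALOG` contents, the three-number feature vectors, and the function names are all assumptions for illustration.

```python
import math

# Hypothetical catalog: each label maps to a few example vectors.
# The three numbers per vector are made-up features, for illustration only.
CATALOG = {
    "cat": [[0.9, 0.8, 0.1], [0.8, 0.9, 0.2]],
    "sweater": [[0.1, 0.2, 0.9], [0.2, 0.1, 0.8]],
}


def centroid(vectors):
    """Average a list of equal-length vectors, component by component."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def distance(a, b):
    """Straight-line (Euclidean) distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify(vector, catalog=CATALOG):
    """Return the label whose averaged examples sit closest to the new vector."""
    centroids = {label: centroid(examples) for label, examples in catalog.items()}
    return min(centroids, key=lambda label: distance(vector, centroids[label]))


# A new, unseen vector gets compared against the catalog.
print(classify([0.85, 0.75, 0.15]))
```

A real network learns far richer vectors from millions of examples, but the last step looks similar in spirit: new data is turned into numbers and compared against what is already known.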

A real-world example helps it all make more sense. NVIDIA makes processors that are very good at running neural networks. The company spent a lot of time scanning and copying photos of cats into the network, and once it was finished, the cluster of computers running the neural network could identify a cat in any photo that had one in it. Small cats, big cats, white cats, calico cats, even mountain lions or tigers were cats because the neural network had so much data about what a cat "was".
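
Learning from labeled examples can be sketched in a few lines of Python. This is a single artificial "neuron" (a perceptron), which is vastly simpler than anything NVIDIA or Google runs, and the two-number feature vectors in `TRAINING` are invented for illustration. The idea is the same, though: show it examples, nudge its numbers whenever it guesses wrong, and eventually it can label things it has never seen.

```python
# Hypothetical training data: (features, label), where 1 = "cat" and 0 = "not cat".
# The two numbers per example are made-up features, for illustration only.
TRAINING = [
    ([0.9, 0.8], 1),
    ([0.8, 0.9], 1),
    ([0.7, 0.7], 1),
    ([0.1, 0.2], 0),
    ([0.2, 0.1], 0),
    ([0.3, 0.3], 0),
]


def predict(weights, bias, features):
    """Return 1 ("cat") if the weighted sum is positive, otherwise 0."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0


def train(examples, epochs=50, rate=0.1):
    """Perceptron rule: adjust weights and bias only after a wrong guess."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)  # -1, 0, or 1
            weights = [w + rate * error * x for w, x in zip(weights, features)]
            bias += rate * error
    return weights, bias


weights, bias = train(TRAINING)
# Try the trained neuron on two vectors that were not in the training data.
print(predict(weights, bias, [0.85, 0.85]))
print(predict(weights, bias, [0.15, 0.15]))
```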

With that example in mind, it's not difficult to understand why Google would want to harness this power inside a phone. A Neural Core that is able to interface with a large catalog of data would be able to identify what your camera lens is seeing and then decide what to do. Maybe the data about what it sees and what it expects could be passed to an image processing algorithm. Or maybe the same data could be fed to Assistant to identify a sweater or an apple. Or maybe you could translate written text even faster and more accurately than Google does it now.

It's not a stretch to think that Google could design a small chip that could interface with a neural network and the image processor inside a phone, and it's easy to see why it would want to do it. We're not sure exactly what the Pixel Neural Core is or what it might be used for, but we will certainly know more once the phone is "officially" announced and we see the actual details.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.