Google I/O 2025 Live Blog: Android XR, Gemini, Android 16 and the latest news

Join us during the Google I/O 2025 Keynote!

Google's biggest conference of the year kicked off today, May 20th at 1pm ET and we want to make sure you're caught up on everything we know so far.

Many upcoming features and news have already been announced during The Android Show: I/O Edition, which took place last week. We'll be walking you through what is coming to Android 16 and Gemini, but if you prefer an overview of everything announced during The Android Show, we have just the thing.

If you can't make it to Mountain View for I/O 2025, that doesn't mean you have to miss out on the Keynote. As has been the case for years, you can watch the event, and we have all of the details for you.

And if you want to get into the nitty-gritty, enough sessions are happening at I/O to make your head spin.

So grab a coffee or tea, and enjoy our Live Blog!

Morning everyone!

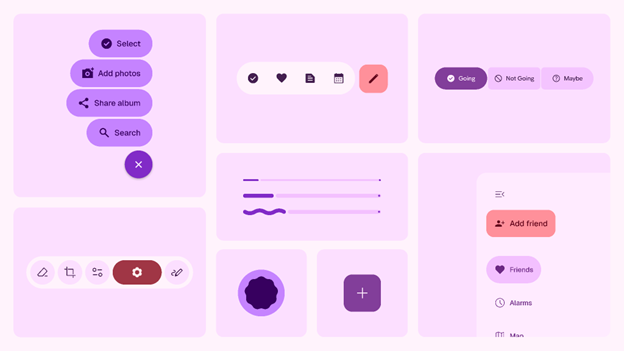

Technically, Google I/O 2025 is already underway, as Google gave us an early look at what's coming with The Android Show: I/O Edition. Material 3 Expressive brings about one of the more drastic UI overhauls that Google has undertaken, bringing it to our phones with Android 16 and smartwatches via Wear OS 6.

From everything we've seen so far, it's not just about making your phone and watch more personal; it also aims to improve aspects that you might not pay close attention to. This includes things like UI animations and buttons, all while retaining the essence of what Material You was all about.

With Wear OS 6, we're finally getting more customization options, and we don't mean more watch faces. Material 3 Expressive brings the ability to change the color of the entire UI, so you can either match the color to your phone, or opt for something a bit different.

Not only that, but various UI elements are also being redesigned in order to make smartwatches easier to interact with. Buttons and notifications will now "hug the display," maximizing the screen real estate and hopefully resulting in a much better experience.

One of the more obvious focal points of I/O 2025 is going to be Gemini. The Android Show got the ball rolling, as Gemini will soon be available on the likes of Wear OS, Google TV, Android Auto, and Android XR. This comes following Google's replacement of Assistant with Gemini earlier this year, so the company must feel as though it's evolved enough to make it more widely available.

Arguably, the platform getting the biggest Gemini upgrade is Android Auto, with Google claiming it operates as a "more intuitive assistant." You'll still be able to perform simple tasks, but Gemini is also capable of understanding more complex requests.

It shouldn't come as too much of a surprise, but even Google TV is getting a Gemini upgrade, albeit one that's a bit smaller than other services. Once available, you'll be able to summon Gemini and ask it for specific recommendations.

In the announcement, Google said "you can ask for action movies that are age-appropriate for your kids." Plus, you can ask generalized questions, and along with a response, Gemini and Google TV will provide related videos for you to watch.

We know that Gemini is going to be a big deal at I/O 2025, but there's a pretty good chance Android XR steals the show. Despite there not being any Android XR-powered hardware just yet, Google gave us a glimpse at some of the things that Gemini will be able to do.

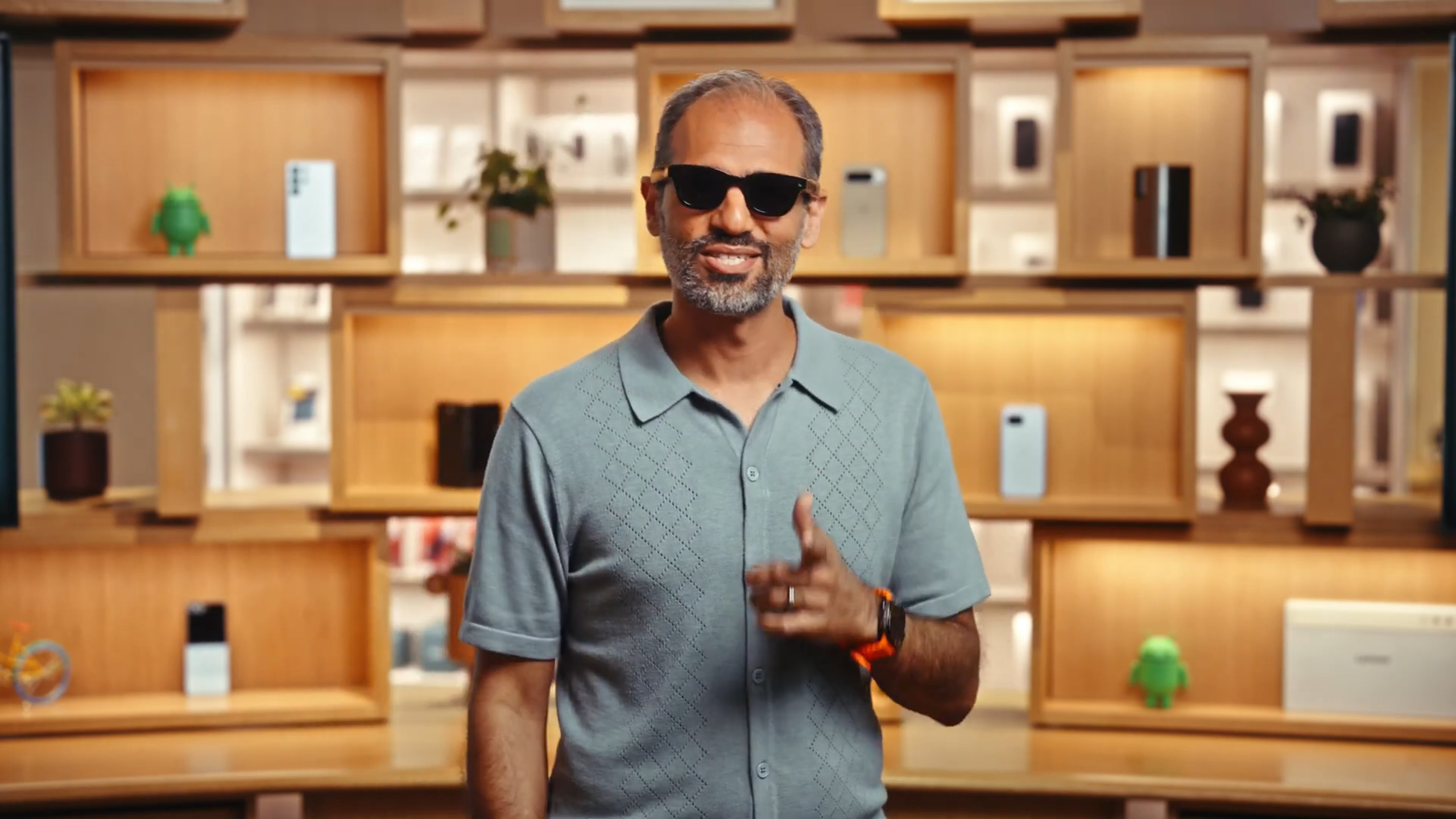

At one point during The Android Show, Android president Sameer Samat appeared on screen wearing some sunglasses that look similar to Ray-Bans. However, as my colleague Nick Sutrich points out, these are likely "Google and Samsung's upcoming smart glasses, complete with tinted lenses."

While the smart glasses are pretty awesome in their own right, that's not all we're expecting in the way of Android XR at Google I/O 2025. For a brief moment, Samsung's Project Moohan was spotted in the background during The Android Show.

Hopefully, this is where we will learn even more about the upcoming XR headset, as it's rumored to launch sometime in "mid-2025." And what better time to spill the beans than at Google I/O, when the platform will be on full display. Pun intended.

There was one surprising omission when it came to the most recent batch of announcements, and that's the lack of any mention regarding Nest devices. It's been over four years since the most recent Nest Hub was released, and even longer since the Nest Audio was introduced.

However, Google has hardly mentioned its smart home platform at all, besides announcing that it's dropping support for a variety of devices. We can't help but wonder what plans Google has for the platform, whether it be in the way of new hardware or not.

I'll be keeping my fingers crossed that this will be a surprise announcement during the I/O 2025 Keynote.

Something else that we're curious about is whether Google will share any upcoming plans for Chromebooks, tablets, or Android's revamped Desktop Mode. The latter made a surprise appearance at the end of The Android Show, but we aren't exactly sure what device it was being shown on.

And while rumors regarding a potential "Pixel Laptop" have subsided, we can't help but wonder what the future holds for Chromebooks and tablets. Neither is going away any time soon, but there hasn't been much on that front since the Samsung Galaxy Chromebook Plus and Lenovo Chromebook Duet 11 debuted last Fall.

It's pretty obvious that Google is trying to walk the fine line between catering to developers and just turning I/O into a party for everyone. That being said, we're also curious as to what the structure of the Keynote will actually be. With Material 3 Expressive out of the way, and Android 16 likely arriving in June, is there even anything else for Google to share?

On the flip side, if you just browse through the various planned sessions, you can see that we're going to be spending quite a bit of time sifting through everything. Even with four main "Focus Areas," there are a bunch of sessions for each, with varying degrees of complexity.

Nevertheless, Google I/O is one of the more exciting events of the year, and we can't wait to see what's to come.

A lot has happened in Google’s world over the course of the last year. Google I/O 2025 will commence tomorrow at 1PM ET, where we’ll learn much more about what we can hope to see from the confines of Mountain View over the next 12 months.

Yesterday, we covered pretty much everything that was shown off during The Android Show, with Google pulling back the veil a bit for the first time. As we try to figure out what the next year will offer, all we really have to do is jump in the time machine to last year’s I/O.

Last year wasn’t quite as bad as I/O 2023, when Google said “AI” more than 120 times over the course of its 2-hour-long Keynote. However, AI and Gemini were still the primary focus at I/O 2024, with the Gemini 1.5 Flash and 1.5 Pro models being showcased.

This was also where Google first debuted Gemini Live, which essentially lets you have a conversation with Gemini, including being able to interrupt it mid-sentence. At first, this was only available for Gemini Advanced subscribers, but it’s now available for everyone.

Google even recently updated Gemini Live, offering the “ability to share your Android camera or screen within your Gemini Live conversation.” And the best part is that you don’t need to sign up for Gemini Advanced, as it’s available for everyone.

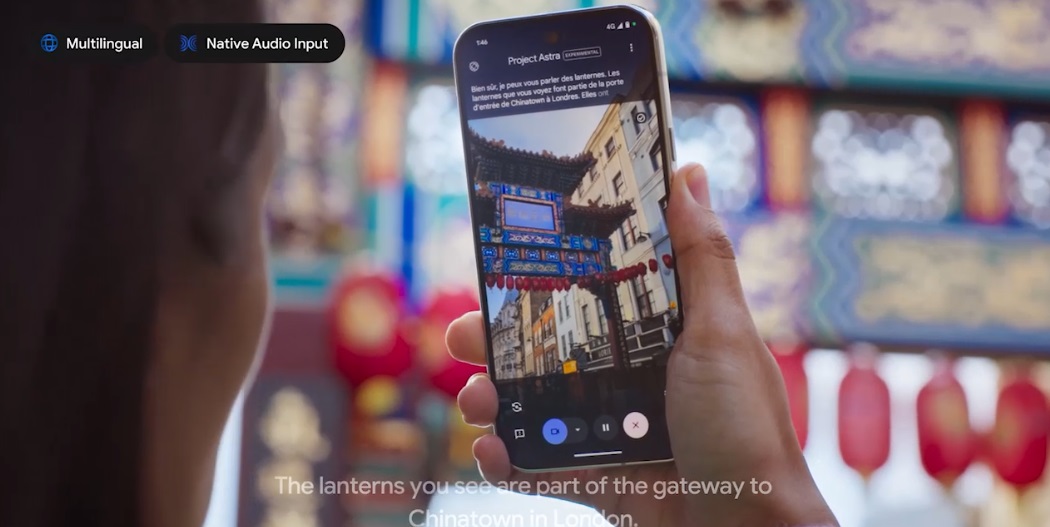

I/O 2024 also provided some rather concrete evidence that Google was working on something revolutionary for the AR/VR/XR space. Project Astra was announced during the Keynote, which is simply “a multimodal AI assistant.” In the promo video, a person is shown wearing glasses with built-in cameras and microphones, making it possible to ask Gemini questions and get answers without needing to use your phone.

Fast forward to now, and Android President Sameer Samat wore sunglasses that look similar to the Ray-Ban Meta glasses. These weren’t explicitly said to be the rumored Gemini-powered smart glasses, but it doesn’t take a rocket scientist to put two and two together here.

Google AI Overview has been one of the biggest visual changes to Search in years. Whether you’re looking up the answer to a question or trying to do a bit of research, AI Overview is right at the top of the page.

Instead of just throwing a bunch of links at you, AI Overview attempts to summarize the information provided within the most popular search results. More importantly, this feature also provides quick access to whatever websites were cited within the explanation.

A recent report revealed that “Google’s AI Overview have led to a 49% increase in search impressions.” Although click-through rates on provided links are down by as much as 30%, Google’s commitment, along with regular updates, means that we’re a far cry from Gemini telling us to put “glue cheese on pizza.”

It would be another four months before the final version of Android 15 was released, with Android 15 Beta 2 arriving on Day 2 of I/O 2024. The update was relatively minor, but it introduced new features such as “Private Space,” letting you hide apps in a separate area on your phone.

Android 15 Beta 2 also brought about the floating taskbar that could be pinned to the bottom of the screen. It’s a feature that has become absolutely essential for Pixel 9 Pro Fold and Android tablet owners, providing quick access to the apps in your Dock. Along with being able to create App Pairs, these combos can be pinned to the dock, making multitasking even more seamless.

You didn't think that Google would make it through I/O 2024 without bringing some AI magic to Android, did you? Circle to Search, first introduced in January 2024, was updated to handle "complex math and physics problems." But Google didn't just implement the upgrade and call it a day. Instead, it ensured that you could see the steps, which could help you learn how to solve the problems on your own.

Additionally, Google announced that Gemini Nano with multimodality would be arriving on select Pixel phones later in the year. At the time, Google focused on how this functionality would improve Android's "TalkBack" feature by making it so devices could "process text with a better understanding of sounds, sights, and even spoken language."

To accompany Android 15, Wear OS 5 was introduced, which is actually based on Android 14. Nevertheless, this update offered a mixture of customization and health and fitness tracking enhancements while also aiming to improve battery life.

A variety of new watch faces were made available, in addition to four running form measurements: Ground Contact Time, Stride Length, Vertical Oscillation, and Vertical Ratio. This, along with new workout watch face complications and "debounced goals," showed us that Google was attempting to appeal to the Fitbit user base. As Fitbit wearables waned, the company hoped these changes would result in more interest in the Pixel Watch lineup.

Now that we've recapped what I/O 2024 had for us, it's time to try and figure out what I/O 2025 might have in store. As mentioned yesterday, we know that there will be as much AI and Gemini as Google can fit, but we expect to also learn more about the Android XR platform.

We don't need to go far in order to start putting the pieces of the puzzle together. The Google I/O 2025 website has a page dedicated to the variety of Keynotes and Sessions that will be on tap. Of these, there are four "Focus Areas," including AI, Android, Cloud, and Web.

Of course, these are geared towards developers, as this is a developer conference after all. But Google also makes these sessions available for anyone, even if you couldn't make your way to the Shoreline Amphitheatre to be there in person.

We know Google will have more to say about Android 16, especially after showing off Material 3 Expressive during The Android Show. However, we also can't help but wonder if the company will take the time to explain why Android is now on an "accelerated timeline."

Traditionally, a new version of Android is released in the Fall, with the first beta usually arriving a month or so before I/O. Android 16 changes all of that, as the first Developer Preview landed in November 2024, just a little more than a month after Android 15 dropped.

While we don't know yet for sure, leaks and rumors suggest an early-June release for the final Android 16 build.

Initially, we didn't think that Android 16 was going to be a massive update. With Beta 1, Google implemented changes that allow apps to better adapt to fit larger screens, such as when you're using a foldable and go from the cover screen to the inner screen.

Another new feature is called "Live Updates," which is similar to Apple's Live Activities. This puts a constantly-updating notification in your notification shade, providing up-to-the-minute information from various apps.

Beta 2 was focused on the camera, as a new "hybrid auto-exposure mode" was made available for developers. Plus, Google added support for AVIF formatting and UltraHDR support for HEIC-formatted images.

Earlier this month, Google announced that Gemini 2.5 Flash would be powering NotebookLM, while stating that the NotebookLM mobile app would be launching at I/O 2025. It seems that Google couldn't stand to wait just one more day, as the NotebookLM app is now available to download from the Google Play Store.

The upgrade to Gemini 2.5 Flash is important, as it brings "advanced reasoning techniques," and provides "more comprehensive answers, particularly to complex, multi-step reasoning questions." Until now, NotebookLM was only available on the web, with many hoping that a mobile app would be released. Thankfully for some, the wait is officially over.

Android 16 Beta 3 was an interesting one, as it primarily felt like a "bug fixes" update. This was also the first "Platform Stability Update," where Google confirmed that we would see another update in April before the final release sometime after that.

Besides squashing some bugs, Beta 3 added some new accessibility features, such as making it easier to read text for those with low vision.

Auracast support was also added, which is an "audio-sharing protocol that allows the host device to send audio to multiple outlets." This has been in the works for years, with Android Central's Managing Editor saying "it feels like Bluetooth's final form."

Lastly, and perhaps most surprisingly, Google hid a Terminal app in the Developer Options. It was fairly limited, but after a few taps, you would have a full Linux terminal right on your Pixel phone.

Can you believe it's been four years since Material You was first shown off as part of the Android 12 update? After making a variety of tweaks and minor changes over the years, "the most massive Android redesign ever" is on the way courtesy of Material 3 Expressive.

As you might suspect, the idea is to make your phone feel even more like an extension of yourself. But this isn't just about new color palettes or widgets; that's only part of the story. M3 Expressive will bring about overhauled designs to a variety of apps, while providing developers with the necessary tools to do the same.

Google didn't stop there, though, as it also peeled back the veil a bit, sharing how it came to the final design. According to the blog post, it underwent "46 rounds of design tweaks" and received feedback from more than 18,000 people.

To go along with these new changes, Android 16 and M3 Expressive will also introduce new floating toolbars, progress bars, buttons, and more. Some of these elements have even started appearing in apps, despite M3 Expressive not yet being rolled out to the masses.

Unfortunately, Google confirmed that Material 3 Expressive won't be arriving with the release of Android 16. Instead, it's said to begin rolling out to Pixel devices sometime "later this year."

One of the biggest complaints that some have with Wear OS is that it falls a bit short in the "polish" department. No, I don't mean to say that it doesn't look good, but Wear OS 5 doesn't take full advantage of the larger screen on the Pixel Watch 3.

Wear OS 6 looks to change that, and much more, as it too is getting the Material 3 Expressive upgrade. This means that your smartwatch is about to get a lot more personal, AND more customizable.

The best part of this is that Google is redesigning the interface so that it can truly take advantage of the entire screen. As pointed out by Senior Editor of Wearables & AR/VR, Michael Hicks, "Scrolled content will 'trace the curvature' of the rounded Pixel Watch display for smoother transitions, shrinking or expanding to match the available space."

Beyond all of the AI talk and whatever else Google has planned for the Keynote, there's a level of building excitement for something else entirely. Android XR made its official debut in a surprise announcement in December 2024, with Samsung's Project Moohan making its first appearance at the same time.

If you need any proof that Android XR will be a talking point, just watch The Android Show. During the video, Android president Sameer Samat tossed on a pair of sunglasses, which was a weird flex, until you realized they're probably Google's smart glasses.

Of course, more specifics weren't shared, besides Samat teasing that more announcements were to come at I/O, "and maybe even a few more really cool Android demos."

In the lead up to Google I/O 2025, Shahram Izadi, Google's Head of AR and XR, appeared at TED 2025 donning a set of glasses that looked rather conspicuous. It was later confirmed that Izadi was wearing the Samsung Project HAEAN smart glasses, which are rumored to be released in Q3 2025.

Throughout the presentation, Izadi provided a few demos of what the smart glasses are capable of. Much of this, unsurprisingly, is powered by Gemini, with one example being when the glasses were able to recall where a hotel room key had been left.

Basically, the TED 2025 presentation was Google's way of telling Meta to get ready for a battle. The Ray-Ban Meta glasses have dominated the space since their introduction in 2021, with that lead continuing to grow following the release of the second generation in late 2023. Since then, Meta has remained committed to releasing regular and useful updates. Meanwhile, recent rumors suggest the next iteration is in line to debut sometime next year.

However, Samsung isn't the only company we're expecting Google to partner with for Android XR hardware. Xreal has been a leader in the space for years, continuing to gain popularity, while pushing the envelope in terms of what's possible given current hardware and software limitations.

But no matter what, tomorrow may signal a massive shift in the future of wearables.

But what about Project Moohan? Well, that’s a different beast entirely, as it's not designed with “Everyday Carry” in mind. Instead, it’s more of a competitor to the Apple Vision Pro and Meta Quest, powered by the Android XR platform.

There are a lot of similarities between Project Moohan and the two aforementioned headsets, but that’s for good reason. Apple and Meta have figured out the best way for everyone to enjoy various content formats in a headset, so as a result, we end up with a design that is tweaked to Samsung’s liking.

Rumors suggest that Samsung is implementing 4K Micro-OLED displays, offering “better visual fidelity than Apple Vision Pro.” This is due to the rumored displays featuring a resolution of 3,552 × 3,840, compared to the 3,660 × 3,200 resolution of the Vision Pro.
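For a rough sense of what that difference means, here's a quick back-of-the-envelope sketch in Python. It only multiplies out the two resolutions quoted above (the Project Moohan figure is still a rumor), and the variable and function names are ours, purely for illustration.

```python
# Resolutions as quoted in the post; the Project Moohan figure is rumored.
MOOHAN = (3552, 3840)
VISION_PRO = (3660, 3200)


def pixel_count(resolution):
    """Return the total number of pixels for a (width, height) pair."""
    width, height = resolution
    return width * height


moohan_pixels = pixel_count(MOOHAN)
vision_pro_pixels = pixel_count(VISION_PRO)
ratio = moohan_pixels / vision_pro_pixels

print(f"Project Moohan (rumored): {moohan_pixels:,} pixels")
print(f"Apple Vision Pro: {vision_pro_pixels:,} pixels")
print(f"Difference: about {(ratio - 1) * 100:.0f}% more pixels")
```

Going by those numbers alone, the rumored panel would pack roughly 16% more pixels, though pixel count is only one part of visual fidelity.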

That alone is enough to provide some confirmation that this won’t be as affordable as the smart glasses. Instead, we’re expecting to see it come in anywhere between $1,000 and $1,500.

Get ready for another round of betas. Android 16 Beta 4.1 just rolled out, and while the beta program is limited to Pixels, that's likely to change over the course of this week. Most manufacturers start revealing their plans for the next version of Android at I/O, and it shouldn't be any different this time.

OPPO, Vivo, Honor, Xiaomi, Motorola, Tecno, and iQOO will likely announce that their latest devices will be eligible for the Android 16 beta, significantly increasing the accessibility of the build. This gives users a rare chance to run vanilla Android on devices that otherwise have overt customization, and considering how stable Android 16 has been up to this point, we're excited to see how it turns out on non-Google devices.

With Google already sharing Android news at The Android Show last week, I/O 2025 will be all about AI. We might see plenty of new announcements around Gemini, and how the AI assistant is being integrated into Google’s vast product portfolio, including Search, Gmail, and Photos.

Google is once again making a play for the connected ecosystem, and we will likely be hearing about Android XR, Android Auto, and wearables. There may even be new hardware to demo at the event, but we’ll only know more about that after the keynote.

Talking about AI, Google may roll out new AI plans to take advantage of its latest models. A leak from the start of May highlighted a Gemini Ultra plan coming to Google One, and we’ll be seeing if Google announces anything around this later today.

AI is pivotal to Google’s long-term strategy, and while it didn’t have early-mover advantage, the search giant made considerable progress with its latest Gemini models. It remains to be seen how much an Ultra plan would cost; for context, OpenAI’s Pro plan with unlimited access to its reasoning models and GPT‑4o costs $200 a month.

Google will obviously need to undercut OpenAI in this regard, and we’ll have to wait and see what the brand decides to do. Google’s current plans include Google One storage as standard, and it will be interesting to see if the brand decides to roll out a limited plan that just includes access to its Gemini models.

Google will also likely detail what’s new with Project Mariner, the AI agent it showcased at the end of last year. Google says Mariner will deliver the “future of human-agent interaction,” with the agent able to undertake actions on a browser on behalf of the user. Actions include booking tickets, buying something online, or looking up a subject.

Google is obviously being cautious about how it rolls out this tech, and it is using humans to evaluate the AI agent’s actions and provide confirmation. But outside of a demo touting the Chrome extension, we didn’t get much in the way of information around the feature, and that may change today.

The big day is here! In just a few hours (okay, more than a few), the Google I/O 2025 Keynote will kick off, where we will learn about everything Google has been working on. After the Keynote, there are a boatload of developer sessions, many of which will provide further context to whatever is announced.

If you’re like us and want to sit back and see what will be announced, we’ve got you covered. Just bookmark the page, catch up on everything we’re expecting, and set an alarm for 1 PM ET. Until then, we're not stopping with our Live Blog. Stay tuned!

Last year, a bunch of us were in attendance, including Managing Editor Derrek Lee, Editor-in-Chief Shruti Shekar, Senior Contributor Brady Snyder, and Senior Editor Nick Sutrich.

This year, we've got Senior Editor Michael Hicks and Senior Contributor Brady Snyder on the floor, and they will be reporting live for us!

Besides AI, we’re expecting to learn more about Android 16, Material 3 Expressive, and, of course, Android XR. If The Android Show was anything to go by, Michael and Brady might even be able to get some hands-on time with some new hardware.

For a few of us here who couldn’t make the trek out to the Shoreline Amphitheatre, we’ll be diving deep into the myriad of I/O Sessions. As of our most recent count, there are four focus areas comprising more than 70 sessions in total, not including the Google Keynote or the Developer Keynote that takes place at 4:30 PM ET.

Google's annual developers' conference is just hours away! You can catch all the action online by tuning into the official live stream via Google's I/O page and its YouTube channel.

To make sure you don't miss a moment, simply click the livestream link below once the conference begins, and consider hitting the "Notify Me" button for a quick reminder.

In the meantime, here's what you can expect to see at I/O this year.

There is sure to be some overlap between sessions, but Google tries to do a pretty good job at keeping things separate when possible. If you’re wondering what sessions we’re interested in, here are a few:

What’s new in Android: Explore new Android 16 features and the future of Android development.

Android notifications and Live Updates: Android 16 introduces Live Updates, a new class of notification templates that helps users keep track of user-initiated journeys for things like rideshares, deliveries, and navigation.

Under the hood with Google AI: Learn how Gemini is reshaping knowledge and productivity, building with AI, revolutionizing search, and more.

Accelerating Smart Home innovation with Home APIs: Explore Google Home's latest developer tools and technologies for building innovative and engaging smart home experiences.

Unlock user productivity with desktop windowing and stylus support: Desktop windowing lets users manage multiple tasks, while the Ink API simplifies supporting stylus input, enhancing precision and creativity.

Adaptive Android development makes your app shine across devices: Learn why and how to build apps and games that create delightful experiences across the Android mobile device ecosystem — including phones, tablets, foldables, Chromebooks, cars, and XR.

Besides the obvious topics, such as Android and Gemini, we will hopefully be learning about even more. For instance, there are actually quite a few sessions about Chrome, and we can’t help but wonder whether any of these will have ties to ChromeOS.

On the flip side, Google TV and Google Home only have one listed session each, which doesn’t leave us feeling great about any potential new advancements being announced. Even still, Android 16 for TV will be arriving this year, so the corresponding session is definitely on our watchlist.

Personally, I’m quite interested in the idea of AI agents, but I have practically zero experience when it comes to coding. When looking through the list, I did notice a session titled “Build no-code intelligent Agents with AppSheet, Gemini, and Vertex AI.” Rest assured, I’ll be watching that when time permits to see what that’s all about.

As we get closer to kick-off, I figured now would be a good time to talk about a few things that I'm either excited about or hoping to see. The first of which should come as little surprise, but I'm curious about whether Desktop Mode in Android 16 will be shown off.

Earlier this week, Jerry wrote about how "Android 16 is Google getting serious about foldables," and I couldn't agree with him more. In the piece, Jerry explains how we've been seeing a lot of focus put on foldable phones, with tablets largely taking a back seat. And as a fan of both form factors, I can't help but wonder what Google has up its sleeve.

Oh, would you look at that! Apple picked a rather conspicuous time to announce that its own developer conference, WWDC 2025, is taking place on June 9. Speaking of which, recent reports suggest that Apple won't be putting much of a focus on Siri. Instead, it will be more about Apple Intelligence, with Siri taking a back seat. Meanwhile, we already know that Google will have Gemini front and center throughout I/O.

Sometimes you can't help but chuckle seeing how companies and others in the industry react or try to grab headlines before a competitor makes an announcement. Shortly after Apple announced WWDC, OpenAI CEO Sam Altman had the following to say:

chatgpt daily active users have increased >4x over the last year. messages/day by much more than that. at the same time, the engineering team has greatly increased reliability and is now making real progress on speed. significant scale to be doing this at; great work! (May 20, 2025)

Obviously, Altman isn't calling out Google directly, but the timing of such a statement is kind of funny. It's almost as if OpenAI is a bit worried about what improvements are set to come to Gemini.

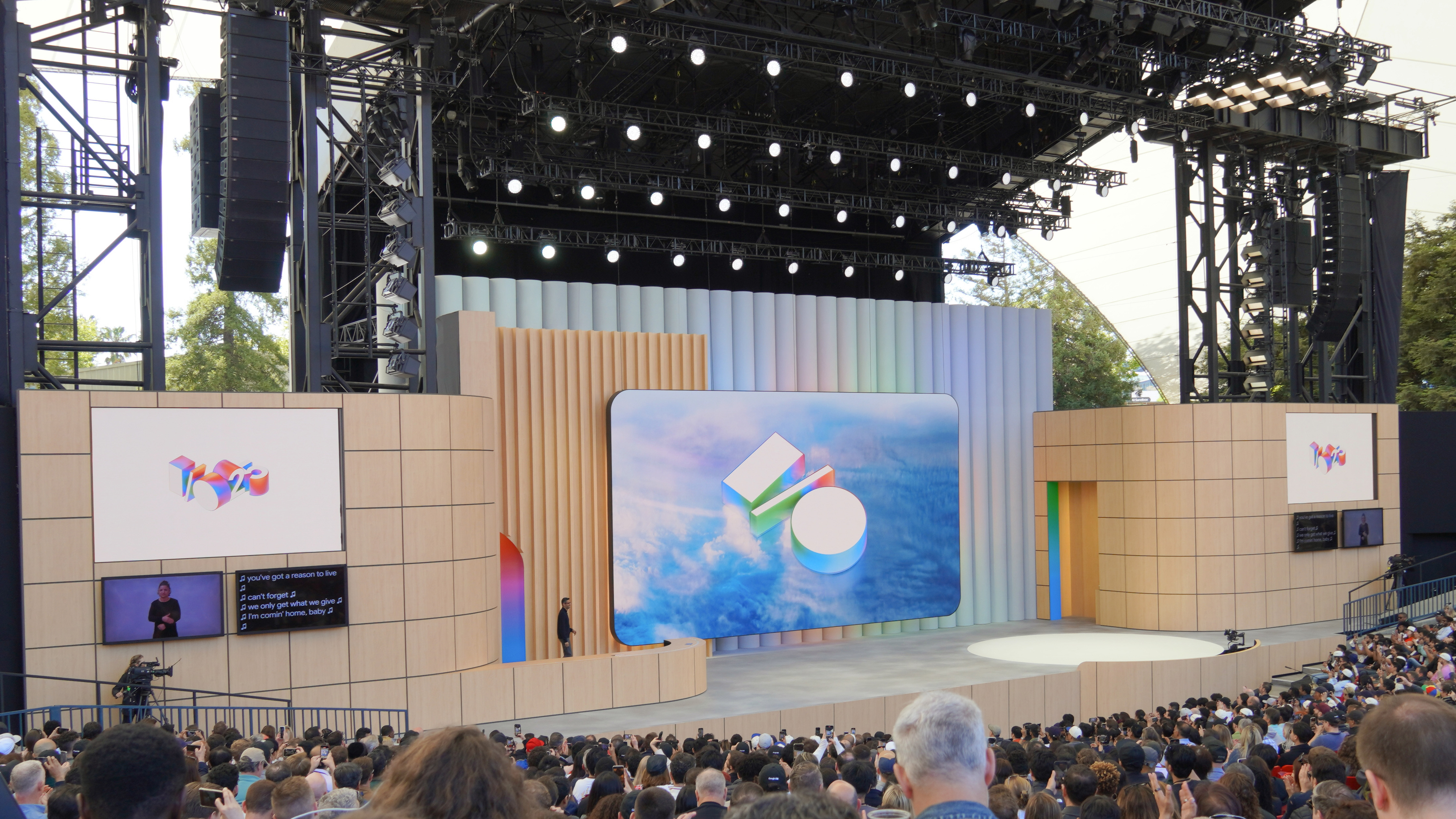

You know what they say, early bird gets the worm. AC Senior Contributor, Brady Snyder, is already on site and decided to tease those of us who couldn't make it by showing off the stage at Shoreline. This one's on me, but yesterday, Michael sent a picture after he registered for I/O.

Just look at how happy he is!

One hour to go! And to celebrate, the Google I/O 2025 Livestream has officially started over on YouTube. There's not much going on with it at the moment, but we wouldn't be surprised to see something like an interactive game to help you kill time until the Keynote begins.

Did you know that there's actually a second Keynote today? The Developer Keynote starts at 4:30 PM ET, and is where Google will share "the latest updates to our developer products and platforms."

Not only that, but there's actually a Livestream Playlist on YouTube, offering a way to easily watch the available live sessions for different "Focus areas."

30 minutes to go and now we have Toro y Moi on stage to provide a "performance and an experiment." Basically they'll be "jamming with the computer, and the computer will be jamming with me." This looks pretty awesome.

Don't mind us, we're just enjoying the vibes. 15 minutes left to go now. Nothing major has leaked, but we're just seeing a lot of excitement for today's announcements over on social media.

It's TIMEEEEEE! The Google I/O 2025 Keynote has officially commenced! The gang is ready to go, are you?

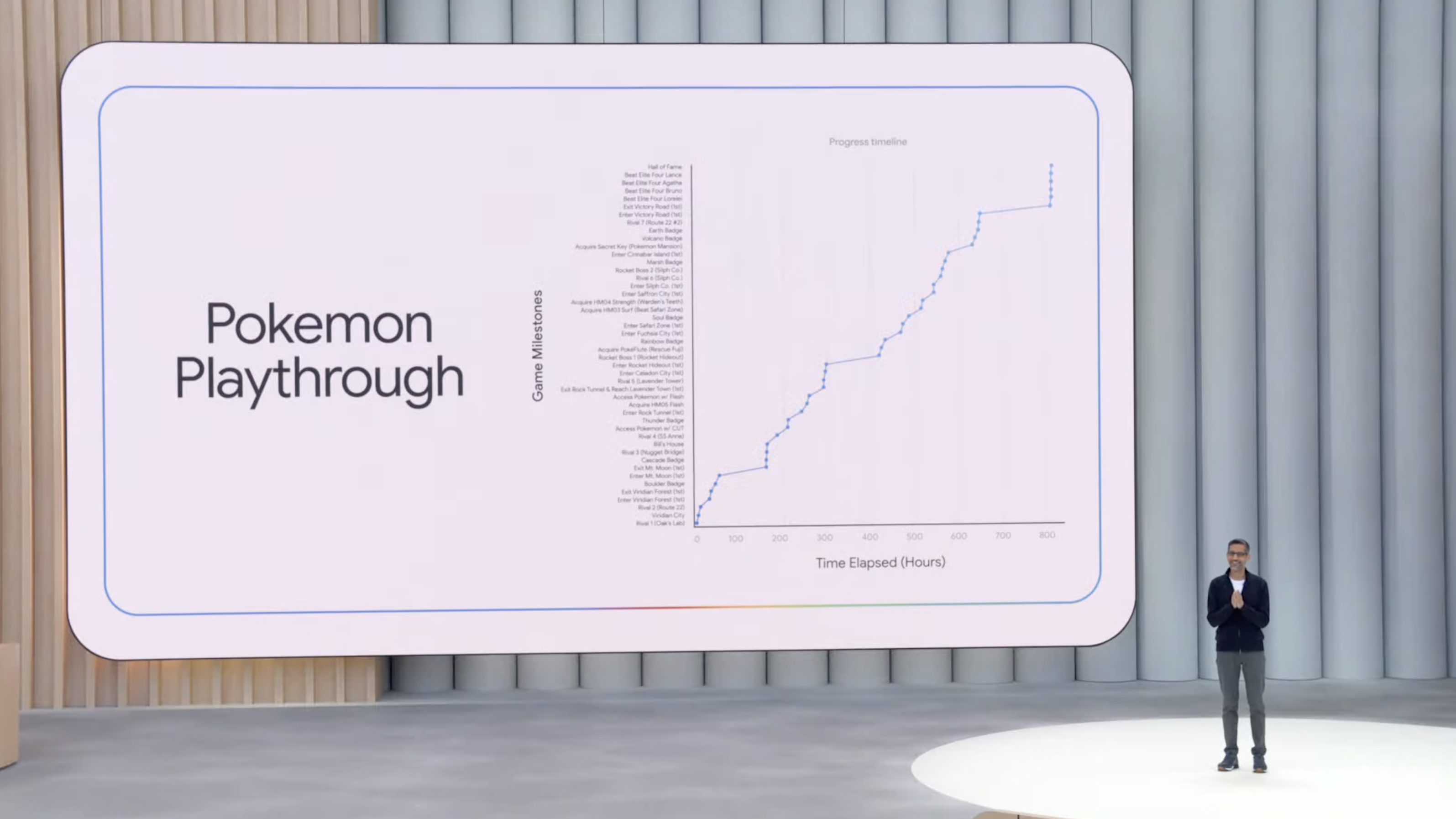

Did you know Gemini beat Pokemon Blue? I sure didn't. That's pretty incredible.

Holy crap. The Gemini app has more than 400 million users, and AI Overview has more than 1.5 billion users every month.

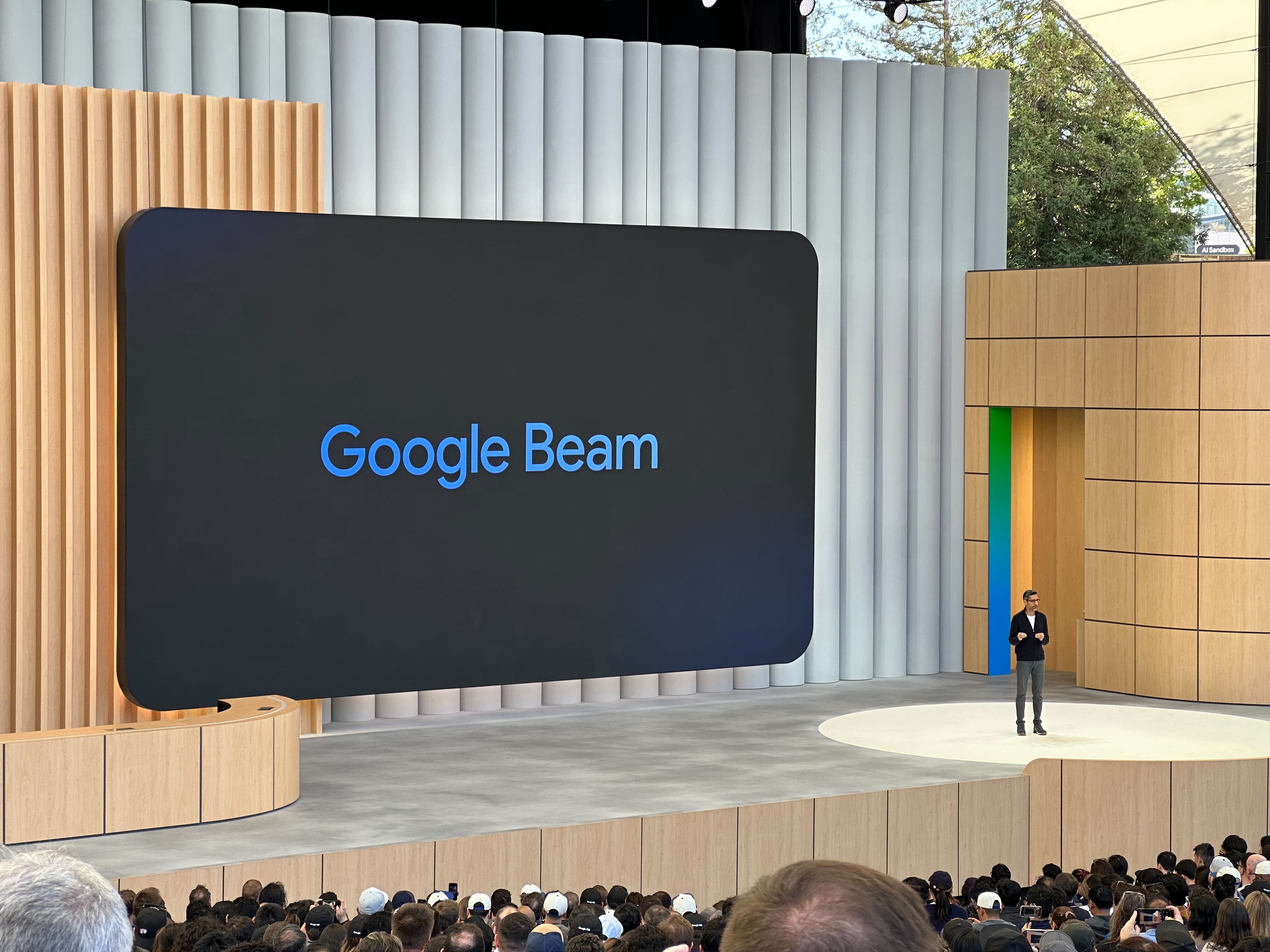

Google Beam is an "AI-first communications video platform." It converts a 2D video stream into a 3D stream, rendering you on compatible screens, and being precise down to the millimeter.

The first device will be available in partnership with HP later this year.

Google Meet is also getting an upgrade later this year with real-time translation.

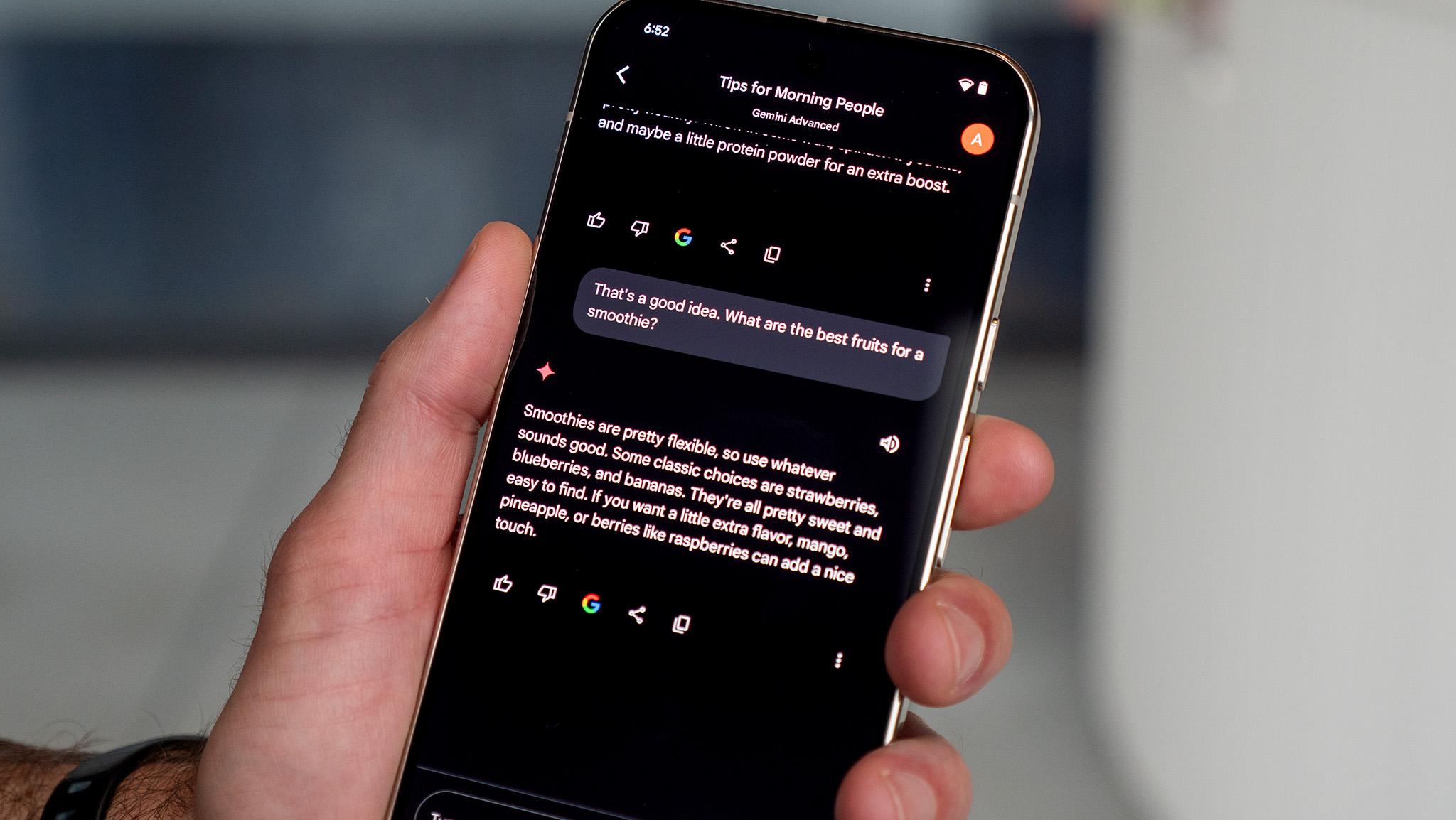

Gemini Live camera and screen sharing is coming to both Android and iOS starting today. I can't wait to start playing around with it and seeing what Gemini Live can do.

Google announced Project Mariner late last year, and now its features will soon be coming to the Gemini API. This is the feature that allows you to enable Gemini to perform tasks on your computer, without any input from you.

Speaking of which, Agent Mode is coming soon, which is another way to let Gemini perform tasks based on what you're trying to achieve, without doing it yourself.

Gmail is getting Personalized Smart Replies this summer for Gemini subscribers. We expect a lot of the newest features announced will be limited to Gemini subscribers first, before rolling out to the masses later.

It's Google DeepMind time, as Demis Hassabis is introduced by Sundar.

Gemini 2.5 Flash is being introduced and is "better in nearly every dimension" and will be available alongside Gemini 2.5 Pro sometime in June. However, you can also try out this new model in Google AI Studio, Vertex AI, and the Gemini app.

New previews are coming for text-to-speech thanks to Gemini 2.5 Flash and Pro. It works in 24 languages, and can switch between languages (and switch back) "all with the same voice."

Native audio output is available today in Gemini.

Gemini 2.5 Flash is now 22% more efficient, meaning that you'll get the same responses but using fewer tokens. Plus, Google is adding "Thinking budgets," letting you set the number of tokens it can use before responding.

Since Gemini is multimodal, you can update your code using nothing more than a sketch, all without having to learn complex APIs and coding languages. Google is bringing this functionality from Gemini 2.5 Pro to a bunch of AI-coding apps, along with its own suite of coding products.

Gemini Diffusion generates code five times faster than Google's lightest 2.5 model. It's able to parse and analyze the prompt in the blink of an eye. Seriously, if you blinked, you missed Gemini solving the problem that was shown on screen.

It looks like Project Astra is getting ready to come to phones, as Google wants Gemini to be a "Personal AI Assistant." It also plans to bring this to Android XR, which is the first mention of the new platform today! I'm sure Nick and Michael got pretty excited there.

Now, Sundar is back on stage, talking about Google Search, starting with AI Overviews. The feature is being used in more than 200 different countries and regions. Sundar says this is "one of the most successful launches in search in the past decade."

Google Lens apparently grew by more than 65% over the past year, which is simply incredible.

AI Mode is looking to transform the way you interact with Google Search. It's almost like having a Gemini chat right from the Search bar. With just a click or a tap, AI Mode can answer more complex questions, and you can even ask follow-up questions.

AI Mode will soon get personalized suggestions based on previous questions. It will also provide recommendations based on your interests, along with what's in your emails.

AI Mode Deep Search is coming later this year, and is able to provide a "full cited report in just minutes." It scans thousands of URLs based on your search terms, and double-checks itself before providing a response.

Complex analysis and data visualization are coming to Google Search via AI Mode later this year.

Project Mariner capabilities are coming to AI Mode this summer. In the demo, Search was able to find the right seats for an upcoming baseball game, taking the user to the ticket checkout in just a few moments.

Search Live uses your camera to "see what you see," giving you answers to any questions that you have in real time.

Google Search will soon be able to track prices and send you a notification when an item has reached your target price, and you can then buy it with just a tap. This feature will begin rolling out later this year.

And to help you get a better idea about whether something will fit, Google Search now lets you try on clothes virtually.

Personal, Proactive, and Powerful are the three pillars of Gemini 2.5 Pro. Five things are being launched in Gemini today:

- Gemini Live: Includes camera and screen sharing for free on Android and iOS starting today. In the coming weeks, Gemini Live will be able to connect to your favorite Google apps, such as Keep or Calendar.

- Deep Research: Starting today, you can upload your own files to guide the research agent. It will "soon" let you research across Google Drive and Gmail.

- Canvas: Interactive space for co-creation. You can transform a report with just a tap, and even "vibe code."

- Gemini in Chrome: "Your personal AI assistant for browsing the web." Automatically understands the context of what's on the page. Rolling out this week to Gemini subscribers in the US.

- Imagen 4: Coming to the Gemini app. "More nuanced colors and fine-grained details."

I was told that I had no choice but to tell you this joke from Nick:

"When you put a bunch of Gemini-made videos together they call it an Imagen Heap"

Veo 3 is available TODAY! It now comes with native audio generation, generating sound effects, dialog, and background sounds, all with a prompt.

The cat's out of the bag for everything that Google announced today, but you might be surprised to learn that Google wasn't the only one with something to share today. Here's what you might've missed:

- Xreal unveiled its Android XR-powered smart glasses at I/O, but that wasn't the only announcement

- Google just laid out its game plan to make you (and developers) care about Android XR

- Google introduces new AI tools to help creatives direct like a pro

- New Google AI Pro and $249/month Ultra subscription announced at I/O

- Google Workspaces gets supercharged with Gemini to help you work smarter

Google used Veo 3 to make a video of a giant chicken helping a car fly over the Grand Canyon and now I've officially seen enough.

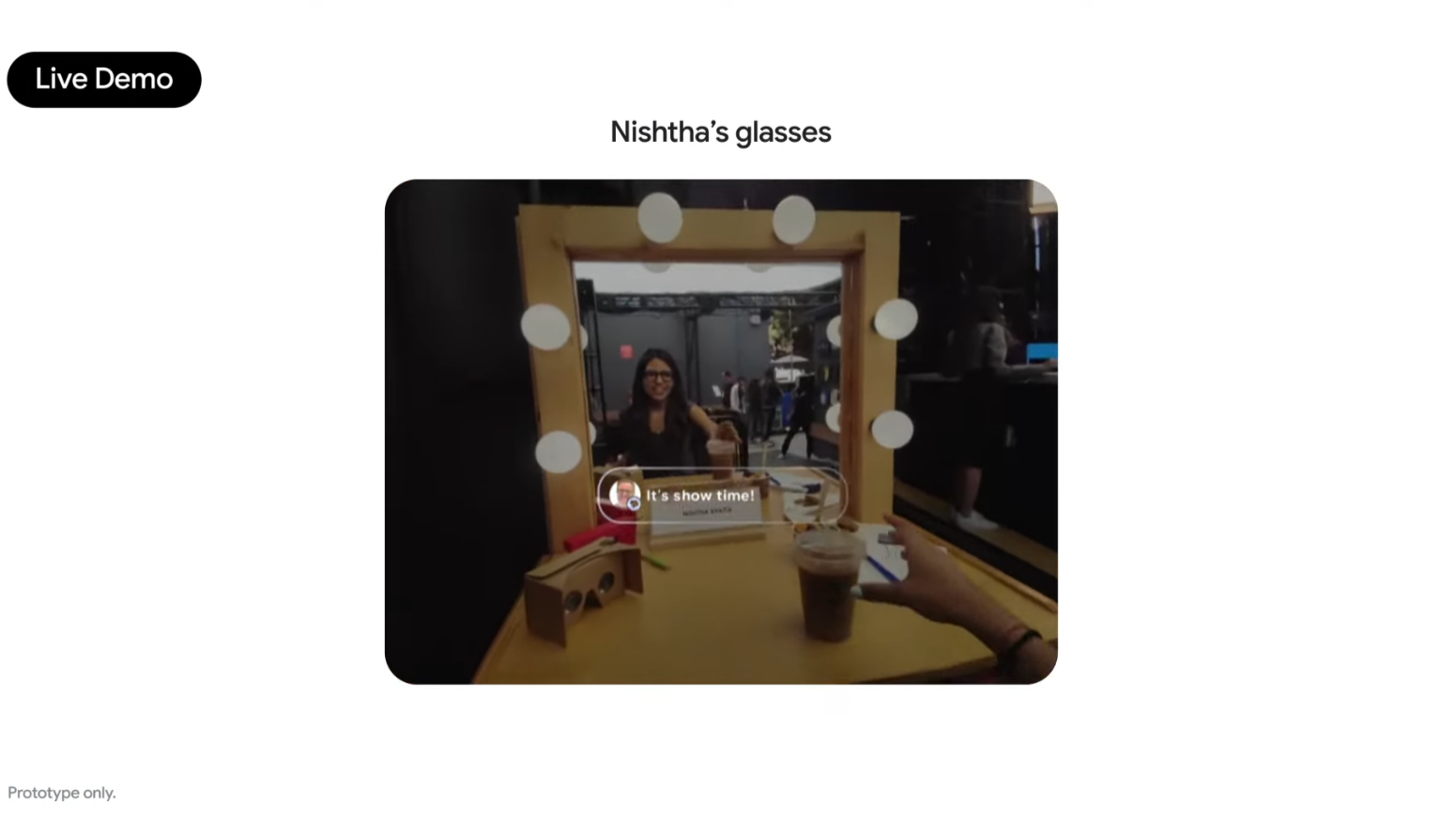

Shahram Izadi just took the stage and is talking about Android XR. He's wearing what looks like the same pair of AR glasses he wore to TED 2025 last month. The demo showcased the Gemini-powered capabilities of the glasses, including how the glasses can overlay a UI onto the real world so you can get notifications, turn-by-turn directions, and even livestream what you're seeing to the world via your glasses.

Nishtha Bhatia is currently live demoing a livestream using these Android XR-powered glasses. During the demo, she asked Gemini if it remembered the name of the coffee shop on the mug while she was walking through the backstage area at I/O. It pulled up the name, plus a description of the shop, all while it was still livestreaming. Impressive!

Shahram confirmed he is wearing the same glasses and said they act as his personal teleprompter, something you can't tell at all while watching him on stage. Nishtha also took a photo from the glasses using a voice prompt, and Gemini took the photo and uploaded it to Google Photos.

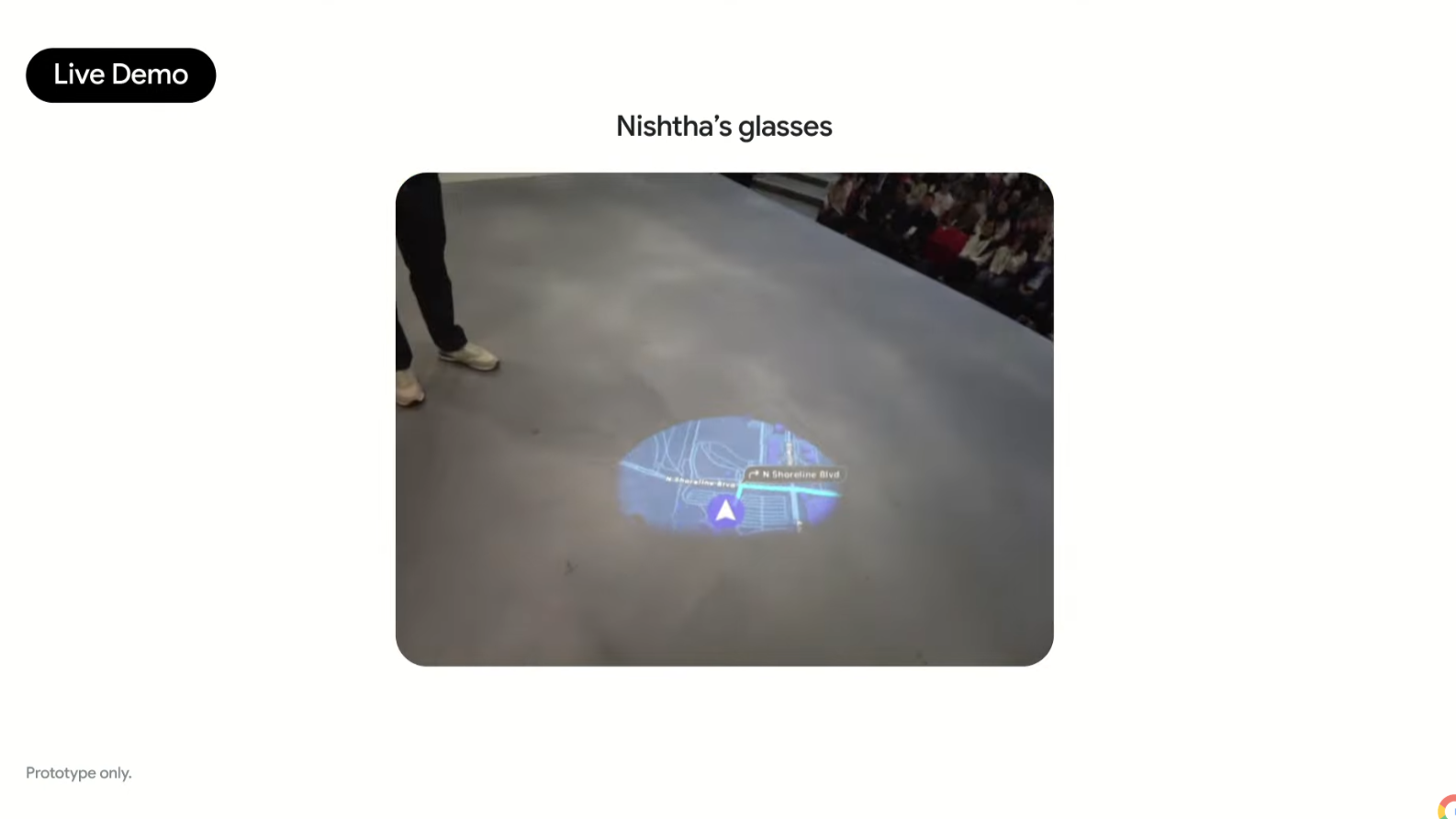

Nishtha also showcased what turn-by-turn directions look like when viewing them through the Android XR glasses. They look a whole lot like a Star Wars-style hologram with real-time movement and direction changes that should make it safer to navigate while walking around (instead of running into something while looking down at your phone).

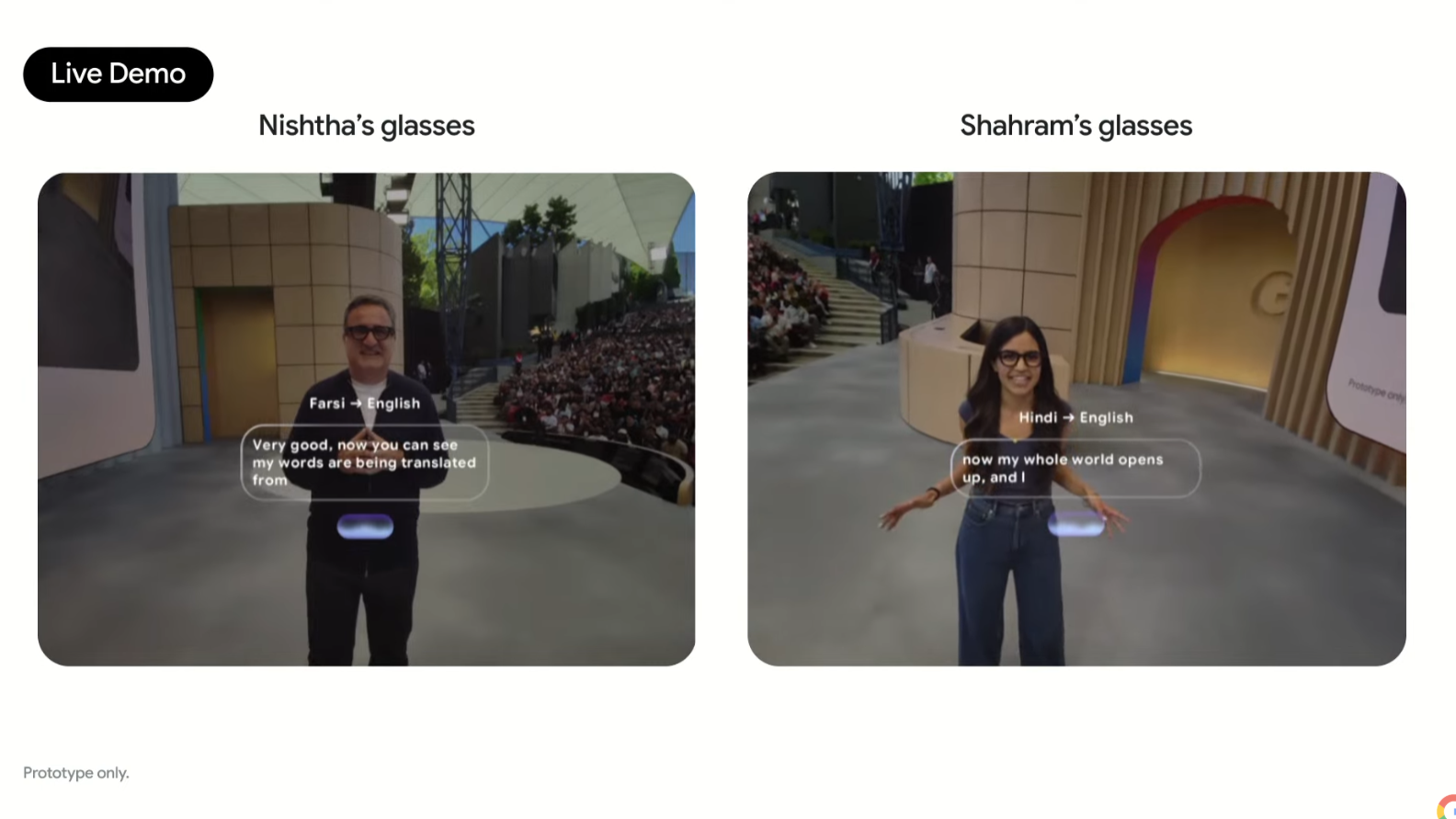

Nishtha and Shahram attempted a live translation demo while looking at each other through the glasses. As expected, Wi-Fi was sketchy and the noise was probably a bit too much to attempt this, but it was cool seeing how the UI looks and how live translation with a screen on smart glasses will work when these glasses debut sometime in the next year.

Xreal announced that it will be the first to bring Android XR smart glasses to market, likely launching later this year or in early 2026. Android XR was built for headsets first but is coming to smart glasses, as Google just showed off. Xreal's Project Aura glasses will be the first ones released and are designed to be tethered to your smartphone to keep them light and fast.

The glasses that Shahram and Nishtha were wearing are likely ones designed by Samsung and Google, but eyewear brands like Gentle Monster and Warby Parker will design Android XR glasses starting next year. It sounds like we're going to have a serious smart glasses war on our hands between Google and Meta!

And that's a wrap for Google I/O 2025's live keynote! Sundar finished up by recapping some of the most important announcements, including Android XR, tons of Gemini updates, Veo 3, Imagen 4, Google's new AI subscription models, AI-powered search results and shopping, and plenty more.

We're going to head off to the demo areas to see what Google's got cooking all around the Shoreline Amphitheatre, so keep your eyes peeled here for all the info as we find it, live!