How pixel binning works on smartphone cameras

If you've been paying attention to mobile photography trends and specs lately, you've probably come across the term "pixel binning" somewhere along the way. If you're confused as to what it is or what it does for your photos, here's what you need to know.

Why pixel size matters

Image sensors on smartphones are limited by the space manufacturers and engineers have to work with. Unlike DSLR and mirrorless cameras that use considerably larger sensors, phones have to be creative in maximizing available space to try to achieve similar results.

Pixels are the dots that make up a digital image, but the term also covers the elements on an image sensor that capture light. Technically speaking, the proper term for those light-recording cavities is "photosites." Still, industry and marketing lingo tends to sway toward "pixel" to describe these elements, so we'll go with that.

There is a caveat that comes with all this, and that's the size of each individual pixel. The more pixels crammed into an image sensor, the smaller each one tends to be. That may theoretically help capture more detail, but it may also adversely affect how well each pixel captures light. Pixel size is measured in microns, and the pixels in phone cameras are usually a mere fraction of the size of those in a good DSLR or mirrorless camera.

It's a conundrum phone makers have been facing for years. How do you squeeze in a larger sensor, pack in more pixels, and yet still make them large enough to capture more light? It's not always practical to make the phone thicker or wider to accommodate the physical dimensions of a larger sensor. Nor is it realistic to make lenses larger, or include a mechanical aperture that covers a wide range of f/stops. This is where pixel binning comes in to try and close the gap.

How pixel binning works

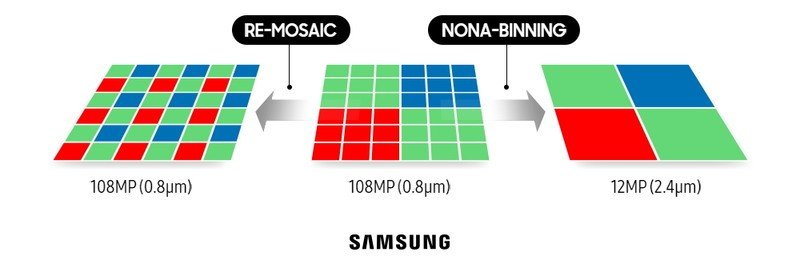

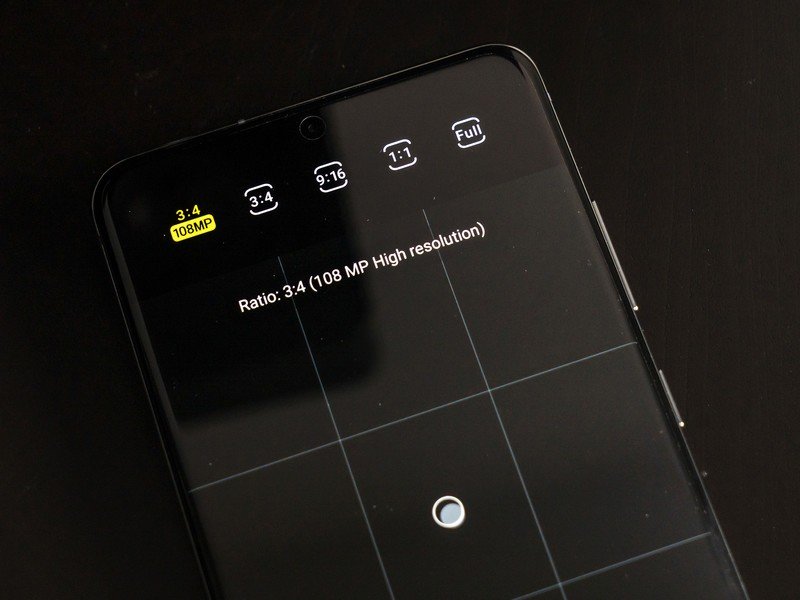

There's a lot that goes into this, and that's why it's better to look at it from a slightly non-technical perspective. Let's take the Samsung Galaxy S20 Ultra as an example. It has a 108-megapixel image sensor, though the full resolution only works with the standard lens in the regular photo mode.

With a 9:1 ratio, binning combines every nine neighboring pixels into one, which knocks the 108 megapixels down to a 12-megapixel image. Each resulting pixel is effectively much bigger and therefore better able to capture light. It's the primary reason why that phone's other modes, particularly Night and Pro, shoot at 12 megapixels rather than the full 108.
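To make the 9:1 arithmetic concrete, here is a minimal Python sketch of the idea. It is an illustration only, not Samsung's actual pipeline: the `bin_pixels` and `binned_megapixels` helpers are hypothetical names, the "sensor" is a toy grid of brightness readings, and it ignores the color filter that sits over a real sensor.

```python
def bin_pixels(sensor, factor=3):
    """Sum each factor x factor block of readings into one output pixel.

    `sensor` is a toy 2D list of brightness values (an assumption for
    illustration; a real sensor also has a color filter to account for).
    """
    rows, cols = len(sensor), len(sensor[0])
    if rows % factor or cols % factor:
        raise ValueError("sensor dimensions must be multiples of the bin factor")
    binned = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            # Combine the light collected by all pixels in this block.
            block_sum = sum(
                sensor[r + dr][c + dc]
                for dr in range(factor)
                for dc in range(factor)
            )
            out_row.append(block_sum)
        binned.append(out_row)
    return binned


def binned_megapixels(full_megapixels, factor=3):
    """Resolution left after merging factor x factor pixels into one."""
    return full_megapixels / (factor * factor)


# A toy 6x6 sensor where every pixel recorded one unit of light.
sensor = [[1] * 6 for _ in range(6)]
binned = bin_pixels(sensor)
print(len(binned), len(binned[0]))  # 2 2
print(binned[0][0])                 # 9
print(binned_megapixels(108))       # 12.0
```

The output grid has one-ninth as many pixels, but each one holds nine times the light, which is the trade the 108-to-12 megapixel figure describes.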

All of this creates a trade-off that manufacturers conveniently don't mention when marketing their phones. While binning gives you two shooting options from the same sensor, you can only use one at a time, so you get either the full resolution or the larger effective pixels, not both. For example, when you shoot at a resolution as high as 108 megapixels, you're capturing a lot of detail in each frame. So much so, in fact, that you can crop in a lot more than you ever could with a 12-megapixel shot. The catch is that you need to shoot at full resolution in optimal conditions where light is bright (think daylight or really well-lit indoor environments), or else your photos may turn out dark and dull. Samsung knows this, and that's why it keeps a leash on the full resolution.

But Samsung isn't the only brand using pixel binning. The practice goes back years. The HTC One comes to mind: in 2013, the company used pixel binning on a 16-megapixel image sensor and branded the resulting 4-megapixel shots as "UltraPixels." Huawei, LG, OnePlus and Xiaomi have all used it with different models, and still do now.

Benefits and alternatives to pixel binning

That's not to say there isn't some noble intent in developing pixel binning, because it's not entirely about capturing more light. It's also about reducing noise and increasing dynamic range to make low-light images look better. Ever notice that whenever you shoot in low light using the main photo mode on any phone, the highlights are often blown out? The camera cranks up the ISO and slows the shutter speed, leading to a noisy shot devoid of any real beauty.
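The noise benefit can be shown with a quick simulation. This is a rough sketch under simplifying assumptions: every pixel sees the same true brightness, and noise is independent and Gaussian, which real sensors only approximate. The `simulate_noise` function and its parameters are made up for illustration.

```python
import random
import statistics


def simulate_noise(true_signal=100.0, noise_sigma=10.0, group=9,
                   trials=5000, seed=0):
    """Compare the noise of one pixel against the average of a binned group.

    Assumes independent Gaussian noise per pixel (a simplification).
    Returns (single_pixel_noise, binned_noise) as standard deviations.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    single = []
    binned = []
    for _ in range(trials):
        readings = [rng.gauss(true_signal, noise_sigma) for _ in range(group)]
        single.append(readings[0])            # one small pixel on its own
        binned.append(sum(readings) / group)  # nine pixels averaged together
    return statistics.stdev(single), statistics.stdev(binned)


single_noise, binned_noise = simulate_noise()
print(f"single-pixel noise: {single_noise:.2f}")
print(f"binned noise:       {binned_noise:.2f}")
print(f"improvement:        {single_noise / binned_noise:.2f}x")
```

Under these assumptions, averaging nine pixels should cut the noise by roughly a factor of three (the square root of nine), so the improvement printed should land close to 3x.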

Phone makers are limited by physics and physical constraints, so getting more out of the sensor is one of the only ways to shoot better in low light, which is still a major challenge for any smartphone. With larger sensors, the idea would be that you could shoot at full resolution during the day and binned resolution in low light. It's simple when you put it that way, but every brand has done a poor job outlining those parameters because bigger numbers stand out in marketing materials.

You may have noticed that Google, one of the best in mobile photography, has yet to use pixel binning with any of its phones. Instead, its engineers have leaned on their own proprietary computational software to process and render images. That includes burst shooting and bracketing in rapid succession to produce the results its Night Sight mode can provide. The sensor pulls in the same light regardless of the time of day, but the AI and software involved differ widely depending on the mode you shoot with.

Apple also hasn't gone the binning route, choosing to use its own algorithms to help improve its otherwise shoddy night photography over the years. Even Huawei, which uses a 4:1 ratio with the P40 Pro's 50-megapixel main camera, uses burst and HDR bracketing when shooting in its own Night mode. The combination works well, more often than not, and is truly impressive by mobile standards.
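For a sense of what burst shooting buys you, here is a deliberately simple Python sketch that averages several frames of the same scene. It is not Google's, Apple's or Huawei's actual processing, which is proprietary and also has to align frames, cope with motion and merge different exposures. The `stack_frames` helper and the tiny 2x2 "frames" are hypothetical.

```python
def stack_frames(frames):
    """Average a burst of same-sized frames pixel by pixel.

    `frames` is a list of 2D lists of brightness values. This toy version
    assumes the frames are already perfectly aligned.
    """
    if not frames:
        raise ValueError("need at least one frame")
    rows, cols = len(frames[0]), len(frames[0][0])
    count = len(frames)
    return [
        [sum(frame[r][c] for frame in frames) / count for c in range(cols)]
        for r in range(rows)
    ]


# Three noisy captures of the same 2x2 scene.
burst = [
    [[10, 20], [30, 40]],
    [[12, 18], [33, 37]],
    [[8, 22], [27, 43]],
]
print(stack_frames(burst))  # [[10.0, 20.0], [30.0, 40.0]]
```

The random wobble in each frame averages out across the burst, which is the same basic effect binning gets from combining neighboring pixels, only spread over time instead of space.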

Knowing the limits

That's why it's important to temper expectations and not view pixel binning as a saving grace. Zoom in on any 12-megapixel photo, particularly with the sensor and lens sizes associated with phones, and you're likely to see a lack of detail. That's normal under the circumstances, but the point is not to crop a low-light shot. It's to actually get it to look good.

Pixel binning alone is unlikely to be the answer. More than likely, it will be part of a series of complementary technologies and advancements that help deliver better photos in tough conditions. Binning has to show significantly better results when compared to phones that don't use it at all in order to stake a claim as being truly groundbreaking. We're not there yet, but perhaps we will be in time if the breakthrough comes from more than just putting pixels together.

Ted Kritsonis loves taking photos when the opportunity arises, be it on a camera or smartphone. Beyond sports and world history, you can find him tinkering with gadgets or enjoying a cigar. Oftentimes, that will be with a pair of headphones or earbuds playing tunes. When he's not testing something, he's working on the next episode of his podcast, Tednologic.