Google's latest AI ethics controversy is a product of its own hubris — even if it enforced the rules

Recently, Dr. Timnit Gebru, recognized as one of the best ethical AI researchers Google had ever hired, parted ways with the company. It wasn't a very cordial breakup, either.

It all stems from a paper Gebru intended to present at a computer science conference in March 2021 about ethics in AI, the kind of technology Android uses inside the best phones every day. Google claims it requires 14 working days to approve any writing or presentations of this type if an employee identifies themselves as working for Google. This is so the company has a chance to make sure all the data about it is correct and current. That isn't a bad thing, and most companies have similar policies surrounding research papers.

Where things differ is how each side portrays what happened next.

Gebru says that there really isn't any hard and fast policy about research papers or presentation reviews, and Google has always been flexible in this particular area. After the paper was reviewed, Google then pushed back about some of the details that didn't portray it in the best light. The company then fired her over the incident, according to her version of the events. Google Walkout, a group of ex-employees that keeps tabs on Google's missteps, has published a deep look at Gebru's side of the story.

There are two sides to every story. Both make Google look bad.

Jeff Dean, head of Google's AI division, has been tasked with drafting Google's side of the story, even though he isn't the person who let Gebru go. According to Dean, once Gebru was told the presentation needed corrections and inclusion of Google's plans to change its AI program, she presented a list of demands to the company that would need to be met before she returned to work. Google was unable to meet those demands and Gebru's resignation was accepted.

Both sides have declined to offer public comment past these online posts, but Google CEO Sundar Pichai has sent an internal memo to the entire company apologizing for how the company handled itself. From Pichai's memo:

First - we need to assess the circumstances that led up to Dr. Gebru's departure, examining where we could have improved and led a more respectful process. We will begin a review of what happened to identify all the points where we can learn -- considering everything from de-escalation strategies to new processes we can put in place. Jeff and I have spoken and are fully committed to doing this. One of the best aspects of Google's engineering culture is our sincere desire to understand where things go wrong and how we can improve.

Second - we need to accept responsibility for the fact that a prominent Black, female leader with immense talent left Google unhappily. This loss has had a ripple effect through some of our least represented communities, who saw themselves and some of their experiences reflected in Dr. Gebru's. It was also keenly felt because Dr. Gebru is an expert in an important area of AI Ethics that we must continue to make progress on -- progress that depends on our ability to ask ourselves challenging questions.

It's impossible to know exactly what happened unless you were involved in the process, because both sides of this controversy offer a version of events that makes sense on its own, even though the two tellings are very different. Pichai says finding out what happened and why is a top priority so he can rectify the issues, and that's great to see: the CEO is doing the right thing this time and we wish him luck. But really, that makes little difference when it comes to the bigger issues of Google's own ethical practices: this happened, and it shouldn't have.

Dr. Gebru's list of demands looks like a list of how Google could be better.

Google has a right to consider a list of demands as a resignation letter. But let's have a look at those demands for a minute. Gebru's conditions were 1) finding out who actively called for the retraction of her paper, 2) having a set of meetings with Google's Ethical AI team about the issues, and 3) finding out exactly what Google considers acceptable research. If your company bothers to even have an Ethical AI division, it would seem that these "demands" are beneficial to everyone involved in it.

Dean also points out that Gebru's paper omitted details about how Google has plans to change how it approaches AI, and felt it was fair to want those details included. This is because Google knows it doesn't look so great when it comes to the ethical considerations of what are known as large language models. It made excellent progress training one of the most complex models but hasn't helped it evolve.

Large language models are trained using an absurdly large amount of text. This text is collected from the internet and can be filled with racist, sexist, and all manner of other offensive and abusive language that has no place in a model designed to interact with customers. The problem is that language evolves and any AI language model would need to be constantly retrained to keep up with the times.

This is where Google hasn't been diligent and its large language models need more training to include some things, ignore other things, and better represent groups of people who traditionally have little access to the internet. In short, Google's AI was trained using text written mostly by people who look and act the same. That's a big part of what ethical AI is about — making sure everyone is equally represented and that models evolve with changing times.

"AI-generated language will be homogenized, reflecting the practices of the richest countries and communities," warns the MIT Technology Review.

Of course, Google would rather have glowing words about its proposed changes to make the AI that powers things like online search more ethical. But it was willing to let one of the best at doing just that exit the company because of a paper that focused on the here-and-now instead of talking about the future. It sounds like the company's ethics also need some adjustment. But it's also easy to understand why Google is so worried about appearances: it's under a lot more scrutiny than other companies that are building a foundation around AI.

When you think about tech companies that use AI and have a less than stellar track record when it comes to how customers are treated, you probably have three names in mind: Amazon, Facebook, and Google. These companies are in your face because each provides consumer services that we touch and interact with every day. That means they should be subject to very intense scrutiny, especially when it comes to new technology like artificial intelligence.

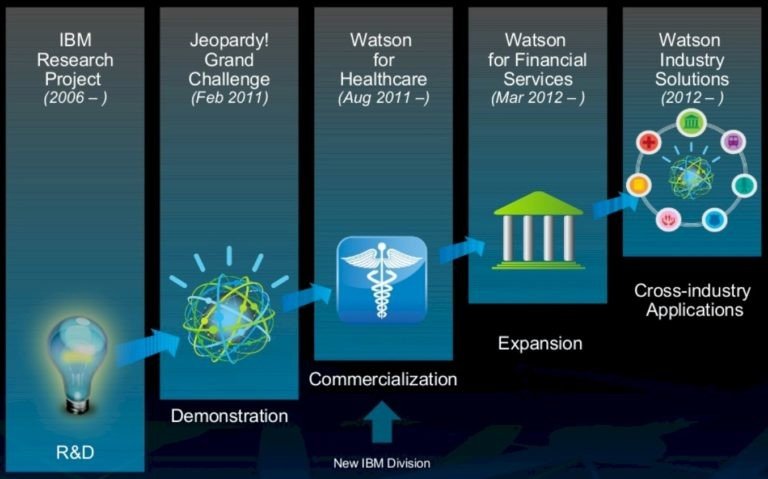

But you might also know that other tech companies like Microsoft, NVIDIA, and IBM (there are plenty more, too) are also deeply involved with AI yet under much less scrutiny. It was just a few years ago that Microsoft allowed an AI model to be trained on antisemitic ideas. That's completely unacceptable, but it didn't earn Microsoft the same level of scrutiny that Google has.

We should want to see all tech companies under intense scrutiny.

I'm not saying Google is being treated unfairly here. I'm saying that all companies building out AI models that affect our lives in any way should be under a microscope for every single thing they do. You care about Google's AI division because it powers your searches and your inbox. Facebook has a really bad track record of its AI models amplifying untrue posts. Amazon uses AI to keep tabs on what its competition is doing and how it can undercut their businesses.

We see these things, but we don't see how IBM uses AI for financial services, healthcare services, or customer support applications. Surely your medical care and your bank account are as important as your Facebook timeline or your Gmail inbox.

These are real issues that shouldn't be pushed aside. In fact, we need more people like Dr. Gebru overseeing how work in AI is done with our best interests in mind. But in the here and now, it certainly looks like Google once again faces controversy over the handling of an employee who didn't toe the company line, and it's a really bad look for the company.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.