What is Google Duplex?

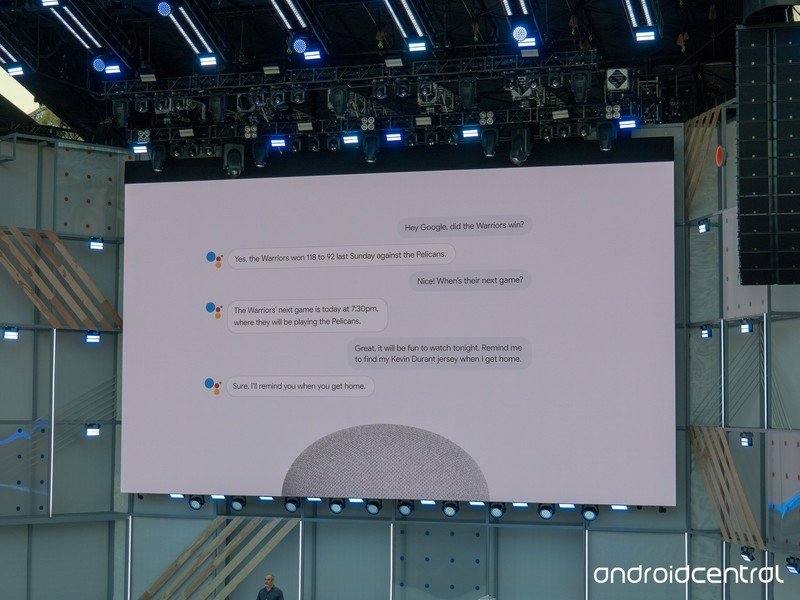

At Google I/O 2018 we saw a demo of Google's latest cool new thing, Duplex. The 60-foot screen onstage showed what looked just like your Google Assistant app, with a line-by-line playback of Assistant making a phone call to a hairdresser and setting up an appointment, complete with the pauses, the ummms and ahhhs, and the rest of the idiosyncrasies that accompany human speech. The person taking the appointment didn't seem to know they were talking to a computer because it didn't sound like a computer. Not even a little bit.

That kind of demo looks amazing (and maybe a little creepy) but what about the details? What is Duplex, exactly? How does it even work? We all have questions when we see something this different and finding answers spread across the internet is a pain. Let's go over what we know so far about Google Duplex.

What is Google Duplex?

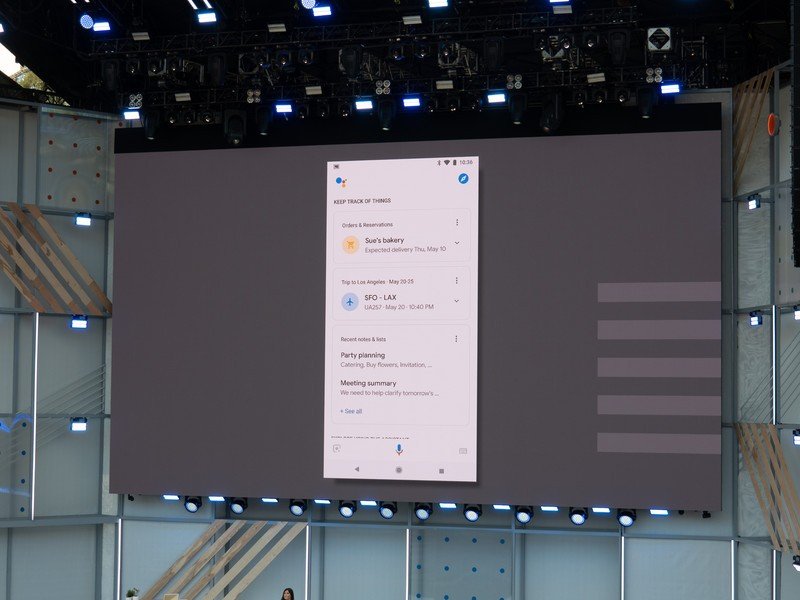

It's a new tool from Google that aims to use artificial intelligence (AI) to "accomplish real-world tasks over the phone," according to Google's AI researchers and developers. For now, that means very specific tasks like making appointments, but the tech is being developed with an eye on expansion into other areas. Spending billions to create a cool way to make dinner reservations sounds like something Google would do, but it isn't a great use of time or money.

Duplex is also more than what we saw in the demo, and if it ever leaves the lab there will be a lot more to it than what we see or hear on our end. Huge banks of data, and the computers needed to process them, are involved, and they aren't nearly as cool as the final result. But they are essential, because making a computer talk and think like a person in real time is hard.

Isn't this just like speech-to-text?

Nope. Not even close. And that's why it's a big deal.

Duplex is designed to change the way a computer "talks" on the phone.

The goal for Duplex is to make things sound natural and for Assistant to think on the fly to find an appointment time that works. If Joe says, "Yeah, about that... I don't have anything open until 10, is that OK?" Assistant needs to understand what Joe is saying, figure out what that means, and decide whether what Joe is offering will work for you. If you're busy across town at 10 and it will take 40 minutes to drive to Joe's Garage, Assistant needs to be able to figure that out and say 11:15 would be good.
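To make the Joe's Garage arithmetic concrete, here is a minimal sketch of the scheduling step in Python. It is not how Duplex does it; Google hasn't published that logic. The function name `earliest_feasible`, the 15-minute rounding, and the assumption that your 10 o'clock commitment lasts 30 minutes are all made up for illustration.

```python
from datetime import datetime, timedelta


def earliest_feasible(offered, busy_until, travel, step_minutes=15):
    """Return the first slot at or after `offered` that the user can reach.

    Hypothetical helper: assumes whole-minute times and that appointment
    slots fall on multiples of `step_minutes` past midnight.
    """
    # The user cannot arrive before their commitment ends plus the drive.
    earliest_arrival = busy_until + travel
    candidate = max(offered, earliest_arrival)

    # Round up to the next slot boundary using integer ceiling division.
    minutes = candidate.hour * 60 + candidate.minute
    rounded = -(-minutes // step_minutes) * step_minutes

    midnight = candidate.replace(hour=0, minute=0, second=0, microsecond=0)
    return midnight + timedelta(minutes=rounded)


# Joe offers 10:00; we assume the user is busy across town until 10:30
# and needs 40 minutes to drive over.
slot = earliest_feasible(
    offered=datetime(2018, 5, 8, 10, 0),
    busy_until=datetime(2018, 5, 8, 10, 30),
    travel=timedelta(minutes=40),
)
print(slot.strftime("%H:%M"))  # 11:15
```

The math is the easy part. The hard part, and the part Duplex is built for, is getting from Joe's rambling sentence to the tidy `offered` value in the first place.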

Equally important for Google is that Duplex answers and sounds like a person. Google has said it wanted the person on the phone not to know they were talking to a computer, though it eventually decided it would be best to inform them. When we talk to people, we talk faster and less formally (read: incoherent babbling from a computer's point of view) than when we're talking to Assistant on our phone or the computer at the DMV when we call in. Duplex needs to understand this and recreate it when replying.

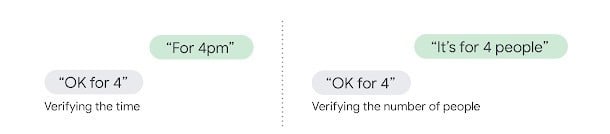

Finally, and most impressive, is that Duplex has to understand context. Friday, next Friday, and Friday after next week are all terms you and I understand. Duplex needs to understand them, too. If we talked the same way we type this wouldn't be an issue, but we umm you know don't because it sounds just sounds so stuffy yeah it's not like confusing though we have heard it all our lives and are used to it so no we don't have problems you know understanding it or nothing like that.

I'll administer first aid to my editor after typing that while you say it out loud, so you see what this means.
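For the curious, here is a rough Python sketch of what resolving those Friday phrases into calendar dates could look like. The interpretation is an assumption on our part: "Friday" means the first Friday after today, "next Friday" is a week after that, and "Friday after next week" is two weeks after that. Plenty of people would argue with that mapping, which is exactly why context is hard. The function names are hypothetical and have nothing to do with Google's code.

```python
from datetime import date, timedelta

FRIDAY = 4  # date.weekday() counts Monday as 0

# Assumed meaning of each phrase, in weeks after the upcoming Friday.
WEEK_OFFSETS = {
    "friday": 0,
    "next friday": 1,
    "friday after next week": 2,
}


def upcoming(weekday, today):
    """Return the first date strictly after `today` that falls on `weekday`."""
    days_ahead = (weekday - today.weekday() - 1) % 7 + 1
    return today + timedelta(days=days_ahead)


def resolve(phrase, today):
    """Turn one of the known Friday phrases into a concrete date."""
    first_friday = upcoming(FRIDAY, today)
    return first_friday + timedelta(weeks=WEEK_OFFSETS[phrase.lower()])


# May 8, 2018 was a Tuesday.
today = date(2018, 5, 8)
for phrase in WEEK_OFFSETS:
    print(phrase, "->", resolve(phrase, today))
```

A lookup table like this falls apart the moment someone says "the Friday after the long weekend," and that gap is what a trained model is supposed to cover.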

How does Duplex work?

From the user end, it's as simple as telling Assistant to do something. For now, as mentioned, that something is limited to making appointments, so we would say, "Hey Google, make me an appointment for an oil change at Joe's Garage for Tuesday morning," and (after it reminded us to say please) it would call up Joe's Garage and set things up, then add the appointment to our calendar.

Pretty nifty. But what happens off camera is even niftier.

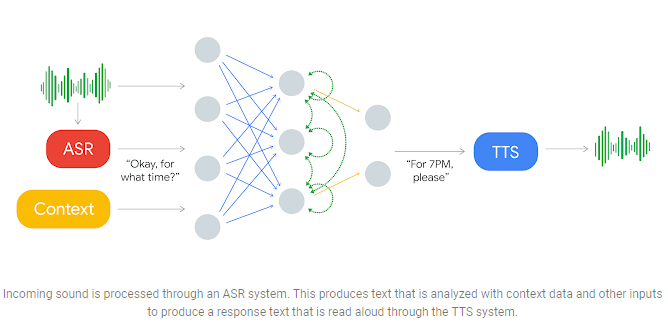

Duplex uses what's called a recurrent neural network, built using Google's TensorFlow Extended technology. Google trained the network on all those anonymized voicemails and Google Voice conversations you agreed to let it listen to if you opted in. The network combines speech recognition software with the ability to consider the history of the conversation and details like the time of day and location of both parties.
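If "recurrent neural network" sounds like jargon, the core idea fits in a few lines. What follows is a toy sketch in plain Python, not Duplex's network and not TensorFlow code: the weights are invented, the sizes are tiny, and nothing is trained. It only shows the one property that matters here, which is that the network carries a hidden state from step to step, so what it "thinks" now depends on what it heard earlier in the conversation.

```python
import math


def rnn_step(x, h, w_xh, w_hh, b):
    """One Elman-style update: new_h = tanh(W_xh * x + W_hh * h + b)."""
    new_h = []
    for i in range(len(h)):
        total = b[i]
        total += sum(w_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(w_hh[i][k] * h[k] for k in range(len(h)))
        new_h.append(math.tanh(total))
    return new_h


def run(sequence, w_xh, w_hh, b):
    """Feed a sequence of input vectors through the cell, one step at a time."""
    h = [0.0] * len(b)  # the hidden state starts empty
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)
    return h


# Made-up weights: one input feature, two hidden units.
W_XH = [[0.5], [-0.3]]
W_HH = [[0.8, 0.1], [0.0, 0.6]]
B = [0.0, 0.0]

# Both sequences end with the same input, but their histories differ.
quiet = run([[0.0], [0.0]], W_XH, W_HH, B)
spoke_first = run([[1.0], [0.0]], W_XH, W_HH, B)
print(quiet)
print(spoke_first)
```

The two printed states differ even though the final input is identical, because the second run remembers that something was said earlier. Scale that up by many orders of magnitude, train it on real conversations, and you have the general shape of what Google describes.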

Essentially, an entire network of high-powered computers is crunching data in the cloud and talking through Assistant on your phone or other product that has Assistant on board.

What about security and privacy?

It comes down to one simple thing: do you trust Google? On-device machine intelligence is a real thing, though it's constrained and relatively new. Google has developed ML Kit to help developers do more of this sort of thing on the device itself, but it's all a matter of computing power. It takes an incredible number of computations to make a hair appointment this way, and there's no way it could be done on your phone or Google Home.

You have to trust Google with your data to use its smart products and Duplex will be no different.

Google needs to tap into much of your personal data to do the special things Assistant can do right now, and Duplex doesn't change that. What's new here is that now there is another party involved who didn't explicitly give Google permission to listen to their conversation.

If/when Duplex becomes an actual consumer product for anyone to use, expect it to be criticized and challenged in courts. And it should be; letting Google decide what's best for our privacy is like the old adage of two foxes and a chicken deciding what's for dinner.

When will I have Duplex on my phone?

Nobody knows right now. It may never happen. Google gets excited when it can do this sort of fantastic thing and wants to share it with the world. That doesn't mean it will be successful or ever become a real product.

For now, Duplex is being tested in a closed and supervised environment. If all goes well, an initial experimental release to consumers to make restaurant reservations, schedule hair salon appointments, and get holiday hours over the phone will be coming later this year using Assistant on phones only.

Where can I learn more?

Google is surprisingly open about the tech it is using to create Duplex. You'll find relevant information at the following websites:

- Google AI blog (Google)

- DeepMind

- TensorFlow.org

- The Cornell University Library

- Google Research (Google)

- The Keyword (Google)

- ML Kit (Google)

Of course, we're also following Duplex closely, and you'll hear the latest developments right here as soon as they are available.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.