Google explains how Astrophotography on the Pixel 4 works

What you need to know

- Astrophotography is an automatic mode within Night Sight on the Pixel 3 and Pixel 4.

- Google goes into detail about how Night Sight and Astrophotography work.

- Night Sight debuted on the Pixel 3, and Astrophotography arrived with the Pixel 4 devices.

Pixel cameras are great for many reasons, and one of the big ones is their Night Sight feature. This year when Google released the Pixel 4 and 4 XL, it made that feature even better with the inclusion of the Astrophotography mode. In a new blog post, Google goes deep into the details of how it delivered a photo mode that was previously thought to be possible only with DSLRs.

If you're unfamiliar with Night Sight, in simple terms it makes photos taken in low light, or almost no light, look like they were taken with significantly more light. A long exposure gathers more light, but it also picks up motion blur. Google describes how it gets around that problem:

To overcome this, Night Sight splits the exposure into a sequence of multiple frames with shorter exposure times and correspondingly less motion blur. The frames are first aligned, compensating for both camera shake and in-scene motion, and then averaged, with careful treatment of cases where perfect alignment is not possible. While individual frames may be fairly grainy, the combined, averaged image looks much cleaner.
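To see why averaging helps, here is a minimal Python sketch of the merge step only. It assumes the frames are already perfectly aligned and that the noise is simple Gaussian noise, which is a simplification and not Google's actual pipeline. The scene, the noise level, and the function names `average_frames` and `rms_error` are made up for illustration.

```python
import random

def average_frames(frames):
    """Average equally sized frames (flat lists of pixel values) pixel by pixel."""
    n = len(frames)
    return [sum(values) / n for values in zip(*frames)]

def rms_error(frame, truth):
    """Root-mean-square difference between a frame and the noise-free scene."""
    return (sum((a - b) ** 2 for a, b in zip(frame, truth)) / len(truth)) ** 0.5

rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical noise-free scene with 2,000 pixels in the range 0..1.
truth = [rng.uniform(0.0, 1.0) for _ in range(2000)]

# Fifteen short exposures of the same scene, each with its own random noise.
frames = [[v + rng.gauss(0.0, 0.2) for v in truth] for _ in range(15)]

single_err = rms_error(frames[0], truth)
merged_err = rms_error(average_frames(frames), truth)
print(f"single frame RMS error:     {single_err:.3f}")
print(f"15-frame average RMS error: {merged_err:.3f}")
```

With independent noise, averaging N frames cuts the noise by roughly the square root of N, so 15 frames should come out a little under four times cleaner than any single frame.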

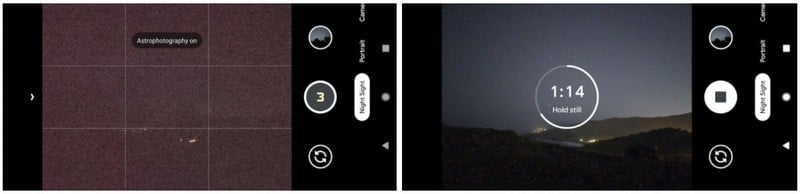

For the fantastic star shots that can be captured with a Pixel 3 or newer, Google added the new Astrophotography mode. While regular Night Sight photos can be taken handheld, your pics of the Milky Way will need to be taken with the phone on a tripod or propped against something steady.

Few are willing to wait more than four minutes for a picture, so we limited a single Night Sight image to at most 15 frames with up to 16 seconds per frame.
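The four-minute figure follows directly from those two limits. A quick Python check, using constant names that are ours and not Google's:

```python
# Limits quoted by Google for a single Night Sight image.
MAX_FRAMES = 15
MAX_SECONDS_PER_FRAME = 16

max_total_seconds = MAX_FRAMES * MAX_SECONDS_PER_FRAME
max_total_minutes = max_total_seconds / 60
print(f"{max_total_seconds} s = {max_total_minutes:g} min")  # 240 s = 4 min
```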

When taking these great shots of stars, it can be a bit of a challenge to frame the shot on the screen properly. This is because when you open your phone and switch to Night Sight, what you see on your screen is, well, black. Once you set your phone up somewhere it won't move for the next four minutes and press the shutter button, the countdown starts, and the view changes.

Something else Google takes into consideration when it comes to low-light photos is "warm pixels" and "hot pixels." Something called dark current, which sounds like something out of a Harry Potter book, causes CMOS image sensors to register small amounts of light even when there isn't any. The problem gets worse as the exposure time of a photo increases. When this happens, those "warm pixels" and "hot pixels" become visible in the photo as bright specks of light. Here's how Google tackles that issue:

Warm and hot pixels can be identified by comparing the values of neighboring pixels within the same frame and across the sequence of frames recorded for a photo, and looking for outliers. Once an outlier has been detected, it is concealed by replacing its value with the average of its neighbors. Since the original pixel value is discarded, there is a loss of image information, but in practice this does not noticeably affect image quality.
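The idea can be sketched in a few lines of Python. This is a simplified illustration, not Google's implementation: it only compares each pixel with its neighbors inside a single frame (Google also compares across the sequence of frames), and the `threshold` value and function names are assumptions chosen for the example.

```python
def neighbor_values(img, r, c):
    """Return the values of the up-to-8 pixels surrounding (r, c)."""
    rows, cols = len(img), len(img[0])
    return [
        img[rr][cc]
        for rr in range(max(0, r - 1), min(rows, r + 2))
        for cc in range(max(0, c - 1), min(cols, c + 2))
        if (rr, cc) != (r, c)
    ]

def conceal_hot_pixels(img, threshold=50):
    """Replace pixels far brighter than their neighbors with the neighbor average.

    `threshold` is an illustrative value, not one taken from Google's post.
    """
    out = [row[:] for row in img]  # copy so the input frame is left untouched
    for r in range(len(img)):
        for c in range(len(img[0])):
            neighbors = neighbor_values(img, r, c)
            mean = sum(neighbors) / len(neighbors)
            if img[r][c] - mean > threshold:  # bright outlier detected
                out[r][c] = mean
    return out

# A dark 5x5 frame with one stuck-bright pixel in the middle.
frame = [[10] * 5 for _ in range(5)]
frame[2][2] = 200

cleaned = conceal_hot_pixels(frame)
print(cleaned[2][2])  # 10.0
```

A single-frame check like this could also flag a real star, which is one reason comparing across the whole sequence of frames matters: a hot pixel stays in the same spot on the sensor in every exposure.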

Once the night photo is in its final processing, Google adds another trick to give you an amazing photo, and that's sky processing. Photos of very dark scenes can come out looking brighter than the scene really was, which can mislead the viewer about the time of day the picture was actually taken. Google has a solution: sky processing recognizes that the sky should appear darker in the photo than it otherwise would, so that portion is adjusted without changing the non-sky parts.

This effect is countered in Night Sight by selectively darkening the sky in photos of low-light scenes. To do this, we use machine learning to detect which regions of an image represent sky. An on-device convolutional neural network, trained on over 100,000 images that were manually labeled by tracing the outlines of sky regions, identifies each pixel in a photograph as "sky" or "not sky."

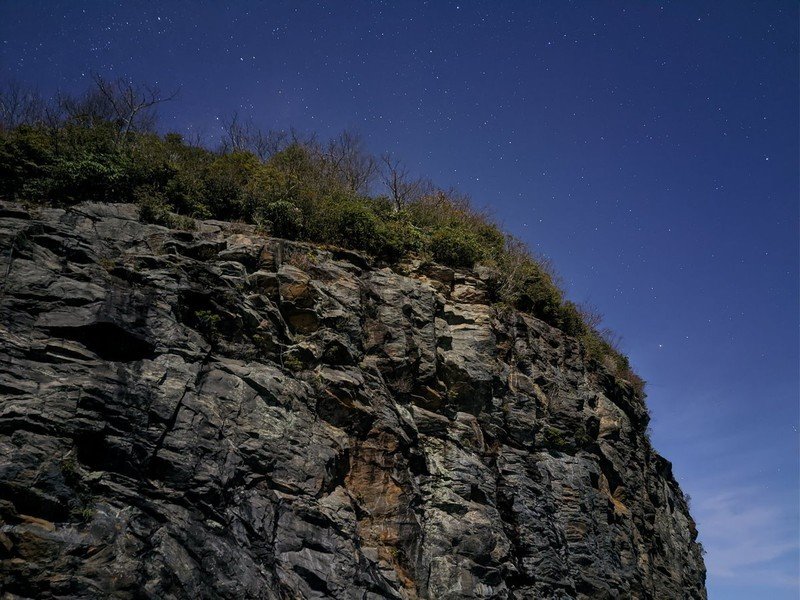

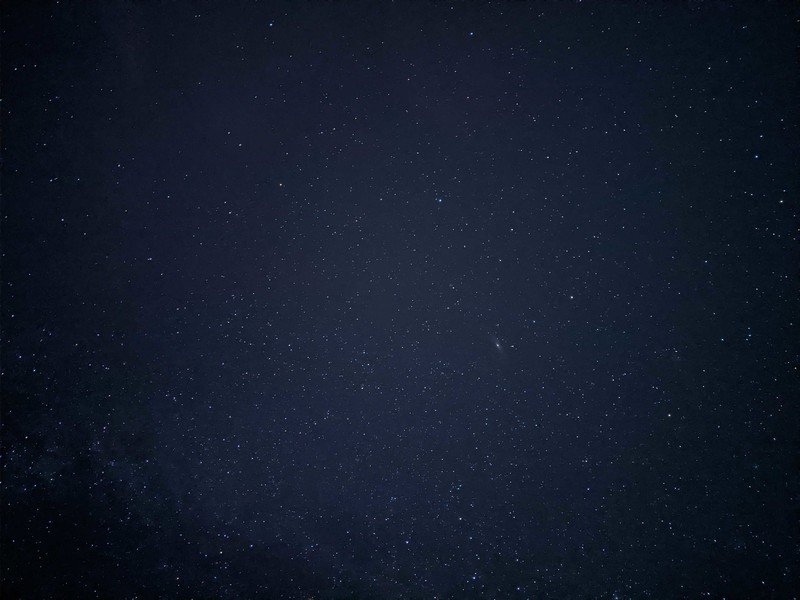
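Once every pixel has a "sky" or "not sky" label, the darkening step itself is simple. The Python sketch below assumes the mask already exists (the neural network that produces it is not shown), and the `factor` value and the `darken_sky` name are made up for illustration.

```python
def darken_sky(img, sky_mask, factor=0.5):
    """Scale down pixels labeled as sky; leave everything else unchanged.

    `sky_mask` holds True for "sky" and False for "not sky". In Night Sight the
    labels come from an on-device neural network; here the mask is written by hand.
    """
    return [
        [px * factor if is_sky else px for px, is_sky in zip(row, mask_row)]
        for row, mask_row in zip(img, sky_mask)
    ]

# Tiny 2x2 example: top row is sky, bottom row is foreground.
image = [[0.5, 0.5],
         [0.5, 0.5]]
mask = [[True, True],
        [False, False]]

result = darken_sky(image, mask)
print(result)  # [[0.25, 0.25], [0.5, 0.5]]
```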

Here are some photos that were taken by some of the writers here at Android Central:
