Zuckerberg says brain signal-reading wearable is 'kind of close' to product-ready

Meta's EMG wristband will interpret the signals your brain sends to your hand muscles, turning subtle finger gestures into controls for your VR headset or smart glasses.

What you need to know

- Meta is developing an electromyography (EMG) neural interface wristband that tracks gesture controls by reading the signals your brain sends to the muscles in your hand.

- Zuckerberg mentioned the neural band on a podcast when asked about a future AI application that would "blow their minds."

- He says that Meta is "kind of close to having something here that we're gonna have in a product in the next few years."

Appearing on the Morning Brew Daily podcast last Friday, Meta CEO Mark Zuckerberg noted that his company's futuristic neural interface band — which interprets your brain's signals to the muscles in your hand — is "close" to being "in a product in the next few years."

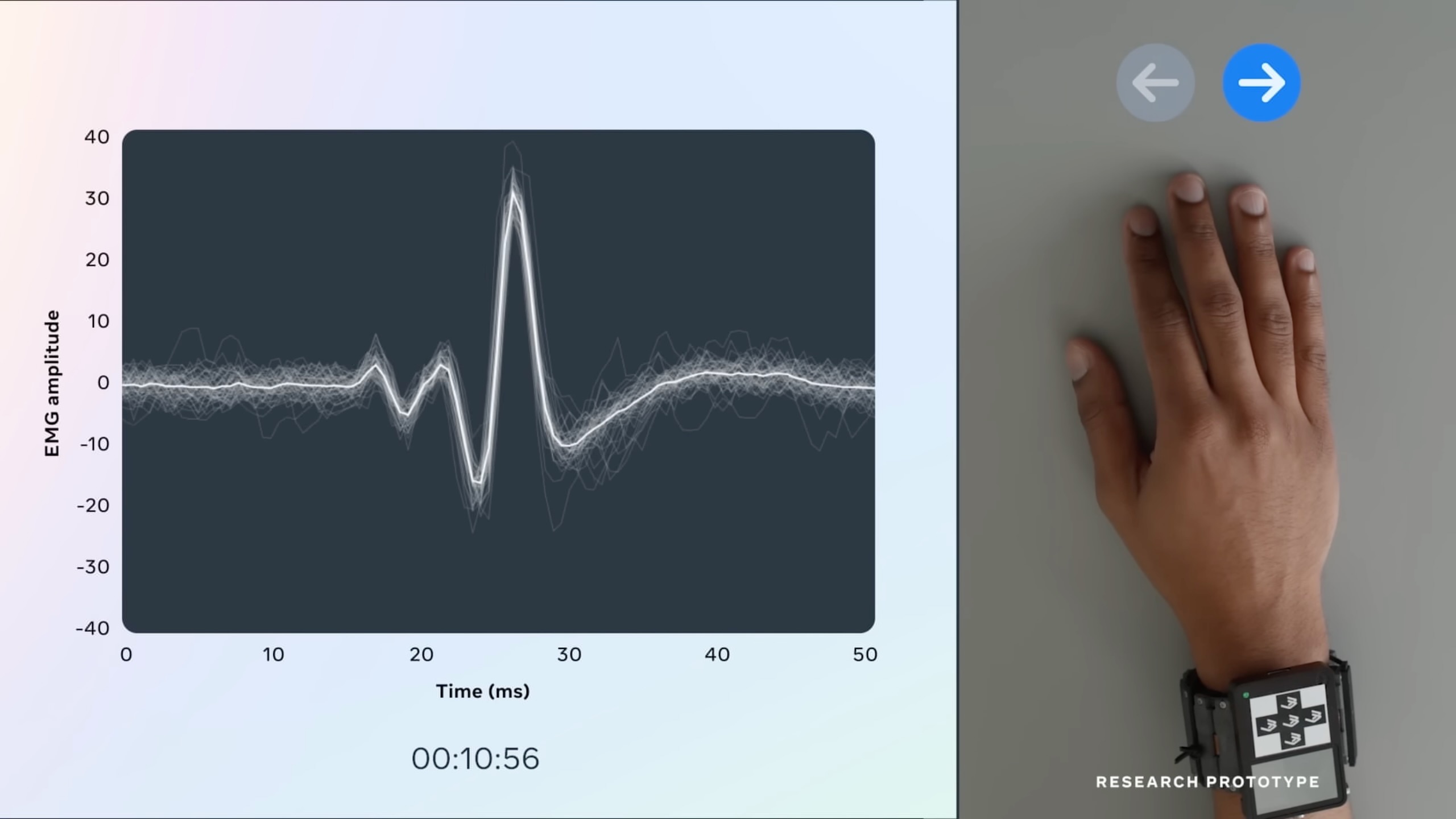

Asked by the host to exemplify the future "power of AI" for skeptics, Zuckerberg described his company's future wearable electromyography (EMG) band. Essentially, the band will interpret the "nervous system signals" sent from your brain to your hands and convert the data into more accurate gesture controls than a camera-based AI can interpret.

"In the future, you'll essentially be able to type and control something by thinking about how you want to move your hand, but it won't even be big motions, so I can just sit here, basically typing something to an AI," Zuckerberg explained.

At Meta Connect 2022, Meta first gave an in-depth look at the technology (we've timestamped the video above to the relevant portion). Essentially, the EMG concept addresses the fact that most people perform gestures inconsistently, which leads to false results for camera-based tracking. The signals the brain sends for a given gesture stay the same, however, so the "neural interface continuously gets better over time at understanding each person."

Eventually, Zuckerberg says, "You'll have this completely private and discreet interface" because even the tiniest of finger motions can deliver a strong enough brain signal for the EMG to pick it up and interpret it as a specific motion.

The challenge will be proving that this technology can go beyond simple left-right swipes, as shown in the video above, and progress to the point of actually typing and interacting in mid-air with just your hands, Minority Report-style.

The Meta CEO made headlines recently when he claimed the Meta Quest 3 is a "better product, period" than the Apple Vision Pro. During his Instagram video roast, he noted that future Quest headsets will bring back eye tracking to compete with Apple, but that eye and hand tracking alone aren't accurate enough without a physical keyboard, controller, or "neural interface."

If Meta truly intends to make this neural technology part of a product within the "next few years," that might coincide with the future Meta Quest 4, which could use the band's signal readings for more accurate hand tracking while gaming or typing on a virtual keyboard.

The band would also likely pair with future versions of the Meta Ray-Bans, since Zuckerberg's in-podcast example of using your fingers to type out a message to the Meta AI while outside would only make sense with smart glasses.

Meta is also allegedly working on an Android smartwatch with a built-in camera, LTE connectivity, and close syncing with Meta's apps. We assume that the neural interface band is an entirely different product, however.

Michael is Android Central's resident expert on wearables and fitness. Before joining Android Central, he freelanced for years at Techradar, Wareable, Windows Central, and Digital Trends. Channeling his love of running, he established himself as an expert on fitness watches, testing and reviewing models from Garmin, Fitbit, Samsung, Apple, COROS, Polar, Amazfit, Suunto, and more.