Smart glasses are becoming useful in a way I didn't expect

It's nearly time to start caring, I promise.

Let's be real. Most "smart glasses" you can buy today suck. At best, they're useful in a handful of niche situations and, even then, most of them don't do their job very well. Even the best smart glasses are pretty mid, but that tide is changing, and it's changing fast.

When I first got to try out a pair of Ray-Ban Stories, I had mixed feelings. They were the first pair of smart glasses that felt like they were designed with current technological barriers in mind, but they still didn't feel like enough.

In his weekly column, Android Central Senior Content Producer Nick Sutrich delves into all things VR, from new hardware to new games, upcoming technologies, and so much more.

Fast-forward a year, and Meta Ray-Ban Smart Glasses (that's the renamed, second-generation product) garnered hugely positive reviews from everyone who used them. That made me realize we're finally on the cusp of smart glasses greatness.

The smart glasses craze began over a decade ago with Google Glass, which was a product unbelievably ahead of its time in every way. From the form factor to the UX, Google got everything right except for the cultural timing. Now that we're all used to having cameras watching us at all times, these things are becoming more acceptable.

But what if we thought of smart glasses differently? What if smart glasses weren't just a way to make cool new social media posts or listen to music without earbuds? What if they could help the blind see or help deaf people hear in a new way? Some new smart glasses concepts do just that, and I think that's where the real demand begins.

Give me sight

The most intriguing pair of smart glasses I've seen yet isn't for social media or media consumption at all. It's a headset called OcuLenz that's designed to help folks with age-related macular degeneration (AMD) see better.

Right now, OcuLenz looks more like a VR headset than anything, but the company's rapid development roadmap shows it's already working on a version that's more like a pair of glasses.

I had a great sit-down chat with Michael Freeman, co-founder, CEO, and CTO of Ocutrx — the company behind OcuLenz — to discuss this fascinating breakthrough and how glasses like OcuLenz can change the lives of people who use them.

OcuLenz began as a piece of software, but the company had to make its own hardware to fit its vision (pun fully intended).

Freeman tells me that Ocutrx "started out to be just a software company, and we were going to use somebody else's hardware." Other vision-correction VR solutions like Neurolens use headsets like the Pico Neo 3 with custom software written for a specific purpose.

But Freeman says they couldn't find a headset that would do what they needed. "In dealing with macular degeneration, people have lost up to 20% of their central vision. If you've got [a headset with] 40 degrees field of view, that's not enough to do anything with. So we had to create our own."

Headsets like OcuLenz help people with vision issues see more normally again.

But let's go back to the basics first. AMD is a vision problem that causes people to slowly develop blind spots, normally in the center of their vision. It's the leading cause of vision loss and blindness for Americans age 65 and older, and it makes it incredibly difficult to read as you get older.

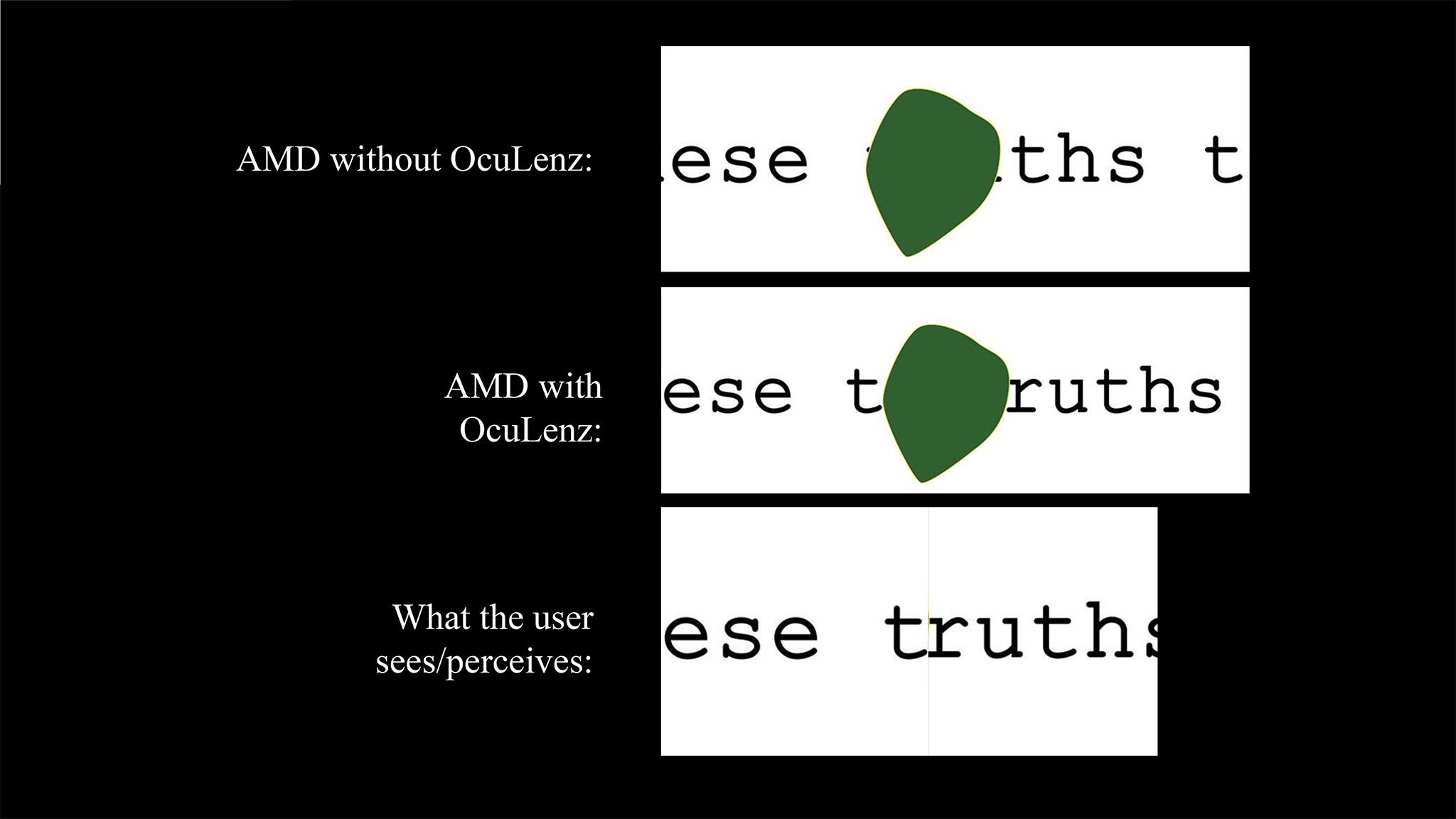

AMD begins with a blurry spot, which later evolves into a black spot that fully obstructs a portion of the person's vision. This spot stays in the same place in your vision because it's tied to the part of your retina that the disease affects. Because of that, the OcuLenz headset can correct for this visual anomaly and give users a view that looks more like what they remember from before AMD kicked in.

But it's particularly interesting how OcuLenz achieves this outcome. Freeman tells me that the human brain actually "shuts off" a portion of the vision once AMD gets really bad. I recreated an example Freeman showed me of this effect in the GIF below.

Imagine the piece of paper as your field of vision. With full-on AMD, your brain effectively cuts out the middle portion to remove the blind spot AMD created. What you're left with is the left and right portions of your eyesight, with the middle completely missing, but your brain doesn't "know" this. You just end up seeing differently.

OcuLenz uses an ingenious combination of cameras and optics to help recreate the entire image your brain used to see, effectively giving you back your full range of sight all over again.

The green blob in the example below, provided by Ocutrx, represents a patient's AMD blind spot and how it affects reading. The cameras on the OcuLenz see the words and intelligently move them around the blind spot at 60 frames per second (FPS), projecting them onto the lenses in front of your face.
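To get a feel for what "moving words around the blind spot" could mean, here's a minimal Python sketch. To be clear, this is not Ocutrx's actual method, which isn't described here. The function name, the circular blind spot, the two radii, and the simple radial push are all assumptions I've made purely for illustration.

```python
import math

def remap_around_blind_spot(x, y, cx, cy, blind_radius, outer_radius):
    """Hypothetical remap: move a point out of a circular blind spot.

    Points within outer_radius of the blind spot center (cx, cy) are
    squeezed into the ring between blind_radius and outer_radius.
    Points farther away are left where they are.
    """
    dx, dy = x - cx, y - cy
    r = math.hypot(dx, dy)
    if r >= outer_radius:
        return (x, y)  # outside the affected area: unchanged
    if r == 0:
        # The exact center has no direction, so push it right (arbitrary choice).
        return (cx + blind_radius, cy)
    # Linearly compress distances [0, outer_radius] into [blind_radius, outer_radius].
    new_r = blind_radius + r * (outer_radius - blind_radius) / outer_radius
    scale = new_r / r
    return (cx + dx * scale, cy + dy * scale)

# A point 5 units right of the center, with a blind spot of radius 10,
# ends up outside the blind spot but still to the right of the center.
print(remap_around_blind_spot(5, 0, 0, 0, 10, 30))
```

The idea is only that content falling inside the blind spot gets displaced into the still-working area around it, while the view farther out stays put. A real system would have to do this for every pixel of every camera frame, and per patient.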

The headset is able to do this because it's powered by the same Qualcomm Snapdragon XR2 Gen 2 found in the Meta Quest 3. The cameras up front sample the world at 60 FPS each, and the CPU is able to process the images and overlay the composite images onto the headset's lenses with only a 13-millisecond delay.
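To put that 13-millisecond figure in context, here's a quick back-of-the-envelope check in Python. It only uses the two numbers quoted above (60 FPS and 13 ms); the function name is my own, and I'm assuming the delay can be compared directly against the time between camera frames.

```python
def frame_interval_ms(fps):
    """Time between consecutive frames, in milliseconds."""
    return 1000 / fps

CAMERA_FPS = 60          # quoted camera sampling rate
PROCESSING_DELAY_MS = 13  # quoted processing delay

interval = frame_interval_ms(CAMERA_FPS)
print(f"Time between frames: {interval:.1f} ms")
print(f"Delay fits within one frame: {PROCESSING_DELAY_MS < interval}")
```

At 60 FPS, a new frame arrives roughly every 16.7 ms, so a 13 ms delay means the processed image is ready before the next camera frame shows up.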

Plus, OcuLenz's field of view is substantially wider than what headsets like the HoloLens 2 and Magic Leap 2 provide (60 degrees horizontally, 40 degrees vertically, and 72 degrees diagonally), giving users a much wider augmented view that feels more natural. It also doesn't use waveguide optics like the HoloLens, so the feed you see through the lenses is substantially clearer.
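If you're wondering how the three field-of-view numbers relate, here's a small Python sketch. The function names are mine, and both formulas are generic geometry rather than anything Ocutrx has published. The first treats the angles like the sides of a rectangle; the second assumes a flat (rectilinear) projection, which may or may not match the headset's optics.

```python
import math

def diagonal_fov_simple(h_deg, v_deg):
    """Diagonal from a plain Pythagorean sum of the two angles."""
    return math.hypot(h_deg, v_deg)

def diagonal_fov_rectilinear(h_deg, v_deg):
    """Diagonal assuming a flat image plane (rectilinear projection)."""
    th = math.tan(math.radians(h_deg) / 2)
    tv = math.tan(math.radians(v_deg) / 2)
    return 2 * math.degrees(math.atan(math.hypot(th, tv)))

print(round(diagonal_fov_simple(60, 40), 1))       # about 72.1
print(round(diagonal_fov_rectilinear(60, 40), 1))  # about 68.6
```

The quoted 72-degree diagonal lines up with the simple Pythagorean version, which is a common way for spec sheets to state diagonal field of view.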

Finally, a pair of smart glasses with a display that has an actual IPD adjustment mechanism!

On top of having the widest FoV of any product like it, OcuLenz is the only pair of smart glasses with an actual IPD adjustment mechanism. "Everyone else just has a one-size-fits-all solution. OcuLenz can be up to 12 degrees offset" to help people with narrower or wider eye spacing.

That's a huge deal for someone like me who has yet to find a pair of smart glasses with a display that doesn't give them headaches after a few minutes.

Practical smart glasses

OcuLenz is being tested with plans to fully launch in the first half of 2024. Hot on its heels is a new design featuring a new optical engine that's half the size. Ocutrx says this one is still considered "a headset" rather than a pair of smart glasses.

And here's where things got interesting. "Real" smart glasses have been delayed for years, so imagine my surprise when Freeman told me the company is already working on a proprietary optical engine that reduces the size by another 50%, giving Ocutrx the ability to deliver a Gen 3 OcuLenz that's more like a pair of smart glasses.

To fit in nicely with that, Meta and MediaTek recently announced a partnership that'll deliver the first silicon able to make consumer smart glasses a reality. OcuLenz is beyond impressive, and its future products are going to work wonders for folks with vision issues, but it feels like the Meta and MediaTek partnership is what we've been longing for in a pair of consumer smart glasses.

After the MediaTek Summit, Anshel Sag, Principal Analyst at Moor Insights & Strategy, told me, "While details are still murky, we know that Meta is specifically partnering on AR devices, which leads me to believe that we could see the next generation of Meta Ray Ban glasses powered by MediaTek rather than Qualcomm."

That's an incredibly exciting look at the rapid evolution of smart glasses and what we can expect from products like Meta Ray-Ban Smart Glasses in the future.

The first two generations of these glasses haven't featured a screen of any kind, but Mark Zuckerberg's recent demonstration of multimodal AI running on the second-generation glasses shows how we could still go for another few years without needing a display baked into the lenses.

AI assistants and vision-repairing glasses have me excited about the future of smart glasses as a viable product category.

It's demonstrations like this — and OcuLenz — that have me excited for the future of smart glasses in a way that I wasn't before. Up until now, the concept felt more like a hokey sci-fi idea than anything realistic or intriguing.

Now that I can see the details coming together into cohesive products that make a difference in the lives of users, I feel like I can get behind the idea of wearing a pair all day long. I surmise that most of the world will, too.