Google hid the future of AR in plain sight at I/O 2024

Holograms, teachable AI, and the melding of digital and physical worlds in a pair of glasses.

As I approached the demo booths for Project Starline at Google I/O 2024, I saw the poetic words written on each door: “Enter a portal into another world” and “Feel like you’re there even when you’re not.” Words like these are often associated with VR experiences, which have that magical “presence” factor that tricks your brain into thinking you’re somewhere you’re not. But Project Starline isn’t VR at all.

Instead, Starline is a sort of conferencing table with a magic portal that leads to another Starline booth somewhere in the world. You sit at the table as you might at any other desk or conference room table and talk to the person (or people) on the other end, but the trick is that Starline isn’t just a flat screen. It’s a light-field display that shows a 3D projection of the person on the other end, with a realism I simply wasn’t prepared for.

HP recently recognized Starline’s impressive nature and possibilities in this world of remote work and cross-continental business opportunities, but Starline represents more than just the future of connecting offices for better remote meetings. It goes hand-in-hand with other projects Google showed off at Google I/O 2024, like Project Astra, a new AI routine built on Gemini that can see the real world and talk to you about it as if it were another person looking at the same things.

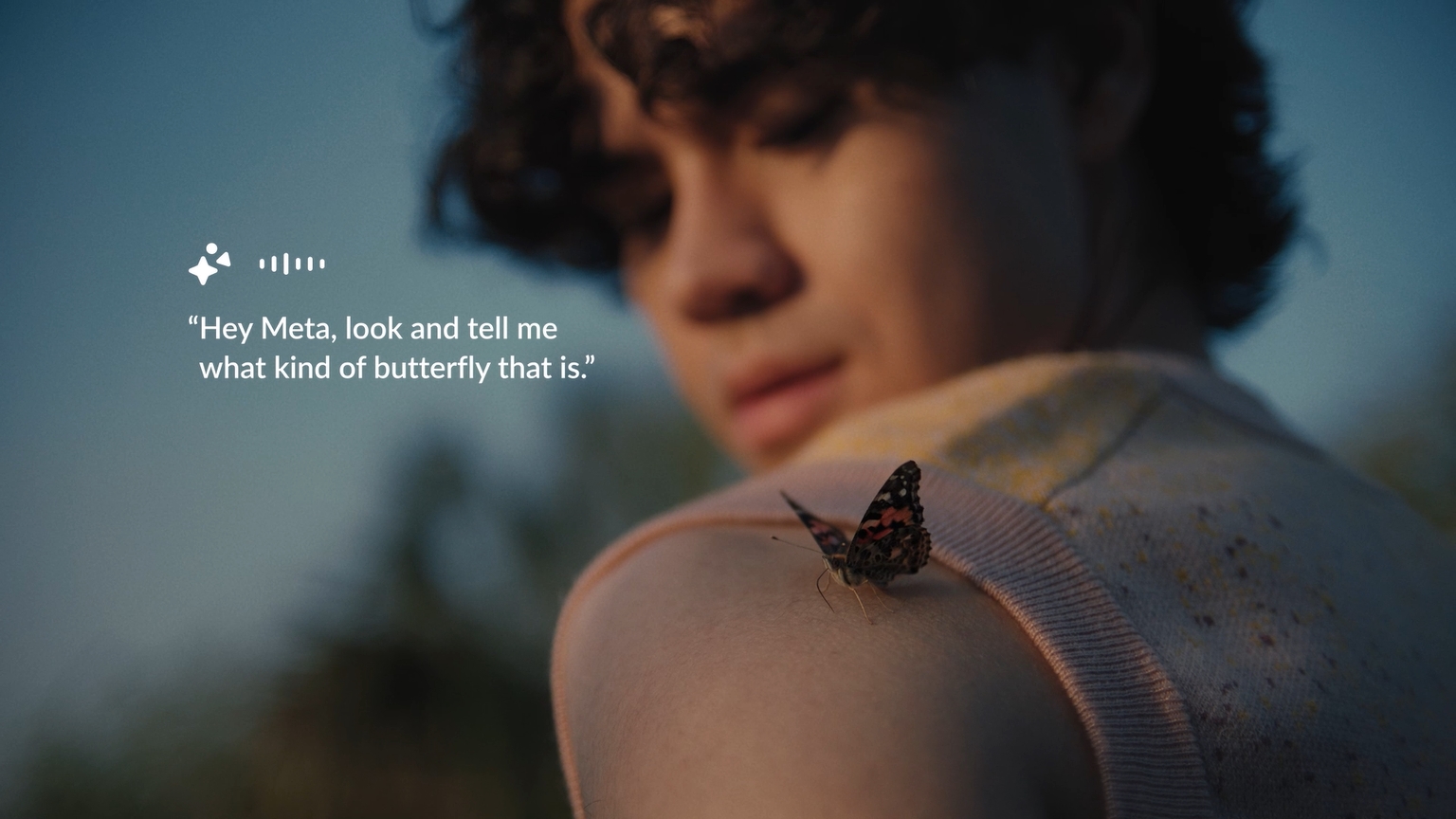

We’ve seen Meta launch this kind of technology already on its excellent Ray-Ban Meta smart glasses, but Google’s tech takes this a step further, not only by making things even more conversational but also by adding memory to the experience. No, I don’t mean RAM or storage. I mean the AI can remember what it has seen and recall facts and details as if it were a living being.

The intersection of AI and XR

To say Google is working on a lot of different projects right now is an understatement. The company talked about what felt like an endless supply of Gemini-related AI projects during its Google I/O 2024 keynote, but one project was purposefully glossed over to make sure we were paying attention.

We’re still not sure if this is the pair of Google AR glasses that were previously thought to be canceled, but Google certainly demoed a pair of AR glasses with multimodal Astra AI built in.

While I didn’t get to demo the glasses myself, I did get to check out Project Astra in person using a single camera and a large touchscreen TV.

The demo consisted of four different sections: storytelling, alliteration, Pictionary, and free form. During the storytelling portion, we were able to place stuffed animals within the line of sight of the camera, and the AI would then come up with a story about each animal on the fly. The stories were surprisingly convincing and, if nothing else, would make a fantastic children’s book.

Alliteration builds upon the storytelling by confining the AI to using the same letter or sound at the beginning of adjacent or closely connected words in its stories. In Pictionary, you use the touchscreen to draw something, and the AI has to guess what it is. This was another particularly impressive one, as there seemed to be no real limit on what you could draw and what the AI could guess.

Project Astra is not only able to identify what it sees on a camera but can also remember it for an indefinite period of time.

While all three of these modes were impressive and interesting in and of themselves, the free-form mode allows you to do anything with the AI, including recreating the other three modes with a simple voice command. Free-form mode particularly interested me because Astra could not only correctly identify what it saw through the camera but also remember it and quickly recall the information in a later conversation.

During the keynote, the most impressive example of Astra’s use was asking where the narrator’s glasses were in the room. Astra remembered the previous location of objects, even when those objects weren’t interacted with. In the case of the video, the person demoing the tech never interacted with the glasses until she asked Astra where they were last seen.

It’s this kind of technology that I can see making a real and meaningful difference, not only in the lives of people who need vision assistance (Google’s Guided Frame on Pixel phones and Meta’s multimodal AI on Ray-Ban Meta glasses already do this to some extent) but also through subtle, very real quality-of-life improvements for everyone who wears it.

Spatial memory is something once reserved for living things and it creates an entirely new paradigm in AI learning.

Being able to find your glasses or car keys by just asking an AI built into a pair of smart glasses is game-changing, and it will create an almost symbiotic relationship between the person and the AI itself.

There are limitations beyond battery life, though, and storage is one of them. Remembering where objects are, along with all the spatial data associated with that virtual memory, takes up a lot of storage space. Google reps told me there’s theoretically no limit on how long Astra can remember spaces and things, but the company will scale memory limits depending on the product using them.

The demo I used had a memory of only one minute, so by the time I moved on to the next scenario, it had already forgotten what we had previously taught it.

The future of communication

I recently interviewed Meta’s head of AR development, Caitlin Kalinowski, to discuss Meta’s upcoming AR glasses and how they’ll improve the experience versus current AR products. While she didn’t directly say that the glasses were using light-field displays, the description of how Meta's AR glasses overlay virtual objects on the real world sounded at least somewhat similar to what I experienced from Project Starline at Google I/O 2024.

Starline delivers an incredibly impressive, lifelike 3D projection of the person on the other end that moves and looks like that person is sitting across the table from you. I gave my presenter a high-five "through" the display during the demo, and my hand tingled in a way that said my brain was expecting a physical hand to hit mine. It was convincing from every angle, something that's possible thanks to Starline's use of six high-resolution cameras placed at various angles around the display's frame.

If Meta or Google can compress this experience into a pair of glasses, we'll have something truly reality-altering in a way we haven't seen before from technology.

As with anything AR or VR-related, it's impossible to capture imagery of what Project Starline felt like using traditional cameras and displays. You can see a picture — or even a moving image like you see above — of someone sitting at a Starline booth and recognize that they're talking to another person, but you don't truly understand what it feels like until you experience it in person with your own eyes.

If you've ever used a Nintendo 3DS, you'll have at least a passing idea of what this feels like. The display is wholly convincing and, unlike the 3DS, is high-resolution enough that you're not counting pixels or even thinking about the tech behind the experience at all.

Google representatives told me that while the technology behind Starline is still secretive, more will be revealed soon. For now, I was only told that Starline's light-field technology was "a new way to compute" that didn't involve traditional GPUs, rasterizers, or other similar methods of virtually rendering a scene.

Rather, the light-field tech you see in front of you is a clever amalgam of footage from the six cameras onboard, all stitched together in real-time and moving according to your personal perspective in front of it. Again, this is a bit like the revision of the 3DS with the front-facing camera that allowed you to shift your perspective a bit, just all grown up and running on supercharged hardware.

Perhaps more impressive is the technology's ability to create a 3D avatar complete with real-time backgrounds. Forget those terrible cutouts you see on a Zoom call; these were moving, authentic-looking backgrounds that seamlessly blended the person into them, even creating real-time shadows and other effects to make the result even more convincing.

Imagine being able to see and share experiences with a volumetric display, letting you actually feel like something is right in front of you even if it's thousands of miles away (or not even real at all).

For me, this kind of thing is a dream come true for the work-from-home lifestyle. If this technology were to be implemented on a pair of AR glasses like the ones Google or Meta are building, it would be easy to work from anywhere without losing that "professionalism" that some people feel only comes from working in an office. It would also make sharing things with distant relatives and friends a substantially more rewarding and memorable experience for everyone.

This, paired with a remember-all AI like Astra, would certainly help meld the physical and digital worlds like never before. While companies like Meta have often waxed poetic about ideas like the Metaverse in the past, I saw the genuine article — the future of augmented reality — coming to life at Google I/O this year.

It's one thing to dream up science fiction ideas that seem at least remotely possible, but it's another to see them come to life before your eyes. And that, for me, was what made Google I/O 2024 a truly memorable year.
