GDC 2022 provided a glimpse into the future of PS VR2 games

Eyes are the window to the soul, and VR devs are going to peek through yours to make their PS5 VR games more responsive

Sony may have been an official no-show at GDC 2022, but we know its developers were there behind the scenes in San Francisco, letting game devs try out the PS VR2. The Anacrusis developer Chet Faliszek tweeted that "the world just feels different" after trying the headset, courtesy of Shuhei Yoshida, former president of Sony's Worldwide Studios, and Greg Rice, head of PlayStation Creators.

> Had one of those VR moments today playing in the new PSVR2 hmd… You know where the world just feels different when you return? Sooooo good… thanks @yosp and @GregRicey for the demo and chat. (March 24, 2022)

While the media obviously wasn't privy to these backroom demos, I did get to attend an intriguing GDC panel: 'Building Next-Gen Games for PlayStation VR2 with Unity.' Led by Unity developers who presumably have PS VR2 dev kits of their own, the panel ran through how developing VR games for the PS VR2 differs from developing for current headsets like the Quest 2.

Sony had already announced that the PS VR2 would use eye tracking to support foveated rendering, which concentrates the highest graphical detail wherever you're looking. But Unity provided the first hard statistics on how much this improves performance: GPU frame times are up to 2.5x faster with foveated rendering alone and up to 3.6x faster with foveated rendering combined with eye tracking.
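
To make the idea concrete, here's a minimal Python sketch of how a renderer might choose a resolution for each region of the screen based on how far that region sits from the player's gaze. The tier thresholds, the `FOVEATION_TIERS` and `PERIPHERY_SCALE` values, and the function names are all hypothetical; this isn't Sony's or Unity's implementation, just the general principle.

```python
import math
from typing import Sequence

# Hypothetical foveation tiers: (max angle from gaze in degrees, resolution scale).
# These thresholds are illustrative assumptions, not Sony's or Unity's values.
FOVEATION_TIERS = [(5.0, 1.0), (15.0, 0.5), (30.0, 0.25)]
PERIPHERY_SCALE = 0.125  # assumed scale for anything beyond the last tier


def angle_from_gaze_deg(gaze: Sequence[float], direction: Sequence[float]) -> float:
    """Angle in degrees between the gaze vector and the direction to a screen region."""
    dot = sum(g * d for g, d in zip(gaze, direction))
    norms = math.hypot(*gaze) * math.hypot(*direction)
    # Clamp to [-1, 1] so floating-point drift can't push acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norms))))


def resolution_scale(gaze: Sequence[float], direction: Sequence[float]) -> float:
    """Fraction of full resolution to render a region at, based on its angle from the gaze."""
    angle = angle_from_gaze_deg(gaze, direction)
    for max_angle, scale in FOVEATION_TIERS:
        if angle <= max_angle:
            return scale
    return PERIPHERY_SCALE
```

With fixed foveated rendering, `gaze` would always be the straight-ahead direction `(0, 0, 1)`; with eye tracking it follows where the player is actually looking, which is presumably why combining the two gave Unity the bigger speedup.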

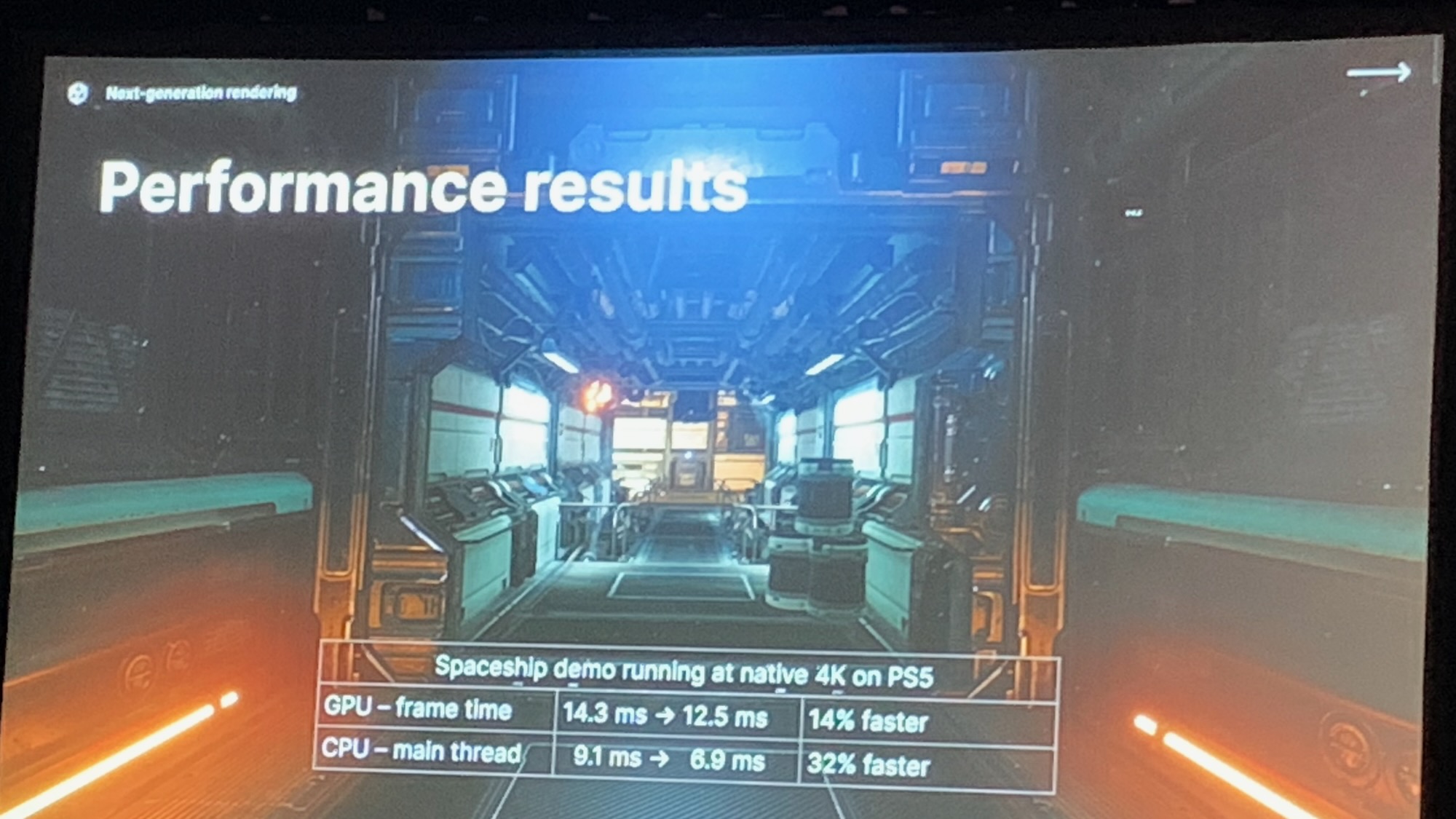

In the popular VR Alchemy Lab demo, which uses demanding graphics like dynamic lighting and shadows, foveated rendering with eye tracking dropped frame time from 33.2 ms to 14.3 ms, a 2.3x improvement. And in a 4K spaceship demo, CPU thread performance and GPU frame time were 32% and 14% faster, respectively.
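
The 2.3x figure is simply the old frame time divided by the new one. The short Python sketch below redoes that arithmetic and also converts each frame time into a frame rate; the function names are made up for illustration, and the frame-rate conversion is only a rough translation that assumes every frame takes exactly that long.

```python
def speedup(before_ms: float, after_ms: float) -> float:
    """How many times faster a frame renders after an optimization."""
    return before_ms / after_ms


def frame_time_reduction_pct(before_ms: float, after_ms: float) -> float:
    """Percentage of the original frame time that was eliminated."""
    return (1.0 - after_ms / before_ms) * 100.0


def frames_per_second(frame_ms: float) -> float:
    """Frame rate implied by a frame time, if every frame took this long."""
    return 1000.0 / frame_ms


# Alchemy Lab figures quoted by the Unity panel.
before, after = 33.2, 14.3
print(f"{speedup(before, after):.2f}x faster")                      # 2.32x faster
print(f"{frame_time_reduction_pct(before, after):.1f}% less time")  # 56.9% less time
print(f"{frames_per_second(before):.0f} fps -> {frames_per_second(after):.0f} fps")  # 30 fps -> 70 fps
```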

In practical terms, developers will be able to optimize their games to make the most of the PS5's raw power, and you shouldn't notice the reduced detail in your peripheral vision.

According to the Unity devs, eye tracking can also provide UI benefits beyond graphical enhancements. Games can magnify whatever a player is looking at, both visually and aurally. Or players can look at a specific object and squeeze the trigger to interact with it, so they don't grab the wrong thing and get frustrated.
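
Gaze-plus-trigger selection can be sketched like this: when the trigger is squeezed, pick the interactable object whose direction is closest to the gaze ray, as long as it falls within a small tolerance cone. This is a hypothetical Python illustration, not Unity's PS VR2 API; the `Interactable` class, `pick_gazed_object`, and the 5-degree default cone are all assumptions.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Interactable:
    name: str
    position: Vec3


def angle_between_deg(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in degrees between two non-zero 3D vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    cos_angle = dot / (math.hypot(*a) * math.hypot(*b))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def pick_gazed_object(
    eye_pos: Vec3,
    gaze_dir: Vec3,
    objects: Sequence[Interactable],
    trigger_pressed: bool,
    max_angle_deg: float = 5.0,  # assumed tolerance cone around the gaze ray
) -> Optional[Interactable]:
    """Return the object closest to the gaze ray when the trigger is squeezed, else None."""
    if not trigger_pressed:
        return None
    best, best_angle = None, max_angle_deg
    for obj in objects:
        to_obj = tuple(p - e for p, e in zip(obj.position, eye_pos))
        if math.hypot(*to_obj) == 0.0:
            continue  # object sits exactly at the eye; no meaningful direction
        angle = angle_between_deg(gaze_dir, to_obj)
        if angle <= best_angle:
            best, best_angle = obj, angle
    return best


shelf = [Interactable("red potion", (0.05, 0.0, 1.0)), Interactable("spell book", (0.5, 0.0, 1.0))]
picked = pick_gazed_object((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), shelf, trigger_pressed=True)
print(picked.name if picked else None)  # red potion
```

Picking by smallest angle inside a cone, rather than requiring an exact ray hit, presumably makes selection more forgiving of small jitter in the gaze estimate.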

The PS VR2 tracks "gaze position and rotation, pupil diameter, and blink states." That means a PS VR2 game could detect whether you wink at or stare at an NPC and trigger a personalized reaction, if the devs program one. Eye tracking can also power actual aim assist, so a poorly aimed throw course-corrects toward where you were looking.
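
Eye-tracked aim assist could work along these lines: if a throw leaves the hand within some angle of where the player was looking, bend it partway toward the gaze direction. The sketch below is a hypothetical Python illustration; `assist_throw`, its `strength` blend, and the 20-degree cutoff are assumptions, not anything the Unity panel specified.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Sequence[float]) -> Vec3:
    """Scale a vector to unit length."""
    length = math.hypot(*v)
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)


def assist_throw(
    throw_dir: Vec3,
    gaze_dir: Vec3,
    strength: float = 0.5,        # 0 = no assist, 1 = throw goes exactly where the player looked
    max_angle_deg: float = 20.0,  # beyond this, assume the player is aiming away on purpose
) -> Vec3:
    """Nudge a throw direction toward the player's gaze direction."""
    t, g = normalize(throw_dir), normalize(gaze_dir)
    cos_angle = max(-1.0, min(1.0, sum(a * b for a, b in zip(t, g))))
    if math.degrees(math.acos(cos_angle)) > max_angle_deg:
        return t  # too far off: leave the throw alone
    s = max(0.0, min(1.0, strength))
    # Blend the two unit vectors and renormalize. As long as max_angle_deg is
    # below 180 degrees, the blend can't collapse to zero length.
    return normalize(tuple((1.0 - s) * a + s * b for a, b in zip(t, g)))
```

With `strength=0.5`, blending two unit vectors equally and renormalizing points the throw exactly halfway between the hand's aim and the gaze; the cutoff keeps the assist from hijacking throws the player deliberately aimed elsewhere.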

And, of course, eye tracking will allow more realistic avatars for social VR games.

Unity (and other game engines, most likely) will also use PS VR2 eye tracking to create in-game heat maps that tell them exactly where players look in a given scene. Devs can use this during playtesting or public betas to see why players are struggling with a puzzle or what interests them the most in the environment.
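
Building a gaze heat map mostly comes down to binning gaze samples into a grid and counting them. The Python sketch below assumes gaze samples have already been projected into normalized (u, v) view coordinates; the function names, the grid layout, and that coordinate convention are illustrative assumptions, not Unity's actual tooling.

```python
from typing import Iterable, List, Tuple


def gaze_heatmap(samples: Iterable[Tuple[float, float]], cols: int, rows: int) -> List[List[int]]:
    """Count gaze samples per grid cell.

    Each sample is (u, v) in normalized view coordinates, where (0, 0) is the
    top-left corner and (1, 1) the bottom-right. Samples outside that range
    (e.g. invalid readings) are skipped.
    """
    grid = [[0] * cols for _ in range(rows)]
    for u, v in samples:
        if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
            continue
        # min() keeps u == 1.0 or v == 1.0 inside the last column/row.
        col = min(int(u * cols), cols - 1)
        row = min(int(v * rows), rows - 1)
        grid[row][col] += 1
    return grid


def hottest_cell(grid: List[List[int]]) -> Tuple[int, int]:
    """(row, col) of the cell players looked at most; ties go to the first cell found."""
    cells = ((r, c) for r in range(len(grid)) for c in range(len(grid[0])))
    return max(cells, key=lambda rc: grid[rc[0]][rc[1]])
```

Aggregated over many playtesters, the hottest cells would hint at what draws players' attention in a scene, and empty cells at what they overlook.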

It's fair to hope eye tracking will make games better while also feeling a bit weirded out by the amount of physical data the headset can collect. I'm curious whether games will tell players that their eye-tracking data is being sent to the developer, either in the ToS or more openly with an opt-in prompt before playing.

The Unity developers also made an interesting point that feels obvious in hindsight: PS VR2 games can support asymmetric couch co-op with non-VR players in the room. You can cast the Quest 2 or other headsets to your TV, but the PS VR2 is always tethered to a console that's already hooked up to a TV, which makes local multiplayer a more natural fit.

Developers can create multiple in-game cameras and send one feed to the headset and another to the TV for other players, so everyone experiences the same game from different perspectives. It'll make VR much more social and less isolating from the other people in the room, while also preventing either the VR or non-VR players from having an inherent (dis)advantage.

It'll be exciting to see which of the best PS5 games can support this kind of hybrid VR/2D play.

Lastly, the PS VR2 controllers' haptic feedback, finger tracking, and trigger resistance will let devs create more realistic, differentiated feedback based on whatever the player is doing. The Unity devs gave the example of spatial haptics, where an explosion on the player's right would produce the strongest feedback in the right controller, with scaled-down intensity on the headset and left controller.
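
The spatial haptics example can be sketched as a function that splits one event's intensity across the three devices based on direction and distance. Everything here is a hypothetical illustration: the linear distance falloff, the sine-based left/right split, the fixed `headset_share`, and the function name are assumptions, and no real PS VR2 or Unity haptics API is used.

```python
import math
from typing import Dict


def spatial_haptics(
    azimuth_deg: float,           # 0 = straight ahead, +90 = player's right, -90 = left
    distance_m: float,
    strength: float = 1.0,
    max_range_m: float = 20.0,    # assumed range beyond which nothing is felt
    headset_share: float = 0.5,   # assumed fraction of the base intensity sent to the headset
) -> Dict[str, float]:
    """Split one event's rumble across the left controller, right controller, and headset."""
    falloff = max(0.0, 1.0 - distance_m / max_range_m)  # linear distance falloff
    base = max(0.0, min(1.0, strength * falloff))
    side = math.sin(math.radians(azimuth_deg))           # +1 fully right, -1 fully left
    return {
        "right": base * (1.0 + side) / 2.0,
        "left": base * (1.0 - side) / 2.0,
        "headset": base * headset_share,
    }
```

For an explosion directly to the player's right at close range, this returns full intensity on the right controller, half on the headset, and nothing on the left, matching the behavior the Unity devs described.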

The Unity devs warned that "heavy trigger resistance should be used mindfully" to avoid fatiguing users. If players face resistance every time they draw a bowstring in Horizon Call of the Mountain, immersion can turn to frustration depending on the physical capabilities of the gamer.

While I couldn't sneak into a developer session and try the PS VR2 for myself, this panel gave me an idealized sneak peek at how the headset could stand apart from the competition, assuming devs take advantage of these tools.

Both the PS VR2 and the Oculus Quest Pro (which will also support eye tracking) have the potential to make VR much more immersive and reactive to players than ever before. And PS5 VR, in particular, will invite families and loved ones to join in on the excitement without needing to have their own headsets.

Michael is Android Central's resident expert on wearables and fitness. Before joining Android Central, he freelanced for years at Techradar, Wareable, Windows Central, and Digital Trends. Channeling his love of running, he established himself as an expert on fitness watches, testing and reviewing models from Garmin, Fitbit, Samsung, Apple, COROS, Polar, Amazfit, Suunto, and more.