Android XR headsets will take a vital camera option from Android phones

You'll be able to grant developers permission to access your Android XR headset or glasses cameras for more dynamic mixed-reality apps.

What you need to know

- Google confirmed that Android XR will have camera permissions nearly identical to those on Android phones.

- Devs can request access to the "rear camera" to contextualize your surroundings and place mixed-reality objects accordingly.

- They can also receive a real-time "avatar" stream generated from your "selfie-cam" feed for eye and face tracking.

- Meta Quest headsets currently don't allow this, though devs will get optional access sometime in 2025.

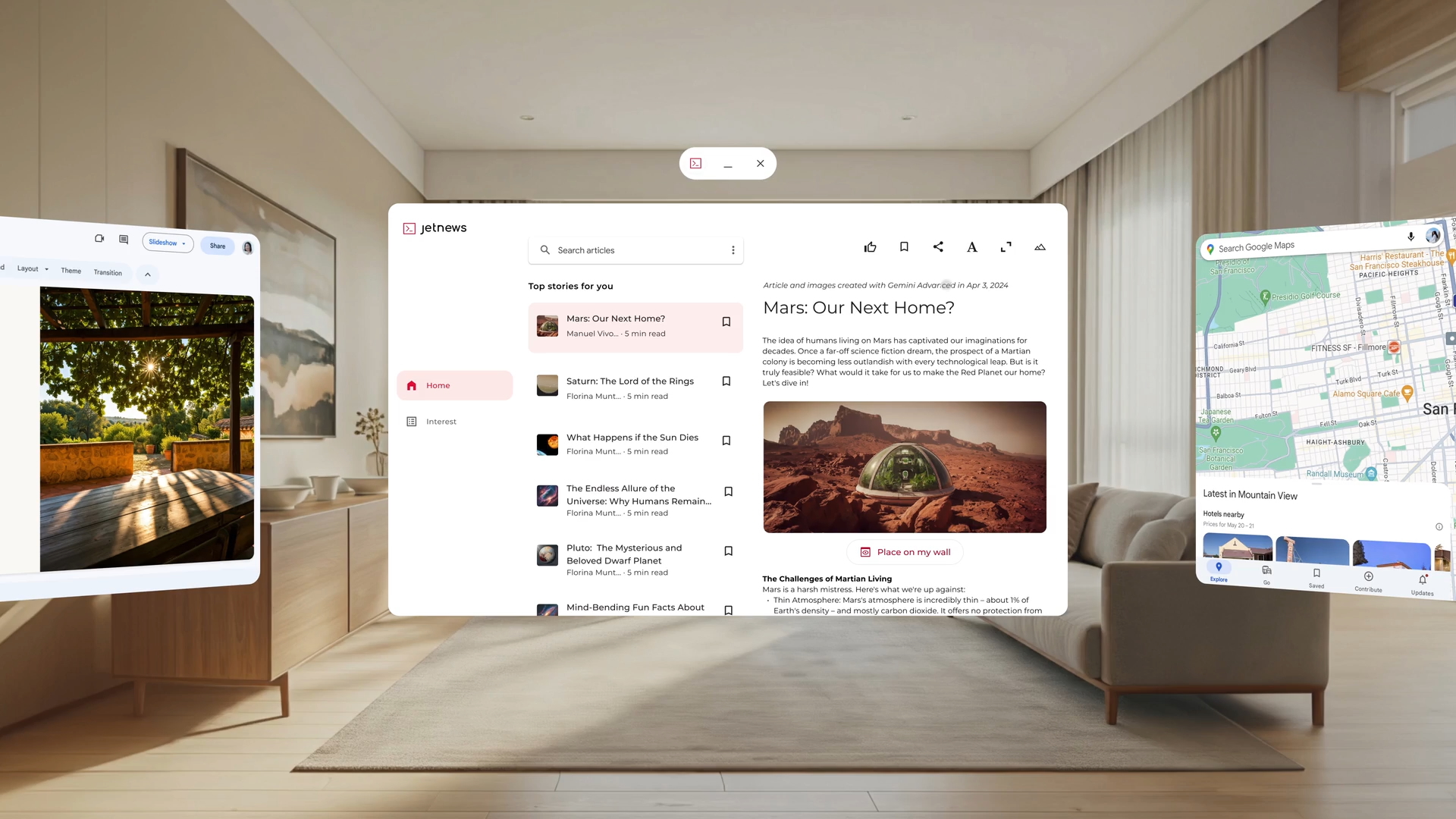

Android apps must ask permission before using your camera, and Android XR will follow the same rule: depending on your preferences, it will either keep your surroundings private or give devs access to your living room feed. With permission, headsets like Samsung's Project Moohan can analyze your living room and adapt mixed-reality games and apps to that context.

Developer Skarred Ghost (via UploadVR) asked Google whether devs would get Android XR camera access, given that Quest 3 headsets still block camera access for privacy reasons, at least until later in 2025.

A Google representative responded that "similar to any Android app, a developer can use existing camera frames with user permission for XR."

Specifically, an app can request access to the "main world-facing camera stream" and the "main selfie-camera stream" that points at your face; Google likened this to accessing the "rear" and "front cameras" on your Android phone.

The Android Developers site spells out the specifics: developers can request "Scene Understanding" access for use cases like "Light estimation; projecting passthrough onto mesh surfaces; performing raycasts against trackables in the environment; plane tracking; object tracking; working with depth for occlusion and hit testing; persistent anchors."

While Android XR enables "basic" hand tracking such as "pinching, poking, aiming, and gripping" by default, devs can request advanced tracking through the world-facing camera for "hand joint poses and angular and linear velocities," plus a "mesh representation of the user's hands." That sounds like it'll boost immersion and make hand-tracked VR games more enjoyable.

The Android XR developer page also explained that games will need selfie camera permissions to access your "eye pose and orientation" for avatars, "eye gaze input and interactions," and for "tracking and rendering facial expressions."

Thankfully, granting selfie-cam permissions won't actually give devs a real-time close-up of your goofy expressions and pores. Android XR will only send an "avatar video stream" generated on-device to developers.

As with any Android app, Android XR apps will require a certain level of trust that developers won't use your living room data for nefarious purposes. We assume that Android XR will let you review app permissions and check the Privacy Dashboard to confirm that apps aren't using camera data when they shouldn't.

Why this is so promising for Android XR

Currently, mixed-reality games on Quest rely on whatever boundaries you draw using system software. A game can ask you to draw a tabletop space for building XR LEGO sets, but it can't see what you're looking at or interpret that data in creative ways.

Meta Quest headsets will enable a Passthrough API in early 2025 to open camera data up to devs, so it's promising that Android XR will offer this kind of access from the start.

Developer Skarred Ghost described how he used a "hacky camera access trick on Quest" to "prototype an AI+MR application that can help people do some interior design of their houses," something that "wouldn’t have been possible" without camera permissions.

With Android XR's camera permissions, you could transform your house with holographic designs to make it look cooler, or scan a real-world object and then place a virtual copy of it in your living room.

Mixed-reality games like Laser Dance use general headset boundary data and object detection to create XR obstacles, but more exact living room data would allow for more creative challenges (like recognizing a table as something you could crawl under, instead of just a massive block taking up space).

Project Moohan and other XR headsets will certainly benefit, but it's AR glasses that'll really need this kind of camera access, simply because you'll wear them all day in unfamiliar environments where analyzing your surroundings is the whole point! Apps like Google Maps and Translate will need camera access, and Android XR will let third-party apps tap into your feed, too.

Beyond all of the possible applications of camera access, it's reassuring to know that Android's privacy focus will carry over to Android XR, so no developer gets to peek into your living space unless you're certain you trust them.

Michael is Android Central's resident expert on wearables and fitness. Before joining Android Central, he freelanced for years at Techradar, Wareable, Windows Central, and Digital Trends. Channeling his love of running, he established himself as an expert on fitness watches, testing and reviewing models from Garmin, Fitbit, Samsung, Apple, COROS, Polar, Amazfit, Suunto, and more.