AAA gaming has a 3 Body Problem that only No Man's Sky can save

Generative AI is the key to creating truly open worlds for everyone.

Massive virtual science fiction worlds all tend to revolve around a similar core concept. If they weren't made by aliens, then they must have been made by AI. Movies like The Matrix or shows and books like "3 Body Problem" all use this crux as a way to explain how a human with finite resources could somehow make something that feels so infinite.

Look at any of the biggest games, and you'll quickly understand what I'm talking about. GTA V, The Legend of Zelda: Tears of the Kingdom, and Starfield all feature massive virtual worlds that eventually end and largely have some sort of odd restrictions within them. It all comes down to a real-life three-body problem: human time, skill, and money.

In his weekly column, Android Central Senior Content Producer Nick Sutrich delves into all things VR, from new hardware to new games, upcoming technologies, and so much more.

But something like No Man's Sky, which is procedurally generated and therefore effectively infinite, gives us a look at what a fully generative AI-based game could look like and how this tool could solve the problem in one fell swoop. The latest update is a masterclass in development, showing how you can add new systems and items to never-ending worlds to make them deeper and even more complex. No Man's Sky doesn't use generative AI like Meta or Google do, but it uses an algorithm to build the worlds you explore in the game, and the result can feel similar.
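To make the idea of procedural generation a little more concrete, here is a minimal Python sketch of how a game might derive a planet from nothing but a seed and a set of coordinates. This is not Hello Games' actual algorithm; the function name `generate_planet`, the `BIOMES` list, and the value ranges are all made up for illustration. The point is only that the same inputs always produce the same planet, so nothing has to be built by hand or stored in advance.

```python
import hashlib
import random

# Hypothetical biome list, invented for this illustration.
BIOMES = ["lush", "desert", "frozen", "toxic", "volcanic", "barren"]


def generate_planet(universe_seed: int, x: int, y: int, z: int) -> dict:
    """Derive a planet's traits deterministically from a seed and coordinates."""
    # Hash the seed and coordinates so every location gets its own
    # repeatable stream of pseudo-random numbers.
    key = f"{universe_seed}:{x}:{y}:{z}".encode()
    digest = hashlib.sha256(key).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))

    # Assumed value ranges; a real game would drive far more systems
    # (terrain, flora, fauna, weather) from the same kind of stream.
    return {
        "biome": rng.choice(BIOMES),
        "radius_km": rng.randint(1000, 9000),
        "moons": rng.randint(0, 4),
    }


if __name__ == "__main__":
    # Visiting the same coordinates twice yields the same planet.
    first_visit = generate_planet(42, 10, -3, 7)
    second_visit = generate_planet(42, 10, -3, 7)
    print(first_visit)
    print(first_visit == second_visit)
```

Because each planet is computed on demand rather than saved to disk, the number of worlds is limited only by the range of coordinates the game allows, which is why a small team can ship a universe that no player will ever finish exploring.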

And it doesn't stop with open-world titles. Generative AI plays a huge part in making metaverse-style games like VRChat and Rec Room accessible to more people. It makes it far easier to build your own worlds and places, removing the need for the budgets and specialized tools that professional developers use to craft exquisite games. A few games are giving us a glimpse of this future, and as we've seen plenty of times before, science fiction is driving our vision of what future VR games will look like.

The 3 Body Problem

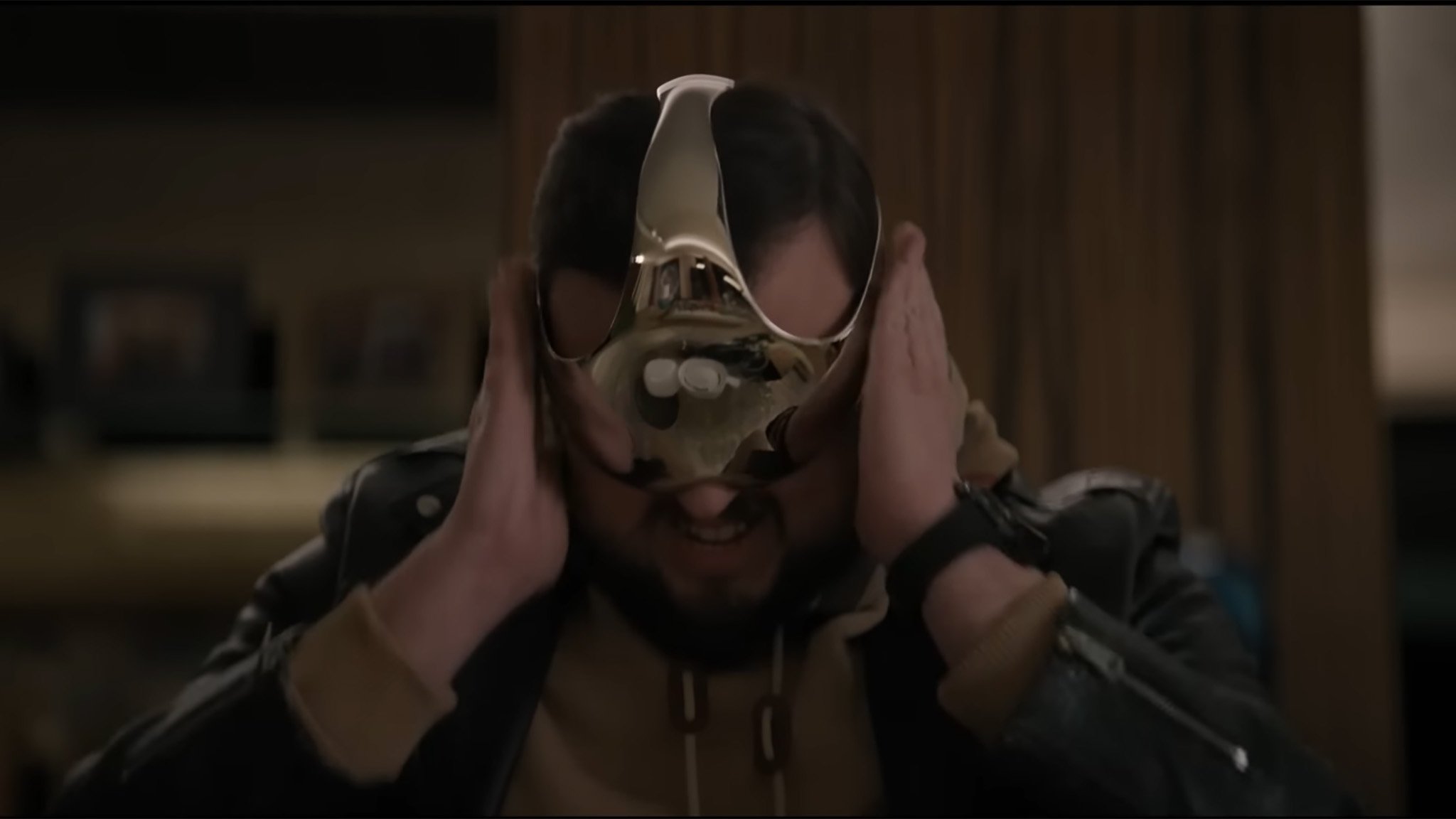

While sites like The Verge have been focused on the VR headset in the new Netflix show "3 Body Problem," I'm far more interested in the virtual worlds experienced by the show's characters. These worlds are realistic and convincing. They feature dynamic content unlike anything we've seen in modern games, and they represent a leap I think can only occur once we fully embrace generative AI in gaming.

Many gamers love their AAA games (titles like Cyberpunk 2077, Spider-Man 2, Call of Duty: Modern Warfare 2, and The Last of Us: Part II), but they all have a huge problem in common. They require hundreds of millions of dollars, teams of hundreds of people, years of development, and beaucoup overtime hours. None of this is sustainable, and it's absolutely out of reach for the vast majority of development studios.

The problem compounds itself when you think about a game like Rec Room, VRChat, or Roblox, titles that rely almost entirely on user-created content to keep players interested and coming back regularly. While all three of those examples feature tools that help players and would-be creators make their own worlds, these tools may require more time and effort than you have as an individual. That's where generative AI comes in.

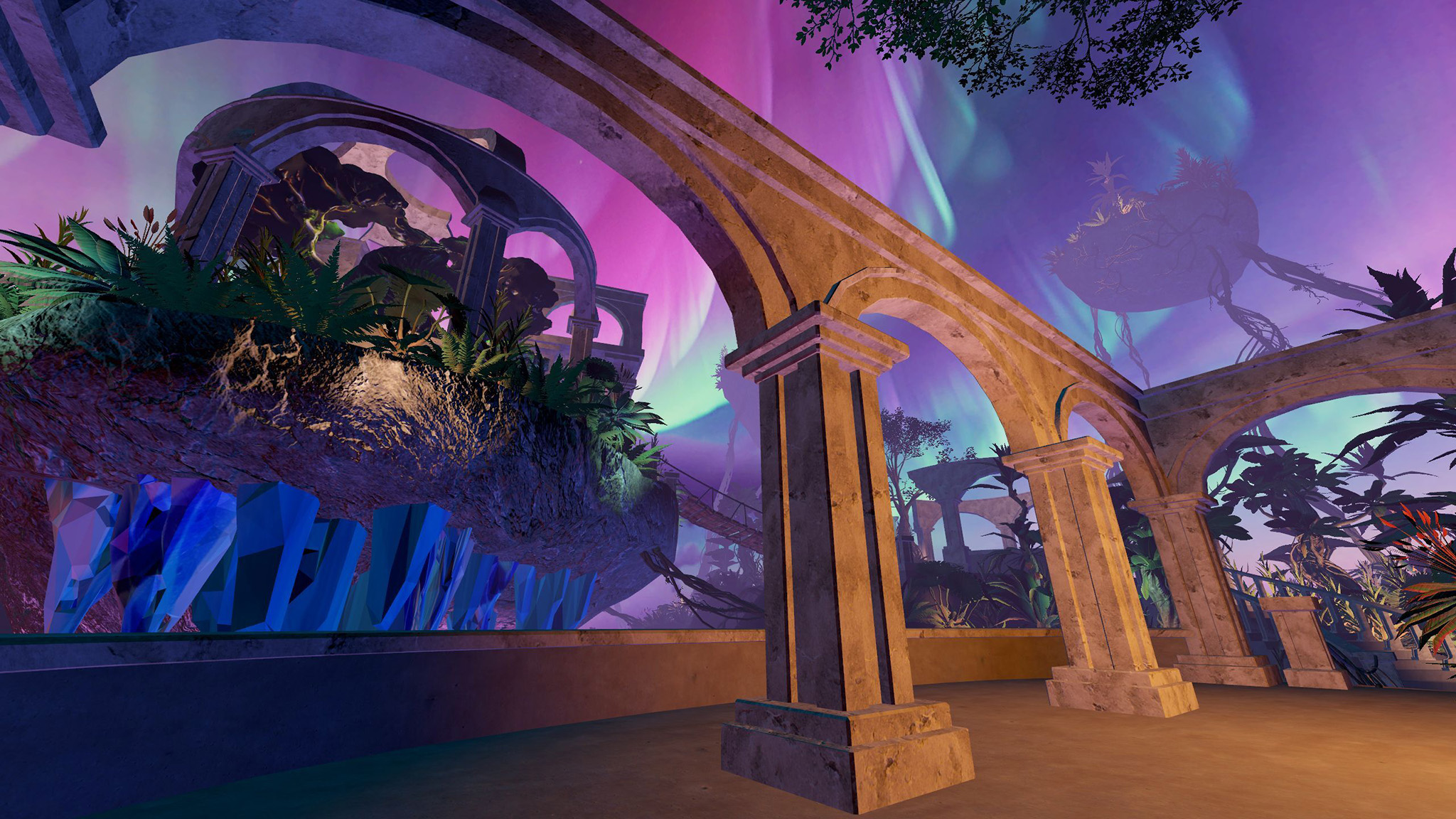

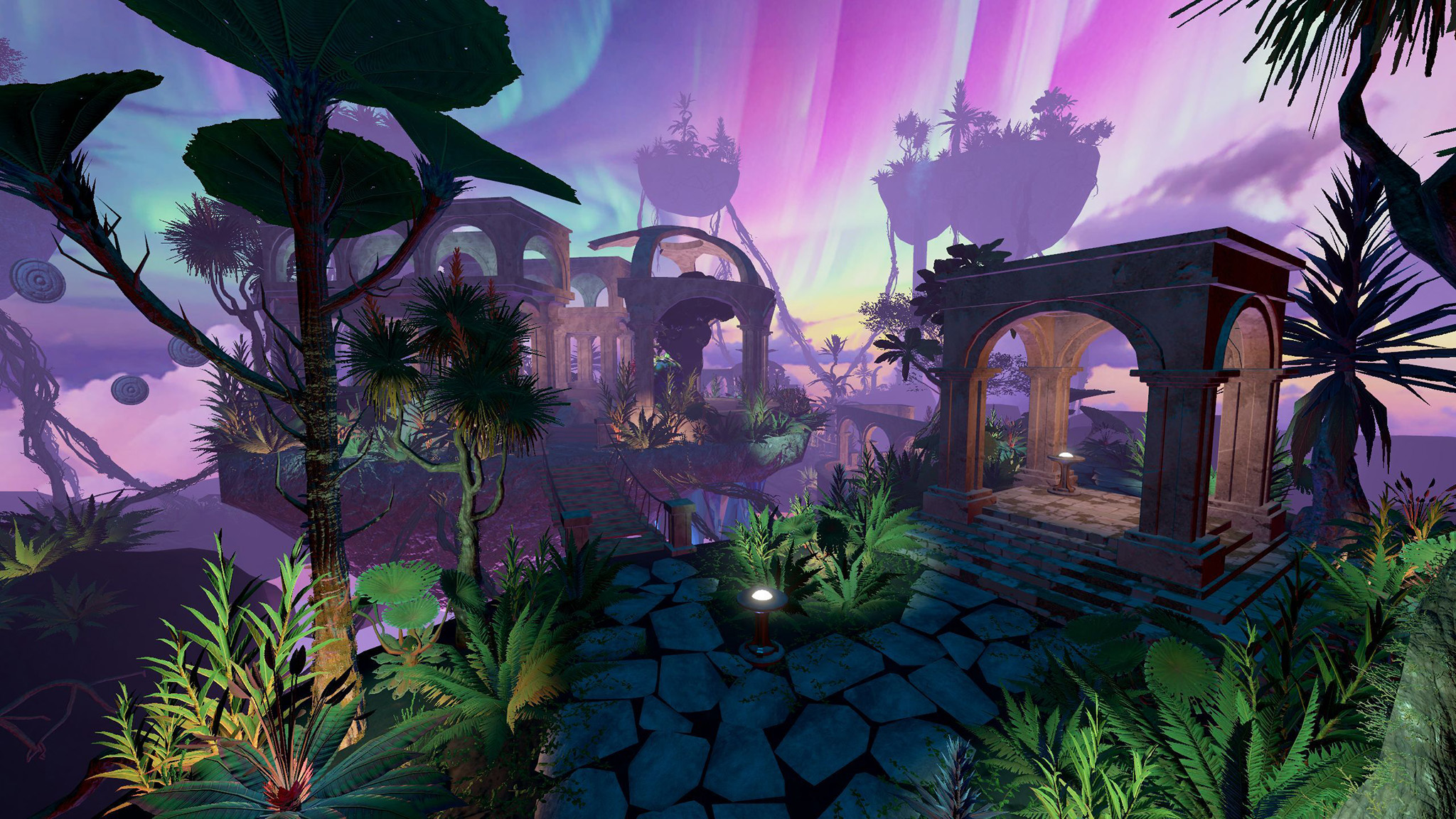

Late in 2023, the popular social game Rec Room posted about how its new generative AI tools could help creators make even better worlds. Most player-made Rec Room worlds are visually simple and typically rely on placing pre-made assets in different ways to mimic the look of more complex objects, but Fractura, the world the Rec Room team built with those tools, is different.

The team at Rec Room used a series of generative AI tools to make a world substantially more complex than anything I've ever seen in Rec Room, and the strain it put on my Quest 3 certainly proved that. Part of the problem is that the in-game assets aren't optimized for performance, but that explanation, along with many others, is offered as you walk around the virtual world.

Alongside some impressive visuals made by generative AI tools and placed by human hands is a fire sprite that poses questions using ChatGPT. The idea is that players would hang around the sprite and use it as a conversation starter to break the ice in what could otherwise be an awkward social situation.

I'm an introvert at heart and don't typically start up conversations with strangers, but a tool like this would certainly make that easier! Not only that, but being able to come up with a world concept and spend just a few hours making it with these new tools is a lot more attractive than needing to put hundreds (or thousands) of hours into more traditional methods. Ironically, Google is already pushing developers to do exactly this.

Because of this, player-made content is about to see a massive boost in quality and quantity, and it follows on the heels of more accessible development environments from engines like Unity and Unreal.

But what if you're not interested in making your own games? Generative AI can still play a part in enhancing your gaming experience in surprising ways. Last year, Golf Plus, one of the best Meta Quest games, teased an update that uses a ChatGPT-powered virtual caddy to create courses specifically tailored to help you conquer a problem.

🤯 AI caddy is 🔥 Totally convinced that this is the future of interaction in #VR! Huge shoutout to @batwood011 and @SindarinTech for getting the API ready in record time. The implications here are incredible. pic.twitter.com/EDMfyS9w8o (March 31, 2023)

Golf Plus creator Ryan Engle told me the update still "isn't ready for prime time" but that the team uses "it for inspiration and our in-game medals." Engle has been extremely positive about the concept of an AI-driven caddy to help players and has openly stated that he feels this is the future of interaction in games.

Similarly, Nvidia's virtual characters have already seen massive improvements in just a few months, graduating from what The Verge called "a fairly unconvincing canned demo" to something that "exceeds what I expect from the average denizen in 'The Elder Scrolls' or 'Assassins Creed'."

That's not to say that AI needs to entirely replace what human hands and voices have been creating for decades. I think there's still a very real place for human developers and voice actors in video games, particularly if we're talking about main characters. But, as I said before, humans have finite resources and, often, things like in-game NPCs are the first things to showcase that limitation.

That brings me back full circle to the virtual worlds in "3 Body Problem." It's clear that the aliens in the story can't stop time or alter it in any meaningful way, so that means they probably had the help of some super intelligent AI when creating the realistic world the story's scientists encounter when putting on that iconic shiny headset.

But this isn't a game full of just AI-controlled NPCs or procedurally-generated worlds. It's also filled with human players — much like No Man's Sky — who can explore and solve problems on their own without impacting the experience of others. If anything, their presence enhances the impact by creating an even more believable game to play.

We're at the very beginning of our journey with generative AI, and while it can be scary to some folks, myself included as a journalist, this technology has the potential to substantially improve the lives of so many people who will inevitably use it in the future.

Some game developers have worried about their own jobs as these tools evolve, but I don't think these will be tools to completely replace humans. These tools will be the building blocks of bigger and even better things, allowing us to expand the possibilities of gaming beyond the scope of human time, effort, and money. If nothing else, that's how you solve a real-life three-body problem.
