You'll soon be able to control Chromebooks with just your face

Face gesture control is coming to all Chromebooks soon.

What you need to know

- A new Chromebook accessibility feature will allow users to control the mouse cursor and virtually map keyboard keys with facial gestures.

- All Chromebooks are said to be getting the feature automatically in a future Chrome OS update.

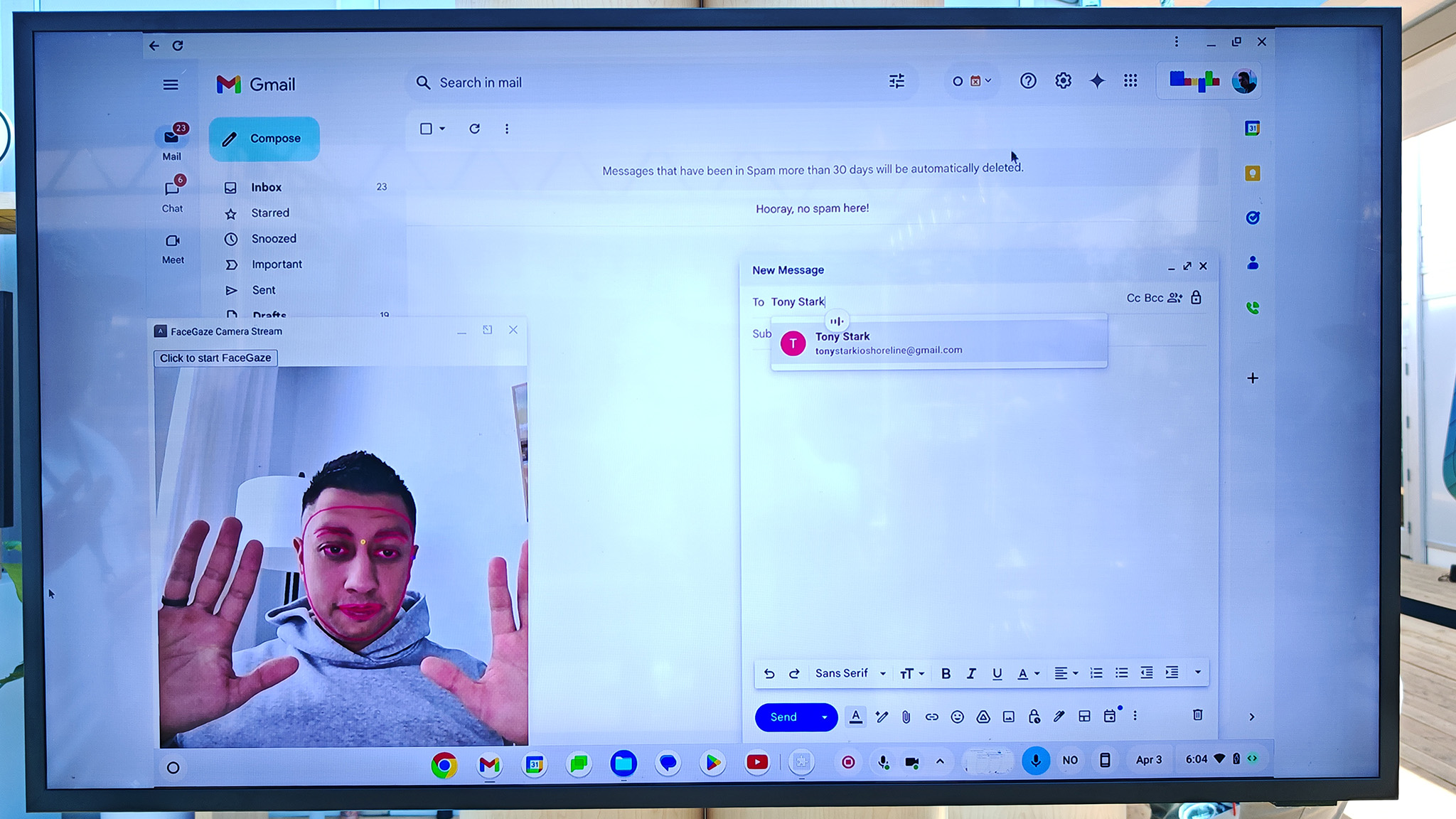

- We got to try it out in person at Google I/O 2024 on different Chromebooks, and an open-source preview is available on GitHub now.

All Chromebooks are getting a brand-new feature that lets users control their Chromebooks using just their faces. The new tool lets users move the mouse cursor by tilting their heads, click by scrunching their lips, and even play games by smiling or raising their eyebrows.

AI certainly reigned supreme at Google I/O 2024, but this new AI-powered feature won't require specialized hardware or even a new Chromebook. It all works with your existing Chromebook's front-facing camera. Google calls the feature Project Gameface, and it's coming sometime this year, but you can already try a preview of the open-source tool on the Project Gameface GitHub.

The new accessibility feature is built with Google MediaPipe and was "inspired by the story of quadriplegic video game streamer Lance Carr," making it possible to control the entire operating system without the need for traditional input tools or expensive head-tracking mice.

Google often launches new accessibility features for its Chromebooks and Android products, but features like this tend to be restricted to newer hardware with dedicated AI co-processors. This one, however, will run on all Chromebooks, according to a Google representative at the show. That means you don't even need one of the best Chromebooks to get it!

At Google I/O 2024, Derrek Lee (pictured above) and I got to try out the new feature running on Chromebooks from two different brands. One computer was running the popular mobile game Geometry Dash, and players could jump just by smiling at the camera.

Likewise, the other Chromebook was running the famous Chrome dinosaur game, and you just needed to raise your eyebrows to make the dino jump.

The program supports gestures like opening your mouth, smiling, scrunching your mouth to the side, looking left, right, or up, and raising either eyebrow. These gestures can be mapped to any input action supported by the OS, like moving the mouse, clicking mouse buttons, pressing keys, and even pressing key combinations. You can even invoke Google Assistant with any gesture to type with your voice and do anything else on the computer that Assistant can help with.


That made it possible for us to navigate the entire operating system, using head movements to move the mouse cursor around. It's a bit like controlling an Apple Vision Pro, except that on a Chromebook you tilt your head to move the cursor instead of looking where you want to click.

Face gestures were incredibly responsive and worked very accurately on the Chromebooks we used.

Performance and accuracy are likely going to depend on the Chromebook used. The team seemed confident that it would be just fine even on the cheapest Chromebooks, though. In our demo, the performance was impressive, and I was surprised at how quick and accurate the input gestures were. Playing something as twitchy as Geometry Dash shows just how responsive this can be.

Lots of new accessibility features have been rolling out in 2024, including live caption support on the Meta Quest 3, eye tracking in iOS 18, and an upgraded Guided Frame feature on Google's Pixel phones that lets vision-impaired users point their Pixel's camera and have the phone describe what it sees. It's good to see more features like this rolling out to more and more phones, laptops, and other devices to help users with specific accessibility needs.
