What is Agentic AI, and why is it such a big deal on the Galaxy S25?

Analyze, think, and act.

During the Galaxy Unpacked 2025 presentation, Samsung and Google mentioned something we haven't heard before in connection with smartphones: Agentic AI.

You might have missed it because they said the name and nothing more about it, but they talked a lot about what it should be able to do inside the new Galaxy S25 phones. It plays a big part in all the newer, smarter Galaxy AI features.

Agentic AI is a new technology, but it's not brand-new cutting-edge stuff. It's been around for a minute and is currently running inside factories and manufacturing plants that use a lot of automation.

What it is sounds kind of boring: probabilistic tech that's adaptable to changing environments and events. But it's not boring! In practice, it's a mix of LLMs, machine learning, and automation (either software or hardware) that can create semi-autonomous AI, which will analyze data, set goals on the fly, and take the appropriate action to meet the goals it set for itself.

This sounds like a fresh step towards the scary humanization of AI, but we're not quite there. Yet. But it can create an AI agent that seems more like a person when you interact with it and can better serve your needs, within boundaries, of course.

Samsung mentioned that the new AI tools and features are available because of a new specialized Snapdragon chip with an ultra-powerful NPU (Neural Processing Unit). That's a big part of it all.

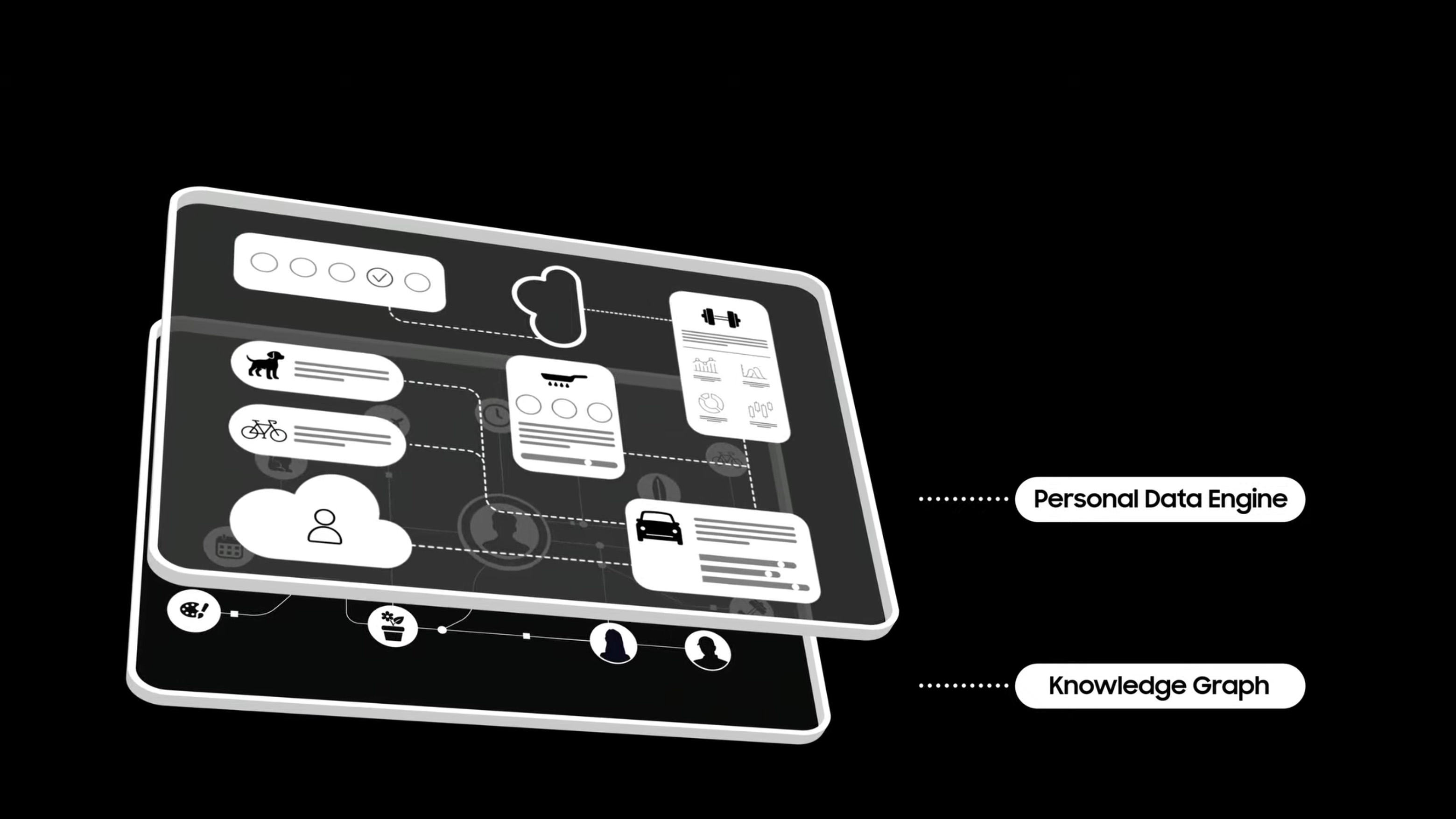

Another thing mentioned was a knowledge graph built on the things you do and where, when, and how you do them. Both the NPU and the knowledge graph are used to bring Agentic AI to life inside a tiny smartphone.

Consider how Agentic AI works in a factory since it's already in place there. Someone lets the software know what they need, say 20,000 widgets ready and boxed for shipping in five days.

Workers program individual automation lines that perform the various jobs needed to build a widget. This is like the data you add to your own knowledge graph on your phone — you're telling the software the recipe and the ingredients for the end result.

Agentic AI oversees the whole operation. It knows how many widgets need to be finished and when they are needed, so it adjusts individual parameters to meet the goal in the most efficient way. If one line making a part is down for maintenance, it can ramp up the capability of another line that makes the same part until the maintenance is complete, for example. If the packaging line goes down, it can stop whatever needs to stop so it doesn't overwhelm it while it's being serviced.
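To make the "ramp up another line" idea concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not how any real factory system or Galaxy AI works: the `Line` class, the `rebalance` function, the part name, and all the rates are hypothetical and made up for illustration. It simply hands the required hourly output to whichever lines are still online, up to each line's maximum, and reports any shortfall.

```python
from dataclasses import dataclass


@dataclass
class Line:
    """A hypothetical production line (all fields are illustrative)."""
    name: str
    part: str          # which part this line makes
    rate: float        # current output, units per hour
    max_rate: float    # the most this line can produce per hour
    online: bool = True


def rebalance(lines, part, required_rate):
    """Greedily spread required_rate across online lines that make `part`.

    Offline lines are set to zero. Returns the shortfall in units per hour
    (0.0 means the goal can still be met).
    """
    remaining = float(required_rate)
    for line in lines:
        if line.part != part:
            continue
        if not line.online:
            line.rate = 0.0
            continue
        line.rate = min(line.max_rate, remaining)
        remaining -= line.rate
    return remaining


# Line A is down for maintenance, so line B has to cover the whole goal.
lines = [
    Line("A", "gear", rate=100, max_rate=150, online=False),
    Line("B", "gear", rate=100, max_rate=220),
]
shortfall = rebalance(lines, "gear", required_rate=200)
print([(line.name, line.rate) for line in lines], shortfall)
```

In this made-up case, line B is pushed to 200 units per hour and the shortfall is zero. A real system would weigh far more than a single rate number, but the loop shows the basic shape: watch the state, compare it to the goal, and adjust.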

These are all routine tasks, but the software was programmed to watch them, analyze the data it gets, and make informed decisions based on it.

On your phone, Agentic AI has all the data from everything you do with your devices in one place. When you ask the AI for something, say a photo of a sunset at Nags Head on July 4, or how much you need to run every day to burn off a few more calories, it rifles through that data and analyzes what it finds.

It then decides what data is more useful, what data shouldn't be used at all, and which data is the most important to provide you with what you requested. If it sees 100 photos of Nags Head, 1,300 photos taken on July 4, and 71 photos of sunsets, it cross-checks the data to see if any of it comes from the same photo. If it does, that's what it shows you. If it doesn't, it returns whatever it was programmed to respond with when there is no answer.
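The cross-check described here boils down to finding what three groups of photos have in common. A minimal Python sketch, assuming each photo has an ID and each search (place, date, subject) returns a set of IDs; the function name, the IDs, and the fallback message are all hypothetical, and nothing here reflects how Galaxy AI is actually built:

```python
def find_matching_photos(by_place, by_date, by_subject):
    """Return the photo IDs that appear in all three result sets, sorted."""
    return sorted(set(by_place) & set(by_date) & set(by_subject))


# Hypothetical photo IDs standing in for the three piles of results.
nags_head = {"img_0012", "img_0340", "img_0977"}
july_4 = {"img_0340", "img_0501", "img_0977"}
sunsets = {"img_0340", "img_0888"}

matches = find_matching_photos(nags_head, july_4, sunsets)

# Fall back to a canned response when nothing overlaps.
answer = matches if matches else "No matching photos found."
print(answer)
```

Here only `img_0340` shows up in all three sets, so that is the one photo returned. If the sets had nothing in common, the canned message would come back instead.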

It also works autonomously, so you can get a notification telling you to leave for work 20 minutes early because there is an accident on your normal route and you need to go a different way. And to bring an umbrella even though it looks sunny right now, because it will rain later this afternoon. And to get your coffee somewhere new because the Dunkin you normally stop at isn't on your new route.

It remains to be seen how well this new Galaxy AI will work. On the surface, it really doesn't seem so different from the things our phones can already do for us or tell us. But the technology is a big step up, and a lot more will be possible once some bright engineer dreams of doing it.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.
