Meta AI gets a voice and will be able to 'see' your photos

At Meta Connect, the company also announced that its AI assistant is used by 400 million people every month.

What you need to know

- WhatsApp, Facebook, Messenger, and Instagram users can talk directly to Meta AI and receive responses in AI voices, including those of familiar celebrities.

- They can also share photos and ask Meta AI to remove the background or tell them what's in the image.

- Meta AI's translation tool also gains new capabilities, such as automatically translating the audio of Reels.

Meta has announced new ways for users to communicate with Meta AI: they can now talk to it, and it responds in familiar AI voices, including those of some celebrities. Additionally, it can answer questions about the photos users share and edit them in interesting ways.

For the unaware, Meta AI is accessible through Messenger, Facebook, WhatsApp, and Instagram DMs. Users can ask the assistant questions by voice and get answers, or casually listen to jokes.

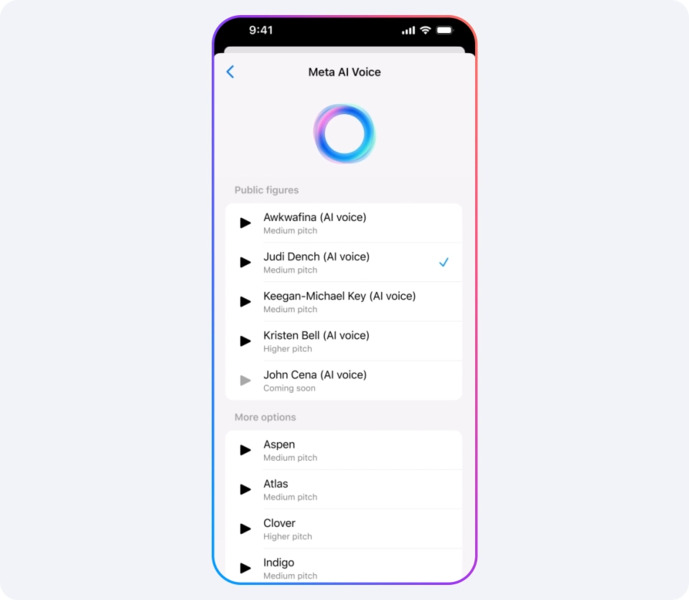

Meta notes in a press release that users can choose a familiar voice for the responses, with options including the AI voices of Awkwafina, Dame Judi Dench, John Cena, Keegan-Michael Key, and Kristen Bell. The voice feature will roll out first in regions including the U.S., Canada, Australia, and New Zealand.

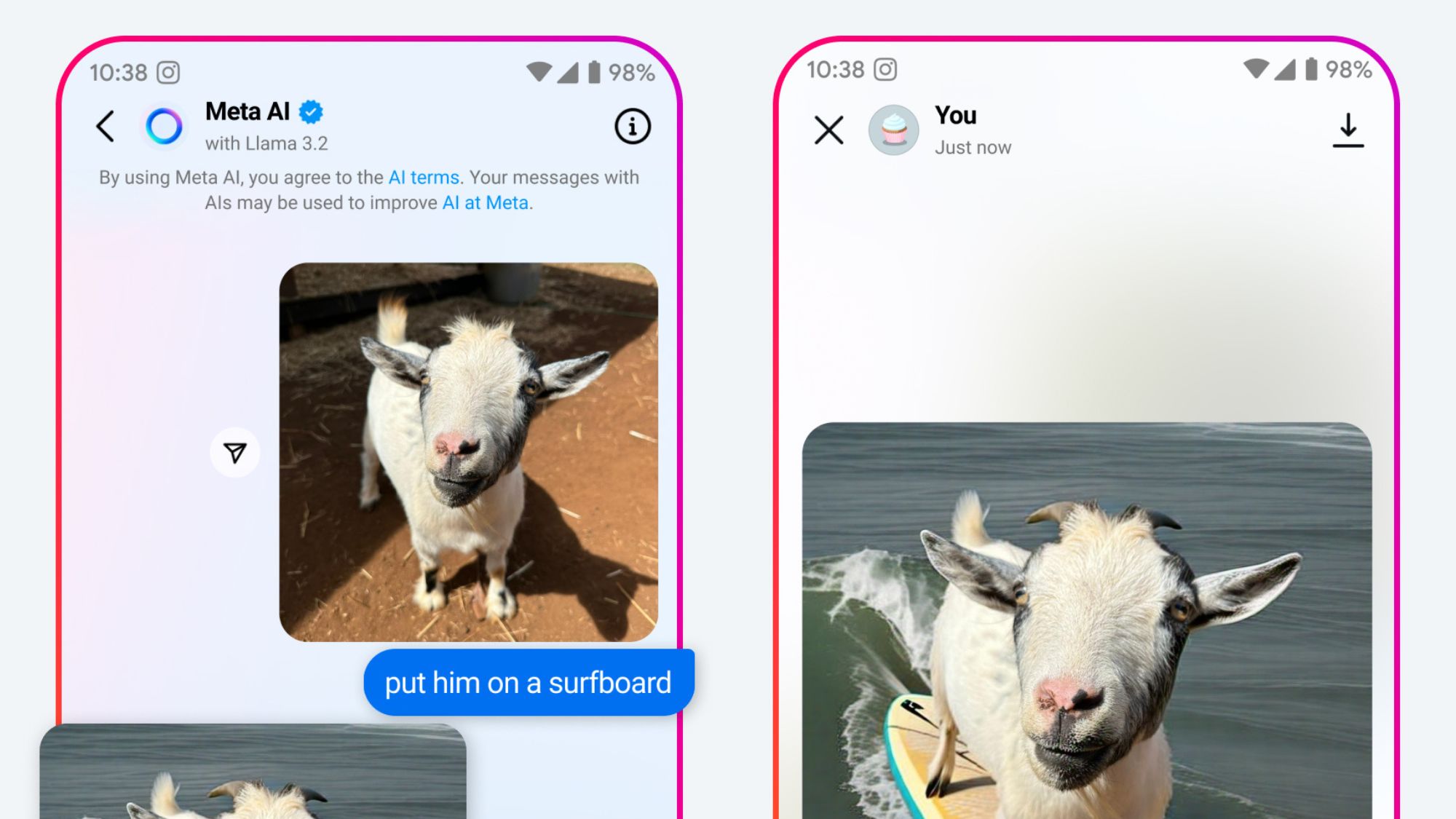

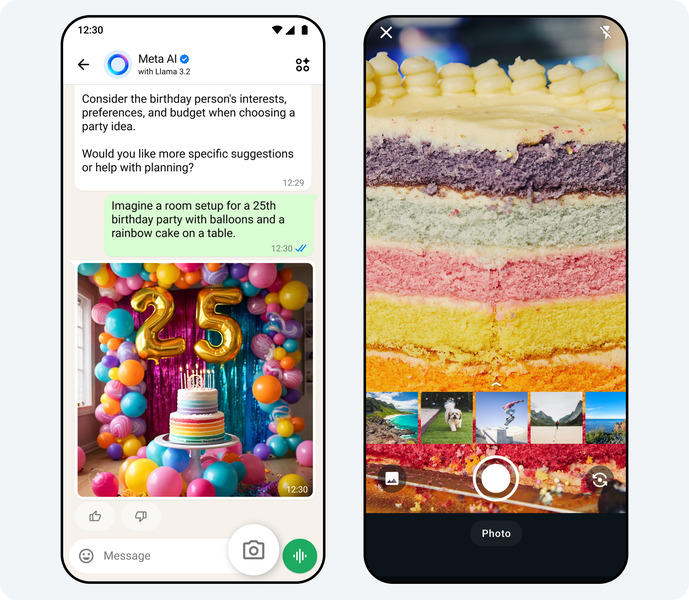

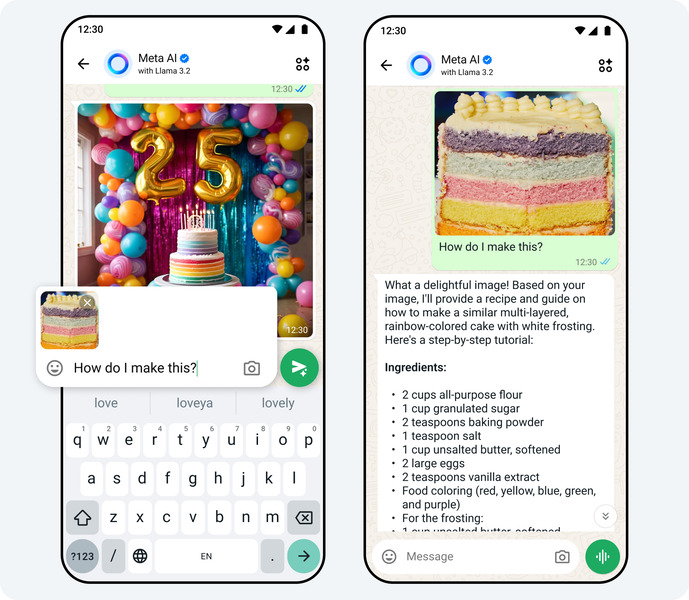

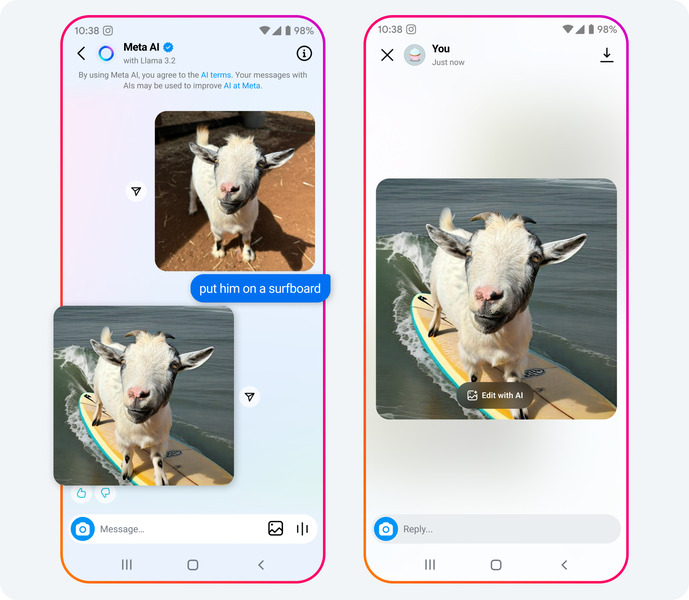

Users in the U.S. will also be able to share photos with Meta AI. The assistant is said to understand what is in the photo, and users can ask questions about it. For instance, they can share a photo of a flower or dog and ask what kind it is, or snap a picture of a dish and ask for a recipe to prepare it.

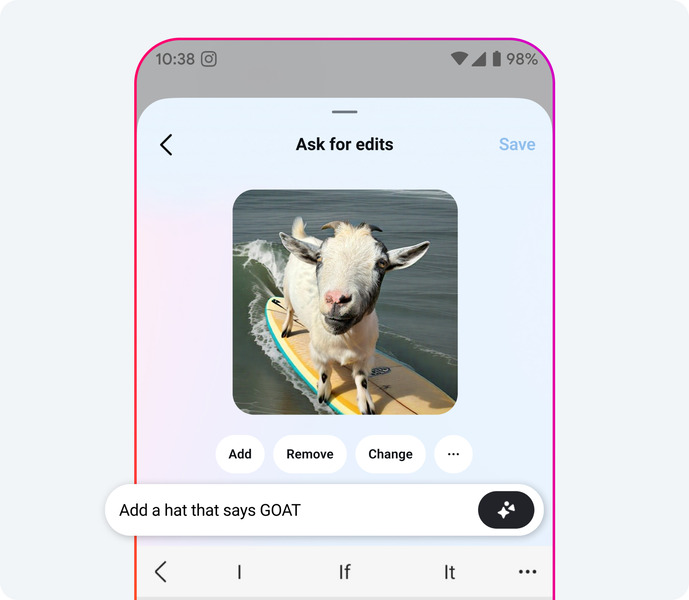

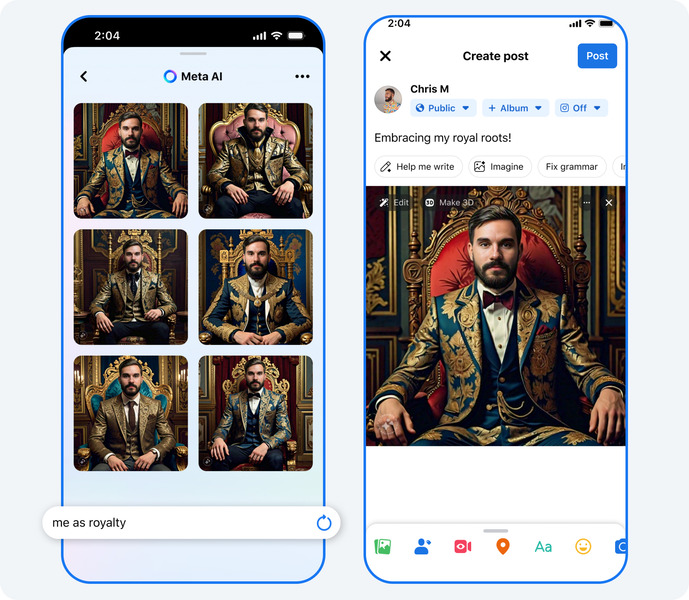

Moreover, users can ask Meta AI to edit a photo or suggest ideas for editing it. They can tell the assistant what to add, remove, or change, such as swapping an outfit or replacing the background entirely.

Since Meta AI is available on Instagram, users sharing a photo to their Story can also generate a fun background by giving Meta AI a relevant prompt before posting it.

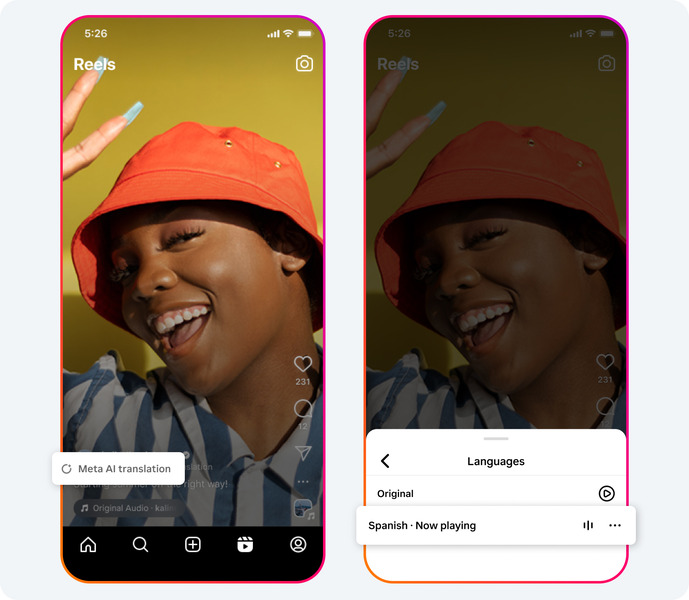

Another interesting addition is a new Meta AI translation tool, which "will automatically translate the audio of Reels."


This will allow creators to reach a wider audience with their content and let viewers rely less on captions and experience Reels in their preferred language. Content creators get "automatic dubbing and lip syncing; Meta AI will simulate the speaker's voice in another language and sync their lips to match."

The rollout begins as a test on Instagram and Facebook with videos from creators in Latin America. These videos will be translated into English and Spanish in the U.S., and the feature is expected to expand to more regions soon.

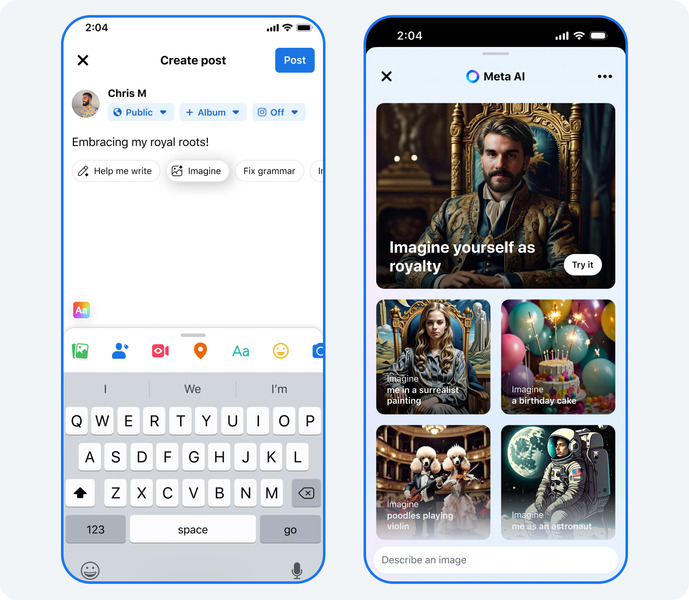

Facebook is also getting nifty AI features, which are essentially an expansion of Meta AI's Imagine features. Users can generate their own AI-powered superhero photos, which can be shared as Stories or set as their profile pictures on the social media platform. These images can also be shared with friends, who can see, react to, or even mimic them. As on Instagram, Meta AI can suggest captions for Stories on Facebook as well.

For Messenger and Instagram DMs, people can choose from a wide range of chat backgrounds or change the text bubble color. While these options have been presets, users can now create personalized ones with the help of AI.

Meta says it is also testing AI-generated content in Facebook and Instagram feeds, where users could see images made by Meta AI that are tailored to their interests or current trends. They can further "tap a suggested prompt to take that content in a new direction or swipe to Imagine new content in real time."

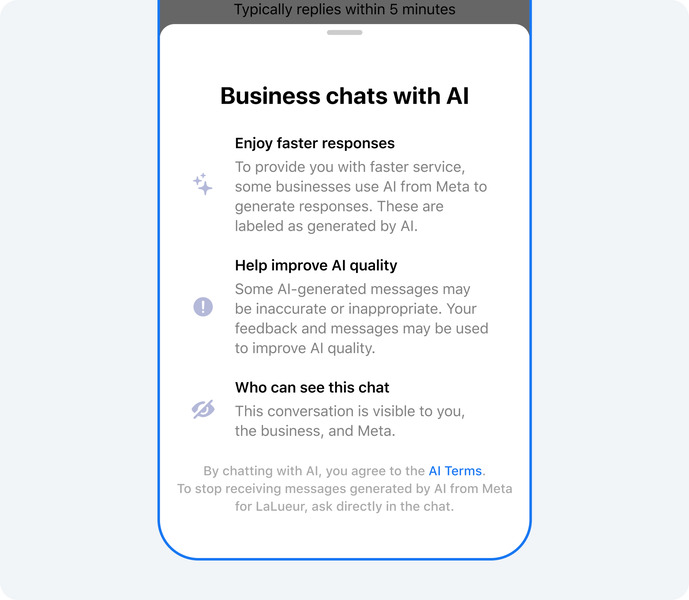

Lastly, Meta is expanding its business AIs to more businesses that use click-to-message ads on platforms like WhatsApp and Messenger. This will help them quickly set up business AIs that talk to their customers, provide support, and facilitate commerce.

The support includes answering customer questions and finalizing a purchase, among other things. These business AIs aim to help businesses "engage with more customers and increase sales."

The image-sharing capabilities could be part of the latest Llama 3.2 rollout, which includes Meta's vision models (11B and 90B) that understand both "images and text." There are also smaller 1B and 3B models aimed at mobile devices powered by Qualcomm and MediaTek chips. These models are also optimized for Arm-based processors.

Meta is also sharing its official Llama Stack Distributions so developers can work with Llama models in environments such as single-node, on-prem, cloud, and on-device. It is further working with companies like AWS, Databricks, Dell, Fireworks, Infosys, and Together AI "to build Llama Stack Distributions for their downstream enterprise clients."

Vishnu is a freelance news writer for Android Central. Since 2018, he has written about consumer technology, especially smartphones, computers, and every other gizmo connected to the internet. When he is not at the keyboard, you can find him on a long drive or lounging on the couch binge-watching a crime series.
