I expected more from Google Search's new privacy features

Much ado about nothing.

Google recently improved and expanded some of Search's features to try to help you better protect your personal information. It's great to see Google doing anything in the name of privacy, but this feels a bit underwhelming and hardly worth more than a blog post. Yet Google actually held a press briefing to detail all of the changes.

I realize that a product as complex as Google Search can't just be rebuilt on the fly, but I wanted — and expected — a little more.

The changes Google announced are good, and all three of them are things that need attention. I just don't like the policies that govern them or, in some cases, how they are presented to the user.

Hopefully, they are just the beginning, and we will see a new Google that values user privacy a little more than it has in the past.

The biggest (and best) change

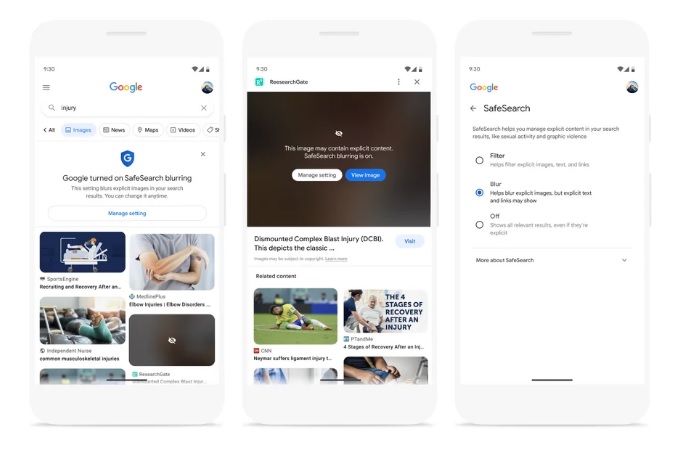

The biggest change, and one that was sorely needed, isn't really about your privacy. It does protect you and your children from seeing inappropriate content, though, and that's important.

You probably know what Google SafeSearch is. It's a filter that hides search results for specific content like gore or nudity. It's mostly an image search thing, but it can filter text results, too.

You probably also turned it off for yourself but have it enabled for a kid's account. But that does nothing when the kiddos happen to grab your phone or use your laptop while you're signed in.

Now the default, even when SafeSearch is turned off, is to blur content that isn't fit for everyone. The image itself is still there, and so is the result, so you can click to visit, but you aren't going to have vehicle accident photos or some dude's wang pop up in the results unless you choose to turn the blur feature off.

This is good. I like seeing Google give us control over the content that appears on our screens. It's exactly the kind of change we deserve.

Results about you

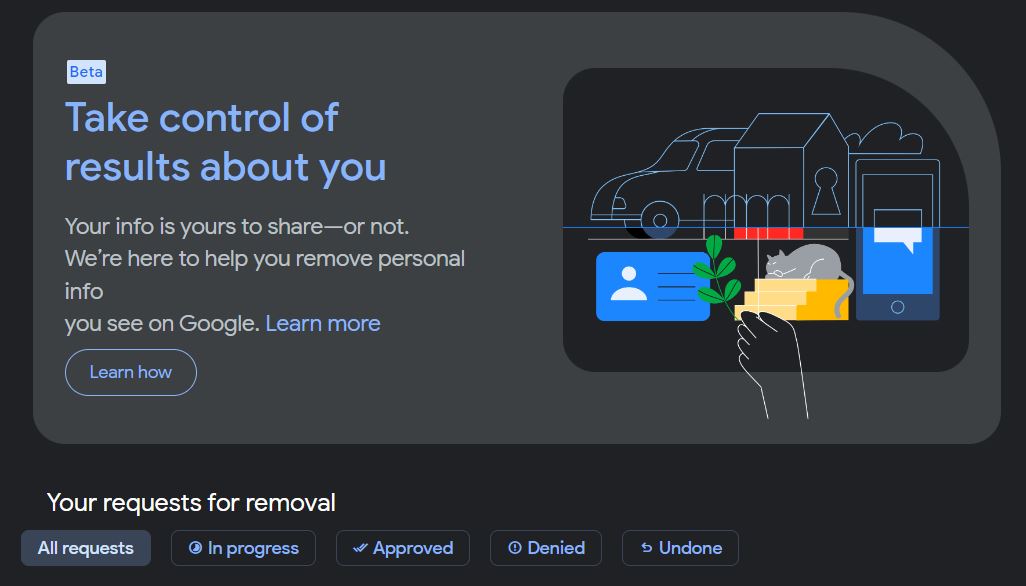

Here's where things are a bit lacking. Google has changed — they say improved — how you can find and possibly remove content about you from search results. Remember, this only removes the search hit, not the content itself, because Google has no control over that.

The new feature is a dedicated "Results about you" page where you can find everything Google sees when you search your name. So far, this is good.

You can also use forms and tools to have private information removed directly from the "Results about you" page as well as set it to highlight new instances where your personal data shows up in Google.

One of the web's longest-running tech columns, Android & Chill is your Saturday discussion of Android, Google, and all things tech.

The dashboard and notification feature is excellent. Even if you don't care about your address or phone number on the internet, other people do. Making it easier to keep track of it all is something that should have been there from the beginning.

Too bad it's hidden on a separate web page and not part of the search itself. Google is more than happy to force ads at the top of your search results, and soon, the first thing you'll see when you Google something is a bunch of drivel written by AI that you can safely ignore.

This feature belongs at the top of the Google Search page, not in a tool you can reach only by clicking on your profile or knowing the URL. It's goo.gle/resultsaboutyou if you need or want it. Of course, it's only in the U.S. for now, so you need to use a VPN if you live elsewhere.

You can remove some explicit photos of yourself but not all of them

This is a problem. Google has updated its policies about how it handles "explicit or intimate personal imagery" and what it takes to get it removed. At first, everything looks good: visit this page and you can click to start a removal request for pics or videos of yourself that you don't want to be publicly available.

The criteria for removal are still just as bad, though. For Google to consider the content for removal, it must meet the following requirements:

- The imagery shows you (or the individual you're representing) nude, in a sexual act, or an intimate state.

- You (or the individual you're representing) didn't consent to the imagery or the act and it was made publicly available OR the imagery was made available online without your consent.

- You are not currently being paid for this content online or elsewhere.

Who decides what a sexual act or intimate state is? What if a third party says you did consent, but you say you didn't? If you see a photo of you that you don't like and didn't give someone permission to post, Google should just yank it off search results. Period. Full stop.

I don't know why Google won't just do that, but until it does, Google is in control and not you. You should not have to ask permission to get naked photos that your ex put on the internet removed from Google results.

Number three is also going to upset some OnlyFans models, but that's how it's always been, and there isn't much change there. DMCA is the answer.

The way the company handles SafeSearch is awesome for anyone with children using their devices and is exactly the kind of feature we need from Google. Give us control and let us decide what we want — and don't want — to see.

The privacy changes are a case where Google has to do better. Our information, especially our photos, isn't just there for Google to use however it likes. We deserve better ways to mark it as hands-off where the search giant is concerned.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.