Google is training its AI model to decode what dolphins are saying

DolphinGemma is being trained to understand dolphin sounds and possibly decipher their language.

What you need to know

- Google is collaborating with researchers at Georgia Tech and the Wild Dolphin Project to help them understand dolphins better.

- Dubbed DolphinGemma, the LLM is based on Gemma, Google's open large language model.

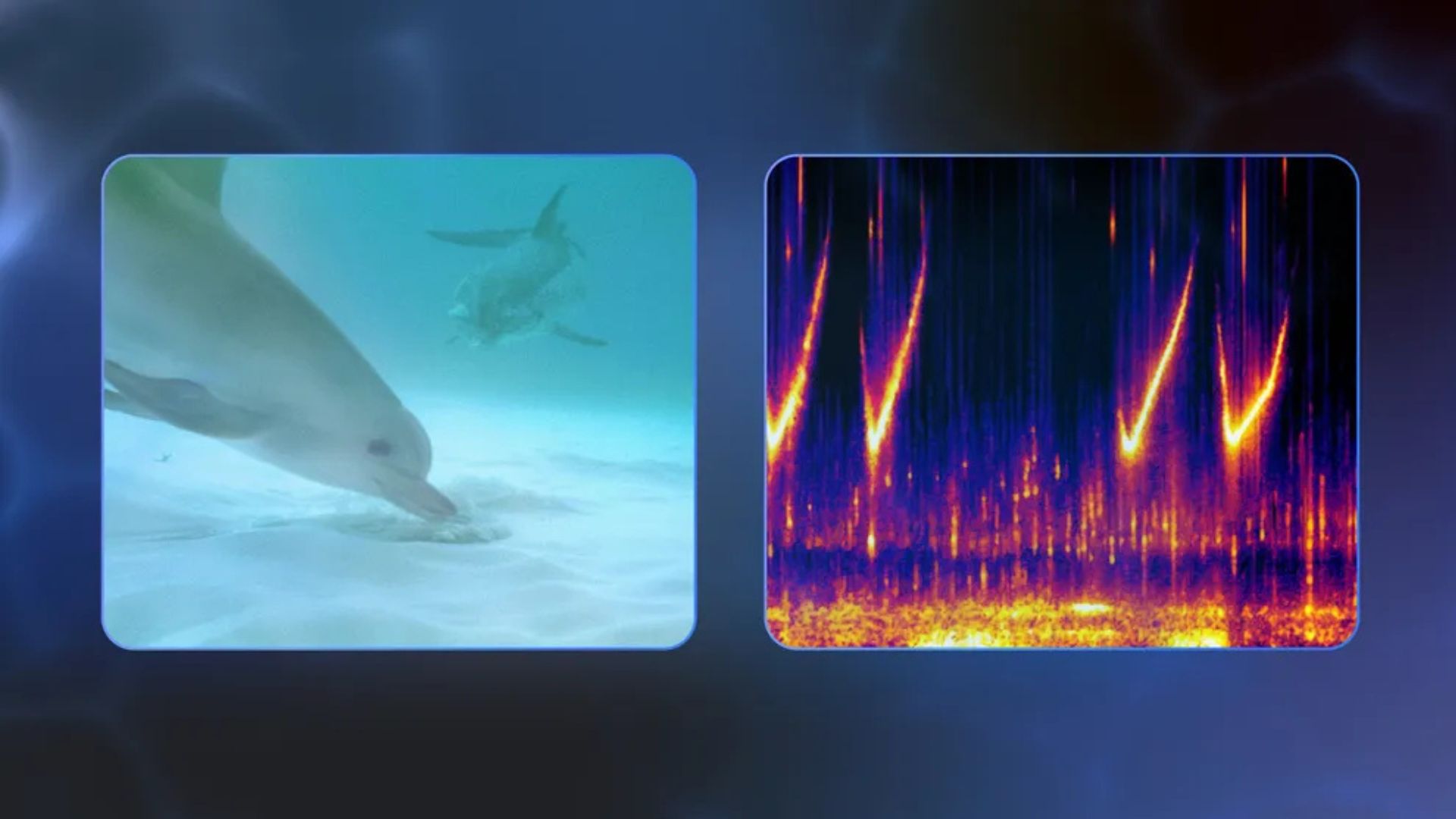

- DolphinGemma will wade through audio recordings to analyze the sounds and clicks these aquatic mammals make.

- The adapted LLM will then identify communication patterns and predict what a dolphin might say next.

If Dory can talk to a whale, then why can't we bridge the communication gap with real dolphins? It seems Google and a group of scientists have been hard at work trying to make sense of the squeaks and clicks of these aquatic mammals.

To mark National Dolphin Day earlier this month, Google shared that, in collaboration with researchers at Georgia Tech and the field research of the Wild Dolphin Project (WDP), it has been training a large language model called DolphinGemma. This LLM is helping scientists study how dolphins communicate, and in the future it could reveal what they're saying, too.

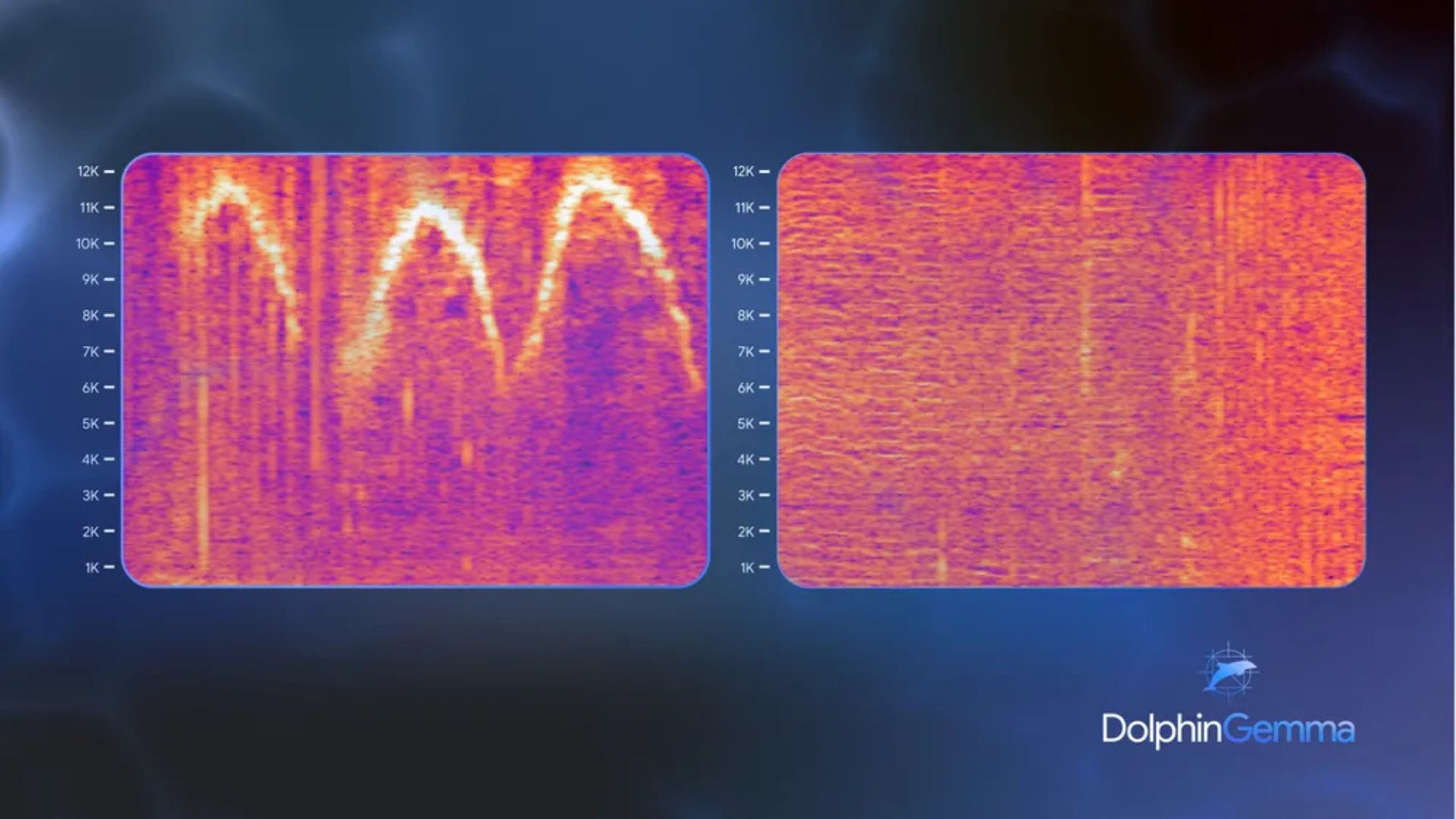

According to Google's Keyword blog, WDP's primary focus is observing and analyzing the dolphins' communication and social interactions. The team has been able to correlate certain sounds with specific behaviors. For instance, signature whistles (unique names) are usually used by mother dolphins to reunite with their calves, burst-pulse "squawks" are often heard during fights, and "click buzzes" are often used during courtship or while chasing sharks.
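To make the idea of correlating sounds with behaviors concrete, here is a minimal sketch that tallies how often each sound type co-occurs with each observed behavior. The annotations below are hypothetical and purely illustrative (not WDP data), and this simple frequency count is not presented as WDP's actual method.

```python
from collections import Counter, defaultdict

# Hypothetical field annotations: (sound type, behavior observed at the time).
# These labels are illustrative only, not real WDP observations.
observations = [
    ("signature whistle", "mother-calf reunion"),
    ("signature whistle", "mother-calf reunion"),
    ("burst-pulse squawk", "fighting"),
    ("burst-pulse squawk", "fighting"),
    ("burst-pulse squawk", "courtship"),
    ("click buzz", "courtship"),
    ("click buzz", "chasing sharks"),
    ("click buzz", "courtship"),
]

def behavior_table(pairs):
    """Count how often each behavior co-occurs with each sound type."""
    table = defaultdict(Counter)
    for sound, behavior in pairs:
        table[sound][behavior] += 1
    return table

def most_likely_behavior(table, sound):
    """Return (behavior, share of observations) for the behavior seen most with `sound`."""
    counts = table.get(sound)
    if not counts:
        return None  # this sound type was never observed
    behavior, n = counts.most_common(1)[0]
    return behavior, n / sum(counts.values())

table = behavior_table(observations)
for sound in table:
    behavior, share = most_likely_behavior(table, sound)
    print(f"{sound}: {behavior} ({share:.0%})")
```

With this toy data, each sound type maps to the behavior it most often accompanies, along with how consistent that pairing is.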

Where does AI come in, you ask? Building on its open Gemma model, Google has created DolphinGemma, which is essentially an AI model that takes in audio and processes it.

WDP scientists have collected a large library of recordings of wild Atlantic spotted dolphins, and to help interpret them, these clicks and squawks are fed into the LLM.

The AI model then looks for patterns in these sounds. For instance, if a squawk is followed by two whistles, how often does the dolphin "communicate" that way? What's more, once DolphinGemma learns these patterns, it tries to predict the sound a dolphin might make next.
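The two ideas in that paragraph, counting how often a pattern occurs and predicting the next sound, can be illustrated with a very simple frequency-count (bigram) model. This is a minimal sketch under loud assumptions: the sound labels are hypothetical and hand-written, whereas DolphinGemma is a neural model built on Gemma that works from the recorded audio itself, so the code only shows the concept, not Google's implementation.

```python
from collections import Counter, defaultdict

# Hypothetical sequence of sound labels from one recording session.
# DolphinGemma works on audio, not on hand-written labels like these.
sequence = ["squawk", "whistle", "whistle", "click", "squawk", "whistle",
            "whistle", "squawk", "click", "click", "squawk", "whistle", "whistle"]

def count_pattern(seq, pattern):
    """Count (possibly overlapping) occurrences of `pattern` in `seq`."""
    k = len(pattern)
    return sum(1 for i in range(len(seq) - k + 1) if seq[i:i + k] == pattern)

def train_bigrams(seq):
    """For each sound, count which sound immediately follows it."""
    following = defaultdict(Counter)
    for current, nxt in zip(seq, seq[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model, sound):
    """Predict the most frequent follower of `sound`, or None if it was never seen."""
    counts = model.get(sound)
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# How often is a squawk followed by two whistles?
print(count_pattern(sequence, ["squawk", "whistle", "whistle"]))  # 3

model = train_bigrams(sequence)
print(predict_next(model, "squawk"))  # whistle
print(predict_next(model, "click"))   # squawk
```

A bigram model only looks one sound back; a large model like DolphinGemma can take much longer context into account, which is part of why a neural approach is attractive for this kind of data.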

DolphinGemma makes it easier for scientists to spot these patterns and reduces the need for manual analysis, which would take far longer. "The model can help researchers uncover hidden structures and potential meanings within the dolphins' natural communication," the post added.


Nandika Ravi is an Editor for Android Central. Based in Toronto, after rocking the news scene as a Multimedia Reporter and Editor at Rogers Sports and Media, she now brings her expertise into the Tech ecosystem. When not breaking tech news, you can catch her sipping coffee at cozy cafes, exploring new trails with her boxer dog, or leveling up in the gaming universe.
