AI probably won't drive mankind to extinction but it can be harmful

AI is already hurting some very vulnerable people.

Over 350 tech experts, AI researchers, and industry leaders signed the Statement on AI Risk published by the Center for AI Safety this past week. It's a succinct, single-sentence warning for us all:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

So the AI experts, including hands-on engineers from Google and Microsoft who are actively unleashing AI upon the world, think AI has the potential to be a global extinction event in the same vein as nuclear war. Yikes.

I'll admit I thought the same thing a lot of folks did when they first read this statement: that's a load of horseshit. Yes, AI has plenty of problems, and I think it's a bit early to lean on it as much as some tech and news companies are doing, but that kind of hyperbole is just silly.

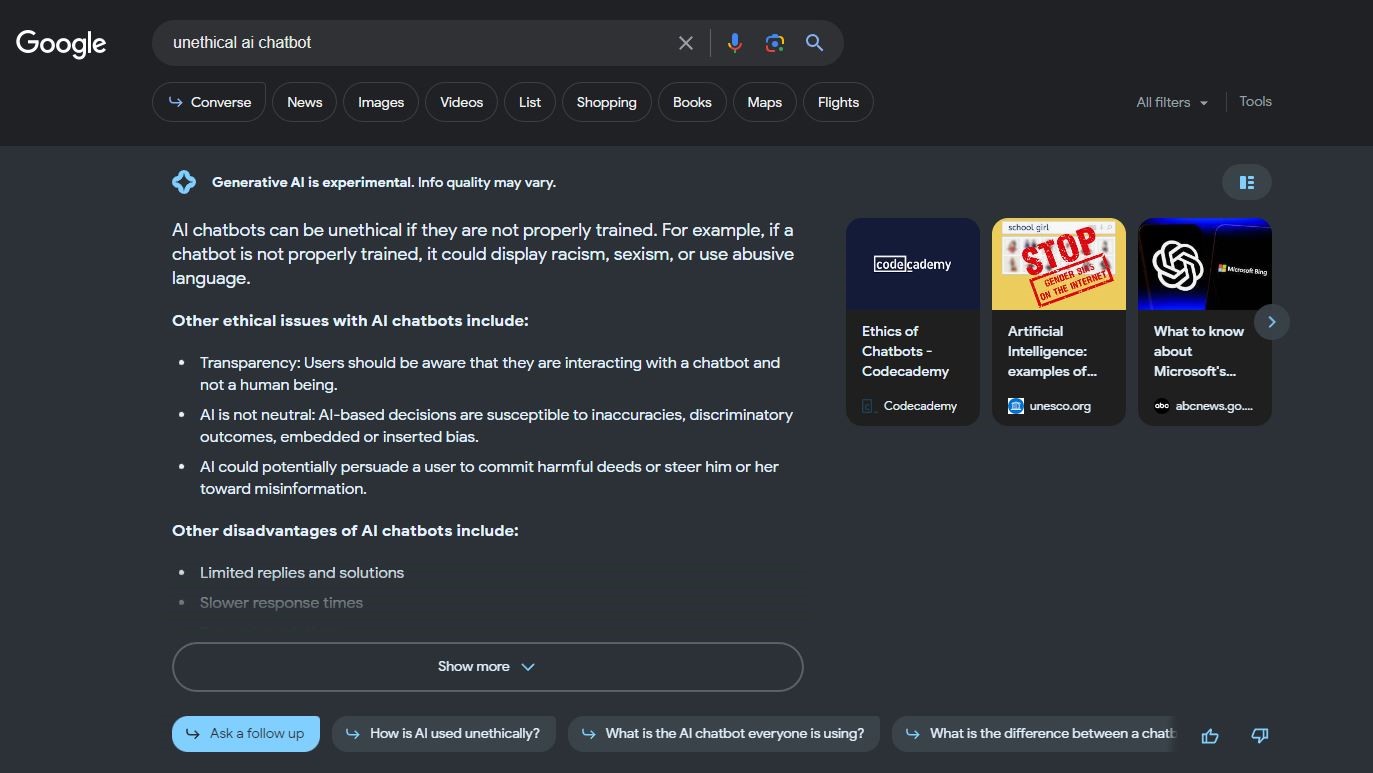

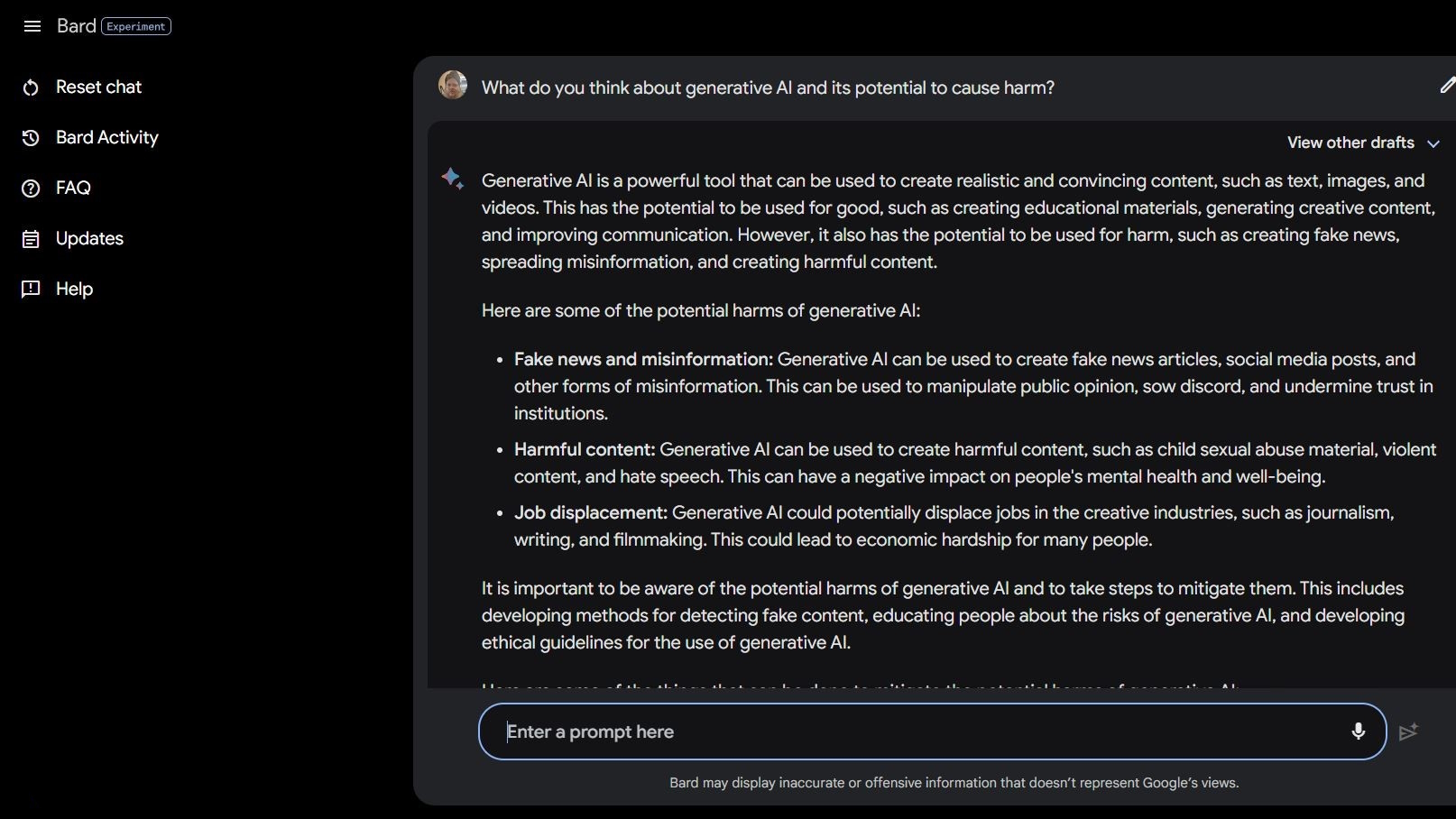

Then I did some Bard Beta Lab AI Googling and found several ways that AI is already harmful. Some of society's most vulnerable are even more at risk because of generative AI and just how stupid these smart computers actually are.

The National Eating Disorders Association fired its helpline operators on May 25, 2023, and replaced them with Tessa the ChatBot. The workers were in the midst of unionizing, but NEDA claims "this was a long-anticipated change and that AI can better serve those with eating disorders" and says the decision had nothing to do with six paid staffers and assorted volunteers trying to unionize.

On May 30, 2023, NEDA disabled Tessa the ChatBot because it was offering harmful advice to people with serious eating disorders. Officially, NEDA is "concerned and is working with the technology team and the research team to investigate this further; that language is against our policies and core beliefs as an eating disorder organization."

In the U.S., there are 30 million people with serious eating disorders, and 10,200 will die each year as a direct result of them. That's roughly one every hour.

Then we have Koko, a mental-health nonprofit that used AI as an experiment on suicidal teenagers. Yes, you read that right.

At-risk users were funneled to Koko's website from social media where each was placed into one of two groups. One group was provided a phone number to an actual crisis hotline where they could hopefully find the help and support they needed.

The other group got Koko's experiment: they took a quiz and were asked to identify the things that triggered their thoughts and what they were doing to cope with them.

Once finished, the AI asked them if they would check their phone notifications the next day. If the answer was yes, they got pushed to a screen saying "Thanks for that! Here's a cat!" Of course, there was a picture of a cat, and apparently, Koko and the AI researcher who helped create this think that will make things better somehow.

I'm not qualified to speak on the ethics of situations like this where AI is used to provide diagnosis or help for folks struggling with mental health. I'm a technology expert who mostly focuses on smartphones. Most human experts agree that the practice is rife with issues, though. I do know that the wrong kind of "help" can and will make a bad situation far worse.

If you're struggling with your mental health or feeling like you need some help, please call or text 988 to speak with a human who can help you.

These kinds of stories tell us two things: AI is very problematic when used in place of qualified people in a crisis, and real people who are supposed to know better can be dumb, too.

AI in its current state is not ready to be used this way. Not even close. University of Washington professor Emily M. Bender makes a great point in a statement to Vice:

"Large language models are programs for generating plausible-sounding text given their training data and an input prompt. They do not have empathy, nor any understanding of the language they are producing, nor any understanding of the situation they are in. But the text they produce sounds plausible and so people are likely to assign meaning to it. To throw something like that into sensitive situations is to take unknown risks."

I want to deny what I'm seeing and reading so I can pretend that people aren't taking shortcuts or trying to save money by using AI in ways that are this harmful. The very idea is sickening to me. But I can't, because AI is still dumb and, apparently, so are a lot of the people who want to use it.

Maybe the idea of a mass extinction event due to AI isn't such a far-out idea after all.

Jerry is an amateur woodworker and struggling shade tree mechanic. There's nothing he can't take apart, but many things he can't reassemble. You'll find him writing and speaking his loud opinion on Android Central and occasionally on Threads.